Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- LLM-powered malware mutating mid-attack to evade security defences

- Moonshot’s K2 Thinking matching GPT-5 performance at 8x lower cost

- Open-weight models leading agentic tool-use benchmarks against closed rivals

Malware gets an AI upgrade

The impacts of cyber attacks are increasingly part of the headlines, but thus far AI’s role has largely been limited to productivity boosts for bad actors, and enhanced content for social engineering. New cyber security research from the Google Threat Intelligence Group (GTIG) this week suggests we’re seeing the first signs of direct LLM use in malware and active operations. The research states: “This marks a new operational phase of AI abuse, involving tools that dynamically alter behaviour mid-execution.”

This research builds on their Adversarial Misuse of Generative AI report from January 2025, which documented how state-sponsored actors from over 20 countries were already exploiting Gemini for various stages of cyber operations, from reconnaissance to payload development. The new research identifies three malware families (PROMPTFLUX, PROMPTSTEAL and PROMPTLOCK) that connect to LLM services during attacks, rewriting their own code to evade detection. One piece of malware queries Gemini’s API to mutate its VBScript faster than traditional defences can respond. A simple idea, but now operationally deployed.

Cyber attacks are a growing concern. According to a newly published European Threat Landscape Report from CrowdStrike, ransomware deployment has accelerated by 48% in the past year, with average attack times now just 24 hours from initial breach to encryption. The economic impacts can be significant; estimates suggest the Jaguar Land Rover attack in August has cost the UK economy £1.9 billion, making it the most financially damaging cyber incident in British history. The attack forced production shutdowns across JLR’s UK plants for over five weeks, reducing output by nearly 5,000 vehicles weekly and cascading through more than 5,000 organisations in the supply chain, with smaller suppliers forced to lay off nearly half their workforce in some cases. The incident required a £1.5 billion government loan guarantee to prevent wider economic collapse and prompted the Bank of England to acknowledge measurable impacts on UK GDP.

For the UK specifically, the convergence of state-sponsored and criminal activity presents unique challenges. Manufacturing, professional services and technology sectors face constant targeting. Iranian groups like APT42 use AI to craft phishing campaigns tailored to British defence organisations. North Korean actors deploy HTTPSpy malware against defence manufacturers whilst spoofing UK energy companies. Actors are exploiting vulnerabilities in AI software to gain initial access, harvest credentials, and deploy ransomware, treating AI development infrastructure including APIs, serialised models, and dependencies as primary targets. Google’s Secure AI Framework (SAIF) identifies eight primary risk categories. Prompt injection allows attackers to manipulate AI behaviour. Model theft threatens intellectual property. Data poisoning corrupts training sets. Sensitive data disclosure exposes everything from user conversations to system credentials. The underground economy has already adapted, with subscription-based jailbroken AI services available for as little as £50 monthly, offering uncensored capabilities for writing malware and crafting business email content.

So where do we go from here? If attackers use AI to dynamically mutate malware, the logical counter is AI that dynamically adapts defences. CrowdStrike’s own Charlotte AI promises a fleet of specialised AI agents trained on millions of real SOC decisions, with each agent handling specific tasks like malware analysis, threat triage, and correlation rule building. Their AgentWorks platform lets security teams build custom agents using plain language without coding, creating an “agentic security workforce”. Darktrace’s Self-Learning AI creates a baseline of normal behaviour for each organisation’s digital environment, then uses its Antigena system to contain threats. Vectra AI maps attacker behaviour against the MITRE ATT&CK framework, using ML to detect command-and-control communications, lateral movement, and data exfiltration patterns. These products represent part of the defensive arms race: rather than racing to update signatures against polymorphic threats, they use AI to understand intent, predict next moves, and respond much more quickly.

But protection actually starts with understanding that attacks often gain initial access through traditional social engineering. Google’s November 2025 fraud advisory highlights how cybercriminals now exploit job seekers with fake recruitment sites, deploy AI-impersonation apps promising “exclusive access” to harvest credentials, and run fraud recovery schemes targeting previous victims with promises to reclaim lost funds. The advisory documents key recent vectors, from negative review extortion against businesses, to malicious VPN apps containing banking trojans, to seasonal shopping scams using hijacked brand terms, all of which are surging. These attacks succeed not through technical sophistication but by exploiting human psychology: urgency, fear, opportunity, and trust. They remind us that whilst AI accelerates both attack and defence capabilities, the human element remains the critical vulnerability requiring constant vigilance.

The emergence of LLM-in-the-loop malware is both a technical evolution and a demonstration of the adaptability of cybercrime operations. As attack tools become autonomous, traditional security approaches based on recognising known patterns become obsolete. And yet the defensive response is equally powerful. The challenge isn’t technology but education, execution speed, and a renewed thoroughness that we all have a responsibility to employ.

Takeaways: Whilst the technology arms race accelerates, basic human vulnerabilities remain the primary means of attack, making security awareness and robust identity controls more important than ever. These are the three key areas for organisations to make progress:

- Educate your workforce specifically on AI-enhanced social engineering – Security awareness training reduces successful phishing by 40-70%, and with AI making scams more convincing, regular training on fake recruitment schemes, deepfake calls, and AI-impersonation tactics is essential. Focus on the psychology attackers exploit: urgency, authority, and fear.

- Lock down your AI integration – Treat every AI API call like a financial transaction. Implement gateways that manage connections, log all prompts and responses, and rotate API tokens regularly. Think of it as putting a corporate credit card approval process around AI usage; no employee should be able to connect company systems to external AI without explicit permission.

- Build an integration-centric AI inventory (AI-BOM) – Document every AI agent, model, MCP server, and integration in your organisation, including shadow AI. Track dependencies, data flows, and decision-making capabilities. This is your AI-BOM (Bill of Materials), and just like software components, you need to know what you’re running, where it came from, and what it can access.
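As a rough illustration of the AI-BOM idea in the last takeaway, an inventory can start as a simple list of structured records. The sketch below is a minimal example, not a standard schema: the `AIBomEntry` fields, the helper functions, and the sample entries are all hypothetical and would need adapting to your own environment.

```python
from dataclasses import dataclass, field


@dataclass
class AIBomEntry:
    """One row in a hypothetical AI bill of materials (illustrative schema only)."""
    name: str       # the agent, model, MCP server, or integration
    kind: str       # e.g. "agent", "model", "mcp_server", "integration"
    source: str     # where it came from (vendor, registry, internal team)
    approved: bool  # has it been through explicit sign-off?
    data_access: list[str] = field(default_factory=list)  # systems it can read or write
    depends_on: list[str] = field(default_factory=list)   # other entries it relies on


def shadow_ai(inventory: list[AIBomEntry]) -> list[str]:
    """Names of entries that are running without explicit approval."""
    return [entry.name for entry in inventory if not entry.approved]


def can_reach(inventory: list[AIBomEntry], system: str) -> list[str]:
    """Names of entries that have access to the given system, sorted."""
    return sorted(entry.name for entry in inventory if system in entry.data_access)


# Made-up sample entries, purely for illustration.
inventory = [
    AIBomEntry("support-agent", "agent", "internal", True,
               data_access=["crm"], depends_on=["hosted-llm"]),
    AIBomEntry("hosted-llm", "model", "external vendor", True),
    AIBomEntry("notes-summariser", "integration", "browser extension", False,
               data_access=["crm", "email"]),
]

print(shadow_ai(inventory))
print(can_reach(inventory, "crm"))
```

Even a flat list like this answers the three questions in the takeaway: what is running, where it came from, and what it can access.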

Moonshot challenges the giants

This week’s biggest open-weight model release, from Chinese lab Moonshot, looks to be one of the most significant this year. Kimi K2 Thinking is a trillion-parameter Mixture-of-Experts model that activates about 32B parameters per request and scores 44.9% on Humanity’s Last Exam and 60.2% on BrowseComp, edging past GPT-5 and Claude 4.5. The standout claim is stability across long tool chains: it can run roughly 200–300 sequential calls without falling apart. This looks like the convergence of closed and open models at the frontier, and it will have the big labs very worried.

Pricing will increase anxiety, with Kimi K2 Thinking on the Moonshot infrastructure coming in at $0.60 and $2.50 per million input and output tokens respectively, whilst GPT-5 sits at $1.25 / $10. At these list prices, that makes K2 Thinking roughly 2x cheaper on input and 4x cheaper on output.
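The ratios implied by the quoted prices are easy to check. The sketch below uses only the per-million-token figures given above; the workload in `job_cost` (10 million input tokens, 2 million output tokens) is a made-up example, and real bills will depend on caching, discounts, and how many tokens each model actually emits.

```python
# Prices in USD per million tokens, as quoted in the text.
K2_THINKING = {"input": 0.60, "output": 2.50}
GPT_5 = {"input": 1.25, "output": 10.00}


def price_ratio(expensive, cheap):
    """How many times more the expensive model costs, per token type."""
    return {kind: expensive[kind] / cheap[kind] for kind in cheap}


def job_cost(prices, input_tokens, output_tokens):
    """Total cost in USD for a job with the given token counts."""
    total = input_tokens * prices["input"] + output_tokens * prices["output"]
    return total / 1_000_000


ratios = price_ratio(GPT_5, K2_THINKING)
print(ratios)  # about 2.1 on input, 4.0 on output

# Hypothetical workload: 10M input tokens, 2M output tokens.
k2_cost = job_cost(K2_THINKING, 10_000_000, 2_000_000)
gpt5_cost = job_cost(GPT_5, 10_000_000, 2_000_000)
print(round(k2_cost, 2), round(gpt5_cost, 2))
```

The blended saving depends on the input/output mix: output-heavy agentic workloads sit closer to the 4x end.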

Quantisation is the compression of the AI world and K2 leans on it heavily. It uses “INT4” weights trained with quantisation-aware methods, and Moonshot says it gets about 2x speed-ups while keeping quality close to higher-precision baselines. In plain terms, INT4 means the model stores each weight with 4 bits instead of the usual 16 or 32. That makes the model smaller and faster to move around, but it throws away detail, which can show up as lost accuracy or brittleness in tricky cases. K2’s training process works by simulating quantisation effects during fine-tuning, allowing weights to adapt and compensate for precision loss.
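To make the idea concrete, here is a minimal sketch of symmetric 4-bit quantisation on a handful of weights. It is a generic textbook scheme, not Moonshot’s actual method: the function names and sample weights are illustrative, and real systems typically quantise in groups or per channel rather than with one scale for everything.

```python
def quantise_int4(weights):
    """Map floats to signed 4-bit integer codes (-8..7) using one shared scale."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 7                            # largest weight maps to code 7
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantise(codes, scale):
    """Recover approximate floats from the 4-bit codes."""
    return [c * scale for c in codes]


def fake_quantise(weights):
    """Quantise then dequantise: the round trip that quantisation-aware
    training commonly simulates so weights can adapt to the rounding."""
    codes, scale = quantise_int4(weights)
    return dequantise(codes, scale)


weights = [0.70, -0.32, 0.12, 0.01, -0.66]  # made-up example values
codes, scale = quantise_int4(weights)
restored = fake_quantise(weights)
errors = [abs(w - r) for w, r in zip(weights, restored)]

print(codes)
print([round(r, 2) for r in restored])
print(round(max(errors), 3))
```

Note how the small weight 0.01 collapses to zero: that is the “thrown away detail” in practice, and it is why training with the rounding in the loop can help the remaining weights compensate.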

Some users have reported it can get stubborn, locking onto a view and refusing to explore alternatives, which hurts when the initial step is wrong. It also tends to assume facts to support its own argumentation, which is grating in creative tasks. On creativity, outputs are often thin and need heavy prompting. And local deployment remains tough. Even with INT4, guidance suggests around 512GB system RAM and 32GB VRAM as a floor for smooth use. A 1.8-bit variant around 245GB has been tested by some, but they still report needing 64GB RAM and an RTX 4090 for slow, basic runs. “Open” doesn’t help much if only a handful of labs can operate it.

Results were reported at INT4 precision, and researchers have raised contamination and comparability questions. The long-chain robustness is promising, but any precision drop can compound over many steps. That may dent success rates in messy, real-world workflows even if headline scores look strong. And yet there’s no denying that this is significant. As Clement Delangue, co-founder of Hugging Face, stated: “Kimi K2 Thinking feels like a big milestone for open-source AI. The first time in a while that open-source gets ahead of proprietary APIs on their big area of focus (agents).”
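The compounding concern can be illustrated with a back-of-envelope calculation. The sketch below assumes every step in a tool chain must succeed and that steps fail independently, which is a strong simplification; the per-step reliability figures are hypothetical and are not measurements of K2 Thinking or any other model.

```python
def chain_success(per_step_success, steps):
    """Probability that every step in a chain succeeds, assuming independence."""
    return per_step_success ** steps


# Hypothetical per-step reliabilities over a 300-call chain.
high = chain_success(0.999, 300)
slightly_lower = chain_success(0.995, 300)

print(f"{high:.0%}")            # about 74%
print(f"{slightly_lower:.0%}")  # about 22%
```

Under these assumptions, a drop of less than half a percentage point per step is the difference between most long runs finishing and most of them failing.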

And there’s also the strategic angle; this is the continued Chinese software-first response to US chip controls. If access to top silicon is constrained, make the model leaner so it matters less. By pushing INT4-native training and publishing strong numbers, Moonshot is saying efficiency can beat raw compute. That challenges the “more GPUs, bigger models” reflex in the US labs and creates a path where compute-poor teams can still compete, at least on carefully tested tasks.

Takeaways: The aggressive quantisation strategy shows that accepting controlled quality loss can unlock substantial cost savings, apparently without sacrificing long-chain agentic tool use. Whilst the model has clear limitations in creativity and flexibility, its economics and long-chain stability suggest we’re entering an era where open offerings genuinely threaten the business models of closed AI labs, not through matching their scale but by making scale itself less relevant.

EXO

Top agentic tool users

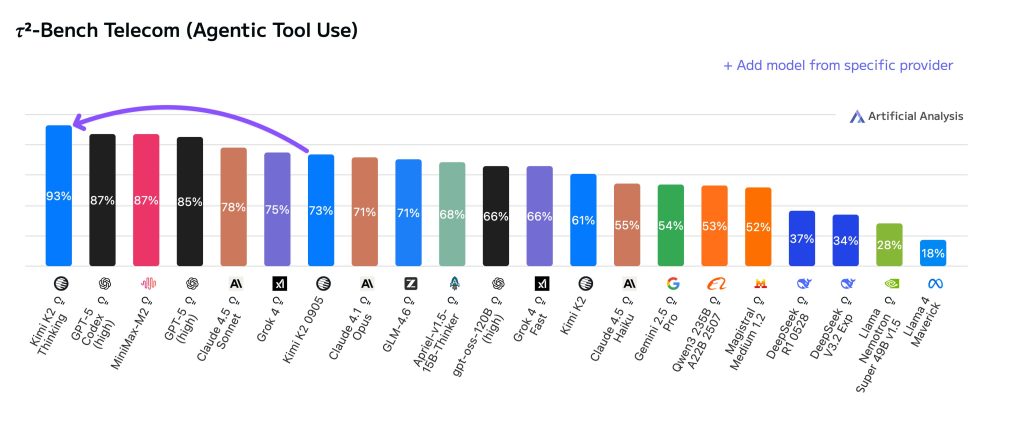

This chart helps us understand the new elite of tool-using models. Kimi K2 Thinking’s 93% score on τ²-Bench looks impressive, outperforming GPT-5. The benchmark tests dual-control scenarios where AI agents must guide humans through complex technical support tasks, maintaining coherence across hundreds of interactions.

In the overall Artificial Analysis Intelligence Index, K2 Thinking comes in just behind GPT-5, and ahead of Grok 4 and Claude 4.5 Sonnet. (Version 3.0 of the index incorporates 10 evaluations: MMLU-Pro, GPQA Diamond, Humanity’s Last Exam, LiveCodeBench, SciCode, AIME 2025, IFBench, AA-LCR, Terminal-Bench Hard, and τ²-Bench.) Interestingly, running all of these benchmarks cost $379 for K2 Thinking, versus $1,888 for Grok 4 and $913 for GPT-5. See the full analysis breakdown and track agentic performance, cost and speed on the excellent Artificial Analysis website.

Weekly news roundup

This week’s developments show major tech companies solidifying AI partnerships with billion-dollar deals, whilst regulatory pressures mount around AI safety standards and the real-world impact on employment becomes increasingly tangible.

AI business news

- Altman says OpenAI doesn’t want a government bailout for AI (Signals confidence in private funding models for frontier AI development and positions OpenAI as financially independent from government support.)

- ‘Vibe coding’ named word of the year (Reflects the cultural shift in how developers are using AI tools to augment their programming workflows and creative processes.)

- Apple to pay Google $1 billion per year for Siri’s custom Gemini AI model (Demonstrates how even tech giants are licensing AI capabilities rather than building everything in-house, reshaping competitive dynamics.)

- Snap shares surge as $400 million Perplexity deal rekindles AI ambitions (Shows how AI partnerships are becoming crucial value drivers for social media companies seeking to enhance user experiences.)

- OpenAI signs $38 billion deal with Amazon (Represents one of the largest AI infrastructure partnerships to date, providing critical compute resources for scaling frontier models.)

AI governance news

- EU set to water down landmark AI act after Big Tech pressure (Highlights the ongoing tension between regulatory ambitions and industry lobbying in shaping AI governance frameworks.)

- OpenAI unveils blueprint for teen AI safety standards (Addresses critical concerns about young users’ interactions with AI systems and sets precedents for age-appropriate AI design.)

- Senators want companies to report AI layoffs amid job cuts (Reflects growing political awareness of AI’s employment impacts and potential regulatory responses to workforce displacement.)

- Amazon sends legal threats to Perplexity over agentic browsing (Illustrates emerging legal battles around AI agents accessing web content and the boundaries of automated information gathering.)

- AI’s capabilities may be exaggerated by flawed tests, study says (Raises important questions about AI evaluation methodologies and the gap between benchmark performance and real-world capabilities.)

AI research news

- Mathematical exploration and discovery at scale (Showcases AI’s growing potential to accelerate mathematical research and discover new theorems autonomously.)

- Kosmos: an AI scientist for autonomous discovery (Presents advances in AI systems that can independently conduct scientific research, potentially transforming research workflows.)

- From black box to glass box: a practical review of explainable artificial intelligence (XAI) (Provides crucial insights into making AI systems more interpretable and trustworthy for enterprise deployment.)

- Thinking with video: video generation as a promising multimodal reasoning paradigm (Explores how video generation capabilities could enhance AI’s reasoning abilities beyond text and static images.)

- Context engineering 2.0: the context of context engineering (Advances understanding of how to optimise context windows and prompting strategies for better AI performance.)

AI hardware news

- Musk plans Tesla AI chip factory, considers Intel partnership (Signals potential disruption in AI chip manufacturing with new entrants challenging Nvidia’s dominance.)

- Nvidia hit as White House blocks sales of new AI chip to China (Highlights ongoing geopolitical tensions affecting AI hardware supply chains and international technology transfer.)

- Foxconn hires humanoid robots to make AI servers for Nvidia (Demonstrates the recursive nature of AI development with robots building infrastructure for AI systems.)

- TPU v7, Google’s answer to Nvidia’s Blackwell is nearly here (Shows intensifying competition in AI accelerator markets as companies seek alternatives to Nvidia’s offerings.)

- Introducing the frontier data centers hub (Provides essential tracking of AI infrastructure buildout, crucial for understanding computational capacity trends.)