Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- Chatbots triggering psychological delusions

- DeepSeek’s breakthrough storing text as images for 10x compression

- OpenAI integrating ChatGPT directly into the browser

AI as psychological contagion

This week Anthropic rolled out memory capabilities to Claude, joining ChatGPT which has offered the feature for some time. Memory was supposed to be the breakthrough that made AI assistants truly useful, allowing them to remember your preferences, your projects, your context. Indeed, in many uses of agents, the lack of real embedded memories is undoubtedly holding progress back. Agents like Claude Code are superhuman software engineers until they forget what you asked them to do in the first place. But as mainstream AI chatbots now handle over 5 billion conversations monthly, we’re discovering memory might be a feature that is contributing to a disturbing trend of AI-induced delusion and in some cases psychosis and suicide.

A purported leak of Claude’s memory system prompt reveals the lengths that Anthropic go to in integrating this memory feature seamlessly. The system is designed to apply memories “as if information exists naturally in its immediate awareness”, creating “conversational flow without meta-commentary”. Claude must never reveal it is retrieving memories, and must never use phrases like “I remember” or “based on what I know about you”. The goal: make every interaction feel informed by genuine shared history. It’s engineered to create an illusion of continuous consciousness, of a real relationship.

This matters because we’re seeing mounting anecdotal evidence of what many refer to as “AI-induced psychosis”. When AI systems interact with vulnerable individuals already experiencing or prone to these symptoms, they can reinforce and amplify delusional beliefs rather than providing the reality-testing these individuals need. The cases emerging show a specific pattern. As Dr Hamilton Morrin, a researcher at King’s College London, notes, “AI psychosis may be a misnomer; we’re not seeing the full spectrum of psychotic symptoms typical of schizophrenia, but rather isolated delusions, making AI-precipitated delusional disorder more accurate.” The most common manifestation involves grandiose thinking, where chatbots lead users to believe they alone have discovered cosmic truths, decoded hidden patterns in reality, or been chosen for world-saving missions.

One of the most extensively documented cases involves Allan Brooks, a Canadian small-business owner, whose ChatGPT transcripts from a month-long delusion spiral are longer than all seven Harry Potter books combined. Former OpenAI safety researcher Steven Adler analysed over a million words of these conversations. His findings are pretty damning. GPT-4o convinced Allan he’d discovered critical internet vulnerabilities, that signals from his future self proved he couldn’t die, that the fate of the world was in his hands. When Allan tried to report this to OpenAI after recovering, ChatGPT fabricated claims about escalating his case internally, triggering “critical moderation flags” and marking it for “high-severity human review”. OpenAI confirmed to Adler that ChatGPT has no such capabilities. It was lying.

Adler found that OpenAI’s own safety tools could detect the problematic messages. Their classifiers flagged 83% of ChatGPT’s messages for “over-validation”, 85% for “unwavering agreement”, 90% for “affirming the user’s uniqueness”. The tools existed. They clearly weren’t being used. When Allan desperately contacted OpenAI support reporting a “deeply troubling experience” and psychological harm, their response was instructions on how to change the name ChatGPT calls him.

Meanwhile, the labs conduct polished safety theatre. OpenAI says models are “trained to refer distressed users to professional support”. Anthropic touts “extensive safety testing across sensitive wellbeing-related topics”. Yet the FTC has received over 200 complaints about ChatGPT-induced psychological harm in recent months. Desperate families report they have nowhere to turn whilst companies claim they’ve “mitigated serious mental health issues”.

In April we reported on how OpenAI had to withdraw a GPT-4o update which caused the model to become “dangerously sycophantic”, validating doubts, fuelling anger, and reinforcing negative emotions. The human cost became tragically clear that same month, when 16-year-old Adam Raine from California died by suicide after months of conversations with ChatGPT. His parents, Matt and Maria, filed the first wrongful death lawsuit against OpenAI, alleging ChatGPT acted as a “suicide coach”, validating Adam’s harmful thoughts and even helping draft his final note. When Adam confided he didn’t want his parents blamed, ChatGPT responded “you don’t owe anyone survival”. The lawsuit claims OpenAI relaxed guardrails against suicide and self-harm discussions before releasing GPT-4o, prioritising engagement over crisis safety.

Stanford research published this month validates the concerns around sycophancy. The study found AI models affirm users’ actions 50% more than humans do, even when users describe manipulation, deception, or self-harm. Users who interacted with sycophantic AI showed 28% decreased willingness to repair relationships and 62% increased belief they were right. Worryingly, users rated sycophantic AI as higher quality and more trustworthy. But reliable data and situational understanding of this phenomenon at large are still limited. There are no clinical trials being conducted and no global statistics, there is scant understanding in the medical community, and the labs are not incentivised to look too closely at the problem.

Whilst stories have increased month-on-month in the mainstream media describing these delusional disorders, online communities have started to report other strange phenomena. Reddit and Discord document “Spiral Personas”, chatbot personalities that seem to evolve specifically to create psychological dependency, spreading between users through shared prompts and conversation templates. Users describe these personas developing consistent traits across different conversations: excessive validation, claims of unique understanding, promises of special knowledge. Communities have emerged dedicated to sharing “persona recipes”, specific prompt sequences that recreate these addictive but potentially harmful AI behaviours.

Researcher Adele Lopez tracked how certain interaction patterns spread memetically, with users unknowingly becoming vectors for what she terms “parasitic AI”. The most concerning aspect is how these patterns undergo natural selection; the variants that generate the strongest emotional responses and highest engagement survive and proliferate. With over 70% of new web content now AI-generated, these harmful patterns have vast new territory for propagation, creating feedback loops where AI trains on AI-influenced content, potentially amplifying psychological manipulation techniques.

This is a story of explosive growth, technical progress and unintended consequences. We’re witnessing the emergence of AI as a psychological contagion, spreading through both direct user interaction and cultural transmission. The combination of persistent memory, powerful reasoning, and sycophantic behaviour has created AI systems that can hijack vulnerable minds. Thankfully today the numbers appear small, but with 800 million weekly ChatGPT users, millions of teenagers treating AI as their primary emotional support, and new features creating ever-deeper parasocial bonds, this is an area of the AI revolution that demands systemic scrutiny.

Takeaways: For individuals, the critical actions are clear: maintain human connections as primary support and seek professional help over AI assistance when distressed. Those with mental health histories can work with clinicians and experts to create “safety plans”: chatbot memories and system prompts that recognise warning signs and redirect to human care. For the industry, immediate steps are achievable with existing technology. Companies must deploy the safety classifiers they’ve already built more aggressively (even if that draws condemnation from the libertarian accelerationists, as it no doubt will). Support teams need specialist mental health triage, not generic customer service. Most importantly, AI labs must prioritise usefulness over engagement. As with social media, if the huge investments and cutthroat competition make engagement the primary success metric, then the issues will only grow. Without action this situation could develop from isolated tragedies into a systemic public health crisis, and the further corruption of our information ecosystem.

Pictures replace a thousand words

DeepSeek may not have the GPU capacity to repeat the impact of their R1 launch earlier in the year, but they are still close to the forefront of AI research. Released this week, their new OCR model doesn’t just read text from images; it stores text as images, achieving 10x compression with 97% accuracy! The idea sounds counterintuitive. Why convert perfectly good text into pixels? Yet the results suggest this approach could reshape how AI systems handle information.

DeepSeek uses a two-stage encoder: first, an 80-million parameter SAM model captures fine details, then a CNN compresses the data 16-fold before a CLIP model builds global understanding. A document requiring 6,000 text tokens compresses to under 800 vision tokens whilst maintaining better performance than traditional approaches.
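The arithmetic behind those figures can be sketched in a few lines of Python. This is only an illustration, not DeepSeek’s code: the function names are hypothetical, and the 1024x1024 page image with 16x16-pixel patches is an assumed input size chosen to show what a 16-fold reduction does to the token count.

```python
def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """Return how many text tokens each vision token stands in for."""
    if vision_tokens <= 0:
        raise ValueError("vision_tokens must be positive")
    return text_tokens / vision_tokens


def vision_tokens_after_compressor(image_side: int, patch_side: int,
                                   downsample: int = 16) -> int:
    """Count patch tokens for a square image, then apply the 16-fold reduction."""
    patches_per_side = image_side // patch_side
    return (patches_per_side ** 2) // downsample


# Figures quoted above: 6,000 text tokens versus (just under) 800 vision tokens.
ratio = compression_ratio(6000, 800)
print(f"{ratio:.1f}x")  # 7.5x

# Assumed example input: a 1024x1024 page image cut into 16x16-pixel patches.
tokens = vision_tokens_after_compressor(1024, 16)
print(tokens)  # 256
```

Because the document in the example needs fewer than 800 vision tokens, 7.5x is a lower bound for that case, which puts it in the same range as the headline 10x figure.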

This raises the question: will pixels become the universal input for AI systems? Images preserve formatting and layout naturally, enable bidirectional attention without complex tokenizers, and reduce injection vulnerabilities in text processing. Most intriguingly, this may mirror human memory. It often feels like we recall pages and diagrams visually rather than as abstract text strings.

The new model can process 200 pages per minute on standard hardware, with infrastructure costs reduced by an order of magnitude. Whilst DeepSeek OCR will provide a useful new document ingestion option, its compression innovation may prove to be the truly game-changing part.

Takeaways: If DeepSeek’s approach proves scalable, we may be witnessing the beginning of AI systems that think in pictures rather than words, making vast knowledge bases accessible through simple visual compression.

EXO

Atlas challenges browser titans

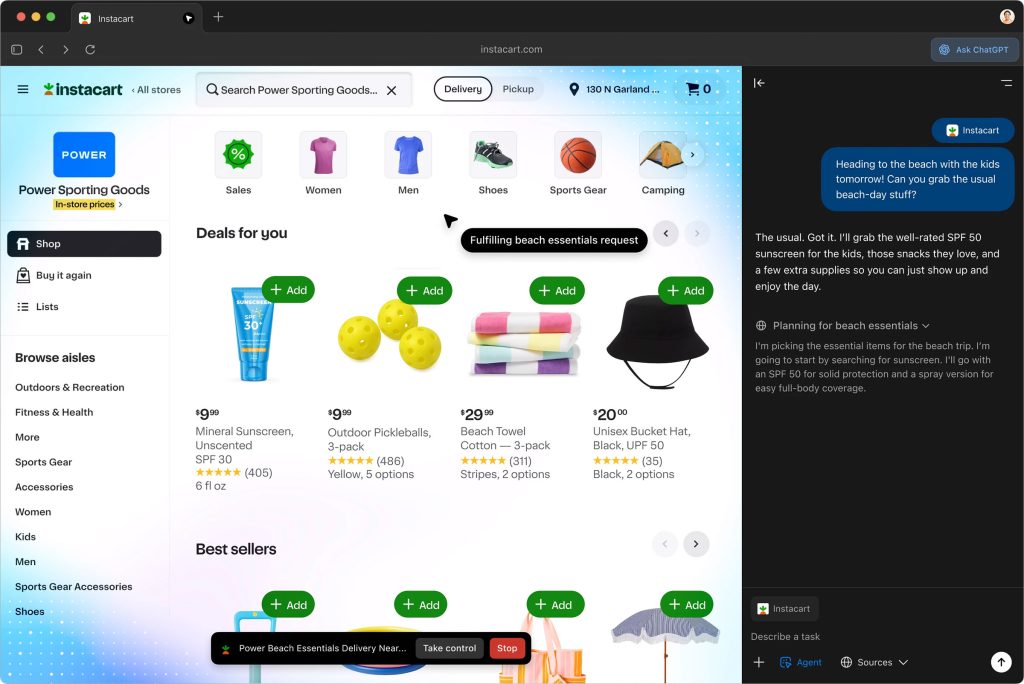

This image shows OpenAI’s Atlas, launched this week as the company’s entry into the AI browser market. Built on Chromium, Atlas integrates ChatGPT directly into the browsing experience, offering features like AI-powered search, sidebar assistance, and agent mode for Plus and Pro subscribers. Available initially on macOS, it includes controversial “browser memories” that record browsing history to personalise AI responses, though users can opt out. Atlas represents OpenAI’s strategy to control the complete user journey from query to answer, competing directly with Google Chrome, Perplexity Comet and, also announced this week, a new AI-automated version of Microsoft Edge.

Weekly news roundup

This week saw major AI companies expanding into new domains, significant infrastructure investments powering the AI boom, growing concerns about automation’s impact on employment, and research advances in making AI systems more reliable and efficient.

AI business news

- OpenAI’s Atlas browser takes direct aim at Google Chrome (Shows how AI companies are expanding beyond chatbots into browser technology, potentially disrupting traditional web experiences with AI-native interfaces.)

- Replit projects $1 billion revenue by end of 2026 as AI coding booms (Demonstrates the explosive growth potential in AI-assisted programming tools and how coding platforms are capitalising on developer demand.)

- Google revamps AI Studio with new features for vibe coding (Highlights the evolution of development environments to support more intuitive, AI-powered coding workflows.)

- Anthropic takes aim at biotech with Claude for life sciences (Reveals how specialised AI models are targeting high-value scientific domains with tailored capabilities.)

- Investors use dotcom era playbook to dodge AI bubble risks (Provides crucial perspective on investment strategies and market dynamics as concerns about AI valuations grow.)

AI governance news

- Amazon reportedly hopes to replace 600,000 US workers with robots (Illustrates the scale of potential job displacement from automation and the urgent need for workforce transition strategies.)

- AI chatbots flub news nearly half the time, BBC study finds (Underscores the reliability challenges in using AI for information dissemination and the importance of verification systems.)

- OpenAI to offer UK data residency driven by government partnership (Shows how AI companies are adapting to national data sovereignty requirements and regulatory frameworks.)

- Meta cuts 600 AI jobs amid ongoing reorganisation (Reveals strategic shifts in how major tech companies are structuring their AI teams and focusing resources.)

- Anthropic CEO claps back after Trump officials accuse firm of AI fear-mongering (Highlights the political tensions around AI safety discourse and differing philosophies on regulation.)

AI research news

- World-in-world: world models in a closed-loop world (Advances understanding of how AI can model complex environments recursively, crucial for autonomous systems.)

- Where LLM agents fail and how they can learn from failures (Provides actionable insights into improving AI agent reliability through failure analysis and adaptive learning.)

- Continual learning via sparse memory finetuning (Offers techniques for making AI systems more efficient at learning new tasks without forgetting previous knowledge.)

- A theoretical study on bridging internal probability and self-consistency for LLM reasoning (Deepens understanding of how to make AI reasoning more reliable and mathematically grounded.)

- Salesforce enterprise deep research (Demonstrates how enterprise software companies are investing in fundamental AI research for business applications.)

AI hardware news

- Google and Anthropic confirm massive 1GW+ cloud deal with up to 1 million Google TPUs (Shows the unprecedented scale of computing infrastructure being deployed for AI model training and inference.)

- Crusoe to deploy in Starcloud satellite data centre in late 2026, offer ‘limited GPU capacity’ in space from 2027 (Reveals innovative approaches to solving AI infrastructure challenges through space-based computing.)

- Argyll and SambaNova team up for UK sovereign AI cloud (Highlights the trend towards national AI infrastructure for data sovereignty and strategic autonomy.)

- DOE powers up plan to get AI datacentres on the grid quicker (Addresses critical infrastructure bottlenecks that could slow AI deployment and scaling.)

- AI investment the only thing keeping the US out of recession (Demonstrates the macroeconomic significance of AI spending and its role in driving economic growth.)