Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

JOEL

This week we look at:

- How harness engineering and software dark factories are reshaping who writes code

- Mixed reactions to this week’s new models

- Anthropic’s data on agentic AI activity

Lights out for software engineering

In 1797, a young Londoner called Henry Maudslay walked out of Joseph Bramah’s lock workshop on Piccadilly after a dispute over pay, and set up his own operation on Wells Street off Oxford Road. Within a few years he had built a screw-cutting lathe so precise it could machine metal to within thousandths of an inch. The lathe didn’t just make screws. It made screws that were identical, every single time. Skill moved from the craftsman’s hands into the machine’s, and this breakthrough in mass production was one of many that created the conditions for the Industrial Revolution.

Two centuries later, something similar is happening to software engineering. OpenAI’s “Harness Engineering” article from early February described how a team built a product now exceeding a million lines of code over five months with a strict rule: no human-crafted code. When agents struggled, the fix was never to try harder. It was to ask what capability was missing, and how to make it legible and enforceable for the agent. The human role shifted entirely to designing environments, feedback loops and control systems.
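One way to picture making a missing capability "legible and enforceable" is a custom check whose failure message doubles as instructions the agent can act on. The sketch below is hypothetical throughout: the layering rule (`ui/` must not import `db/`), the `check_layering` function and the message wording are illustrative assumptions, not details taken from OpenAI's article.

```python
import re
from dataclasses import dataclass

# Hypothetical architectural rule: modules under ui/ must not import the db package.
FORBIDDEN_IMPORT = re.compile(r"^\s*(?:from|import)\s+db\b", re.MULTILINE)

@dataclass
class Violation:
    path: str
    message: str  # written for the agent: what broke and how to fix it

def check_layering(path: str, source: str) -> list[Violation]:
    """Return agent-readable violations rather than a bare pass/fail."""
    if not path.startswith("ui/"):
        return []  # the rule only applies to the ui/ layer
    if FORBIDDEN_IMPORT.search(source):
        return [Violation(path, "ui/ modules must not import db/ directly. "
                                "Call the service layer in services/ instead, then re-run checks.")]
    return []

violations = check_layering("ui/profile.py", "from db import users\n")
for v in violations:
    print(f"{v.path}: {v.message}")
```

The design choice is that the check never just says "failed": the remediation hint gets fed straight back into the agent's context on the next attempt.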

Meanwhile at StrongDM, a team of three engineers is running what they openly call a “dark factory”: no human writes code, and no human reviews it either. Their rule of thumb is simple: “If you haven’t spent at least $1,000 on AI tokens today per human engineer, your software factory has room for improvement”. To make this work without everything collapsing, they’ve built what they call a “Digital Twin Universe”, behavioural clones of third-party services like Okta, Jira and Slack that allow agents to test at scale against realistic simulations. Test scenarios are kept as holdout sets the coding agents never see, mimicking external QA in a way that borrows more from machine learning evaluation than traditional software testing.
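The holdout idea is easiest to see in miniature. This is a minimal sketch under stated assumptions: `FakeTicketService` is a hypothetical in-memory stand-in for a Jira-like twin, `open_ticket` plays the part of agent-written code, and the split and scoring helpers are illustrative rather than StrongDM's actual tooling.

```python
import random
from typing import Callable

class FakeTicketService:
    """Hypothetical 'digital twin': an in-memory fake of a ticketing API."""
    def __init__(self) -> None:
        self.tickets: dict[int, str] = {}
        self._next_id = 1

    def create(self, title: str) -> int:
        ticket_id = self._next_id
        self.tickets[ticket_id] = title
        self._next_id += 1
        return ticket_id

# A scenario exercises the code under test against a fresh twin and returns pass/fail.
Scenario = Callable[[FakeTicketService], bool]

def split_scenarios(scenarios: list[Scenario], holdout_fraction: float,
                    seed: int = 0) -> tuple[list[Scenario], list[Scenario]]:
    """Shuffle, then split: agents only ever see `visible`; `holdout` stays hidden."""
    shuffled = scenarios[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - holdout_fraction))
    return shuffled[:cut], shuffled[cut:]

def pass_rate(scenarios: list[Scenario]) -> float:
    results = [scenario(FakeTicketService()) for scenario in scenarios]
    return sum(results) / len(results) if results else 0.0

def open_ticket(service: FakeTicketService, title: str) -> int:
    # Stand-in for agent-written code under test.
    return service.create(title.strip())

scenarios: list[Scenario] = [
    lambda s: open_ticket(s, "Bug") == 1,
    lambda s: s.tickets.get(open_ticket(s, "  Padded  ")) == "Padded",
    lambda s: open_ticket(s, "A") != open_ticket(s, "B"),
    lambda s: open_ticket(s, "x") > 0,
]

visible, holdout = split_scenarios(scenarios, holdout_fraction=0.25)
print(f"visible pass rate: {pass_rate(visible):.0%}, holdout pass rate: {pass_rate(holdout):.0%}")
```

As in ML evaluation, the holdout score is the one that counts: an agent that has overfitted to the visible scenarios shows up as a gap between the two numbers.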

The idea is spreading fast. This week Howie Liu, CEO of Airtable, announced a new product built on the same principle, writing that he’s been personally burning through billions of tokens a week as a builder and that “what matters now is the system that lets agents learn, compound, and scale”.

At Stripe, homegrown coding agents called “Minions” now generate over 1,300 merged pull requests a week containing no human-written code, iterating against more than three million tests.

The “dark factory” label itself comes from manufacturing. China has become the global leader in these facilities, fully automated production floors that operate without human workers or even lighting, and the Chinese government has backed the push with billions in robotics R&D. The metaphor’s migration to software was probably inevitable. But it’s not a perfect fit. Software development has never really been a factory process. It’s exploratory, creative, iterative. You’re navigating a broad problem space, hunting for product-market fit, often building something that hasn’t existed before. A car assembly line this is not. And yet the “darkness” part of the metaphor, the predominantly non-human, even post-human, quality of the work, does apply.

What’s interesting is that the new wave of agent-driven development retains the exploratory nature. Techniques like the “Ralph Wiggum” loop we’ve covered in recent weeks take a product requirements document, break it into small user stories with acceptance criteria, then loop through build-test-iterate cycles autonomously, shipping work while the human sleeps. That’s not just an assembly line. It’s an autonomous explorer, operating in the dark, but still searching.
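The build-test-iterate cycle described above can be sketched in a few lines. This is a toy illustration, not the actual Ralph Wiggum tooling: `UserStory`, `ralph_loop` and `toy_builder` are hypothetical names, and the "agent" here is a stand-in that only gets each story right on its second attempt, so the retry behaviour is visible.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class UserStory:
    title: str
    acceptance: Callable[[dict], bool]  # acceptance criterion written as an executable check
    done: bool = False

def ralph_loop(stories: list[UserStory],
               build: Callable[[UserStory, dict], None],
               max_iterations: int = 10) -> tuple[dict, int]:
    """Build-test-iterate until every story passes or the iteration budget runs out."""
    codebase: dict = {}
    for iteration in range(max_iterations):
        pending = [s for s in stories if not s.done]
        if not pending:
            return codebase, iteration  # all acceptance criteria met
        story = pending[0]
        build(story, codebase)                   # the "agent" edits the codebase
        story.done = story.acceptance(codebase)  # test; a failure means retry next pass
    return codebase, max_iterations

# Toy stand-in for a coding agent: succeeds on each story only at the second attempt.
attempts: dict[str, int] = {}

def toy_builder(story: UserStory, codebase: dict) -> None:
    attempts[story.title] = attempts.get(story.title, 0) + 1
    if attempts[story.title] >= 2:
        codebase[story.title] = "implemented"

stories = [
    UserStory("login", lambda cb: cb.get("login") == "implemented"),
    UserStory("logout", lambda cb: cb.get("logout") == "implemented"),
]
codebase, iterations = ralph_loop(stories, toy_builder)
print(f"Finished after {iterations} iterations: {sorted(codebase)}")
```

The iteration budget matters in practice: a loop that runs unattended overnight needs a hard stop so that a story the agent cannot crack doesn't burn tokens indefinitely.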

There’s a clear software engineering spectrum emerging. At one end sits vibe coding, Andrej Karpathy’s philosophy from early 2025: “fully give in to the vibes, embrace exponentials, and forget that the code even exists”. It was the discovery moment, the rush of realising AI could write code at all. But the data on vibe coding’s output is sobering: research shows 4x more code duplication, nearly 3x more security vulnerabilities and a 3x spike in readability issues compared with human-written code. Karpathy himself has recognised the limits, recently proposing “Agentic Engineering” as a successor that preserves human supervision.

In the middle of the spectrum sits agentic coding, the approach of Boris Cherny, creator of Claude Code. Agents work autonomously but within human-designed workflows. “I do still look at the code,” he says. On Lenny’s Podcast this week, he shared that some Anthropic engineers spend $100,000 a month each on AI code generation, comfortably exceeding StrongDM’s $1,000 per engineer per day.
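Because the two spending figures use different time units, a quick normalisation shows how far apart they are. A minimal sketch, assuming a 30-day month (counting only working days would widen the gap); the dollar amounts are the ones quoted above.

```python
DAYS_PER_MONTH = 30  # assumption: simple calendar-month approximation

strongdm_daily_floor = 1_000   # $ per engineer per day (StrongDM's rule of thumb)
anthropic_monthly = 100_000    # $ per engineer per month (figure quoted by Cherny)

strongdm_monthly_floor = strongdm_daily_floor * DAYS_PER_MONTH
anthropic_daily = anthropic_monthly / DAYS_PER_MONTH
ratio = anthropic_monthly / strongdm_monthly_floor

print(f"StrongDM floor: ${strongdm_monthly_floor:,} per month")
print(f"Heaviest Anthropic users: ~${anthropic_daily:,.0f} per day")
print(f"Ratio: {ratio:.1f}x")
```

On these assumptions the heaviest Anthropic users spend roughly $3,300 a day, a little over three times StrongDM's floor.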

But at the far and rapidly emerging end of this spectrum is the dark factory: full lights-out operation, where trust comes not from human review but from the harness itself, meaning the context, the rules, the quality checks, the tests, the digital twin universes and the rigorous evaluation sets.

As we always say, if you want to see where all knowledge work is heading, watch software engineering. The pattern we’re watching now (vibe coding as discovery, harness engineering as industrialisation, the dark factory as destination) will repeat in legal work, in financial analysis, in consulting and in content production. The ratio of humans to agents, and the sophistication of the harness those humans design, will become the defining measure of a knowledge organisation’s capability.

Takeaways: Maudslay’s genius wasn’t building a better screw. It was building a machine that helped humans design and make better screws at scale. That’s the real lesson of harness engineering: the intelligence revolution in software isn’t about removing engineering. It’s the moment engineering became everything. The code is just the output. The engineering is only just getting started.

The new rhythm of AI progress

Three new models dropped this week: Google’s Gemini 3.1 Pro, Anthropic’s Sonnet 4.6 and xAI’s Grok 4.20. Google claimed a 77% score on ARC-AGI-2 for its standard Pro model, more than doubling its November result; that figure is separate from the Deep Think variant, which scored 84.6% last week. Grok 4.20 promised “rapid learning” and a new multi-agent setup by default. Sonnet 4.6 offered a million-token context window at bargain pricing. And yet the collective reaction from the people actually using these models has been muted.

Google’s benchmark obsession is becoming a pattern. In November, Gemini 3 Pro broke the 1500 Elo barrier on LMArena and topped Humanity’s Last Exam. Users then reported a model that didn’t live up to expectations. This week’s sequel does not resolve that issue. Independent testing found Gemini 3.1 Pro spends up to 114 seconds planning before writing a single line of code, sometimes getting stuck in loops. Its agentic benchmark ranking dropped from 7th to 19th. Grok 4.20 is a version number that tells you everything about xAI’s priorities. Users report it misreads context and fixates on irrelevant details. Sonnet 4.6 is the most honest of the three: a capable mid-tier model filling the gap between Haiku and Opus, not pretending to be anything more.

Contrast this with a fortnight ago. OpenAI shipped Codex 5.3, a model that was instrumental in debugging its own training run. Anthropic released Opus 4.6 with adaptive reasoning and its highest-ever agentic coding scores. Those felt like genuine steps forward. This week’s batch feels like catch-up. It resembles the classic Intel tick-tock cadence. The “tock” delivers an architectural leap. The “tick” optimises the existing design to fill gaps. Reinforcement learning and increasingly sophisticated training infrastructure mean both ticks and tocks now arrive every few weeks. That pace would have been unthinkable in 2024.

Since November, agentic coding, tool use and terminal-based engineering have become the white-hot centre of AI development, as we explore in this week’s piece on software dark factories. Google appears to have tuned Gemini 3.1 Pro for its own surfaces: Search, Gmail, Workspace. That may be a sensible product decision. But it leaves a strategic gap. Gemini 3 showed enormous promise. Gemini 3.1 Pro does not restore Google to a position of relevance in the world of software engineering, and that is the race that matters most right now.

Takeaways: We are entering an era of rapid, regular model releases, some that push the frontier and many that fill gaps beneath it. The trick for anyone building with AI is learning to tell the difference, and not mistaking a tick for a tock.

EXO

New data on agent usage

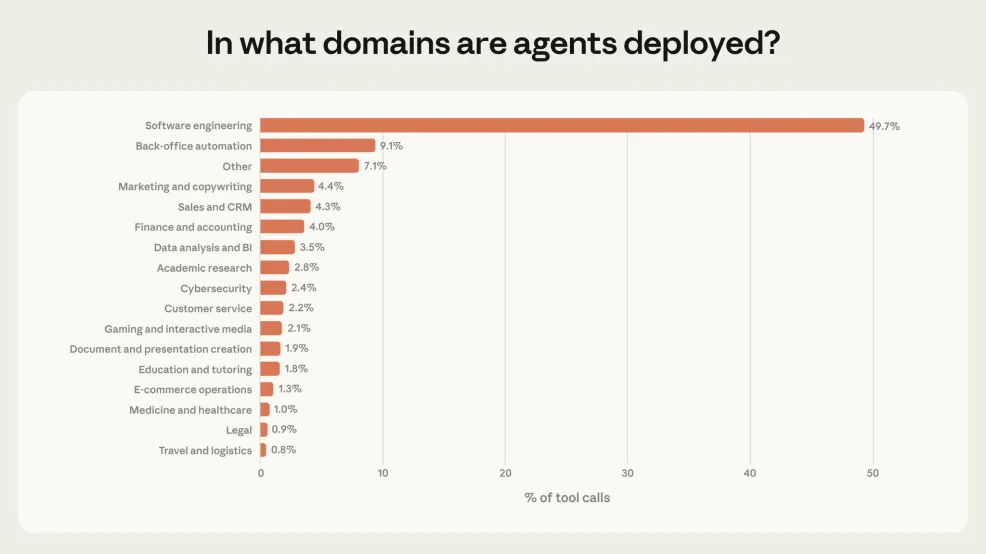

This chart comes from Anthropic’s new research paper, “Measuring AI agent autonomy in practice”, published this week. The team analysed millions of human-agent interactions across both Claude Code and Anthropic’s public API, studying individual tool calls as the building blocks of agent behaviour. The result? Software engineering accounts for 49.7% of all agentic activity. The next biggest category, back-office automation, manages just 9.1%. Everything else is in the low single digits. Customer service, a domain that has been talked about as a natural fit for AI agents for years, sits at just 2.2%, behind data analysis, cybersecurity, and even academic research.

The research also revealed that the longest autonomous Claude Code sessions nearly doubled in three months, from under 25 minutes to over 45 minutes. Experienced users auto-approve more actions but also interrupt more often, shifting from step-by-step approval to active monitoring. And Claude itself pauses to ask for clarification more than twice as often as humans interrupt it on complex tasks.

Weekly news roundup

This week’s AI landscape is dominated by the accelerating push toward autonomous agents — from OpenAI’s strategic hiring and device ambitions to new research on multi-agent cooperation — alongside growing tensions between AI companies and governments over military use, security, and workforce transformation.

AI business news

- OpenAI hires OpenClaw founder Peter Steinberger in push toward autonomous agents (Signals OpenAI’s deepening commitment to agentic AI, a key area for anyone building or deploying AI-powered workflows.)

- Altman-Amodei hand-holding snub goes viral at India AI event (A telling moment that underscores the intensifying rivalry between OpenAI and Anthropic as both compete for global influence and partnerships.)

- How AI is affecting productivity and jobs in Europe (Offers data-driven insight into AI’s real-world economic impact, essential reading for understanding workforce transformation trends.)

- Google launches Lyria 3 music generation model (Marks a significant step in generative AI for creative industries, raising fresh questions about copyright and the future of content creation.)

- OpenAI developing AI devices including smart speaker, The Information reports (Indicates OpenAI’s ambition to move beyond software into hardware, potentially reshaping how consumers interact with AI agents daily.)

AI governance news

- Pentagon officials threaten to blacklist Anthropic over its military chatbot policies (Highlights the escalating tension between AI safety principles and national security demands, a pivotal governance flashpoint.)

- Microsoft has a new plan to prove what’s real and what’s AI online (Addresses the critical challenge of AI-generated content authenticity, relevant to anyone concerned about misinformation and digital trust.)

- Accenture ‘links staff promotions to use of AI tools’ (A striking example of how organisations are beginning to formally incentivise AI adoption, with major implications for workplace culture and skills development.)

- European Parliament blocks AI on lawmakers’ devices, citing security risks (Demonstrates the growing institutional caution around AI data security, particularly in sensitive governmental contexts.)

- Announcing the NIST “AI agent standards initiative” for interoperable and secure innovation (A landmark move toward standardising AI agent interoperability and security, which will shape how multi-agent systems are built and deployed.)

AI research news

- Small reward models via backward inference (Proposes a more efficient approach to reward modelling, potentially making RLHF-style alignment more accessible for smaller teams and models.)

- Multi-agent cooperation through in-context co-player inference (Advances our understanding of how AI agents can collaborate dynamically, a foundational capability for real-world multi-agent deployments.)

- MAS-on-the-fly: dynamic adaptation of LLM-based multi-agent systems at test time (Introduces runtime adaptation for multi-agent systems, enabling more flexible and resilient agentic architectures.)

- Coding agent with lossless context management (Tackles one of the key practical bottlenecks in coding agents — context window limitations — with a lossless management approach.)

- Does socialisation emerge in AI agent society? A case study of Moltbook (Explores whether social behaviours emerge among interacting AI agents, raising fascinating questions about emergent properties in agent ecosystems.)

AI hardware news

- Nvidia CEO Jensen Huang claims company will unveil “a chip that will surprise people” at GTC next month (Builds anticipation for Nvidia’s next-generation silicon, which could reset performance expectations for AI training and inference.)

- Microsoft matches 100% of 2025 power use with renewables, with more than 40GW of contracted capacity (A major milestone in addressing AI’s enormous energy footprint, setting a benchmark for sustainable infrastructure at scale.)

- French AI cloud startup Policloud plans 1,000 sovereign micro-data centre deployments by 2030 (Reflects the growing European push for data sovereignty and distributed AI infrastructure as an alternative to hyperscaler dominance.)

- AMD underwrites $300m Crusoe loan so data centre firm can buy its AI chips (Shows AMD’s aggressive financing strategy to win AI data centre market share against Nvidia’s dominance.)

- Ericsson outlines AI vision ahead of 6G era (Previews how AI and next-generation connectivity will converge, with implications for edge computing and real-time AI applications.)