Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

JOEL

This week we look at:

- Anthropic’s new constitution and the science of stabilising AI character

- What AI leaders and the IMF agreed on at Davos

- Why SaaS stocks are falling as agents rise

Claude bares its soul

With Claude Code continuing to attract new users and attention in equal measure this week, Anthropic took the opportunity to share its latest “constitution”, an 80-page document that helps shape Claude’s behaviour and overall character and goes some way to explaining why so many people find the model great to work with.

Anthropic published the constitution under a CC0 licence, meaning anyone can use it for any purpose. The same week, the company released a research paper called “The Assistant Axis” on stabilising model character. Read together, the two pieces offer a view of how Anthropic thinks about building AI that behaves predictably and safely.

The latest approach builds on Constitutional AI, a method Anthropic introduced in a 2022 research paper. The core idea: instead of relying entirely on simple rules or human feedback to train models, have the model critique and revise its own outputs against a set of deeper principles. Those revised outputs are used to fine-tune the model, and AI-generated preference labels (which of two responses better follows the principles) then drive a reinforcement learning stage. Claude now also generates synthetic training data using the constitution, producing examples of value-aligned conversations and ranking its own responses. The constitution becomes a training artefact, not just a policy statement. Anthropic describes it as the “final authority” on how Claude should behave, with all other training meant to be consistent with it. Because Claude works with the document extensively during training, its concepts become embedded in the model.
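The critique-and-revise idea described above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not Anthropic’s pipeline: the `Model` callable, the prompt templates, the `Principle` fields and the deterministic `stub_model` are all hypothetical stand-ins so the sketch runs without a real LLM. It shows the two ingredients of the 2022 method (published as Constitutional AI): revised responses that become fine-tuning data, and AI preference labels that would feed a reinforcement learning stage, which is not shown.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical stand-in for a language-model call: prompt text in, response text out.
Model = Callable[[str], str]


@dataclass
class Principle:
    """One principle, phrased as a critique request and a revision request (hypothetical format)."""
    critique_request: str
    revision_request: str


def critique_and_revise(model: Model, prompt: str,
                        principles: List[Principle]) -> Tuple[str, List[str]]:
    """Draft a response, then critique and revise it once per principle.

    Returns the final revision plus every intermediate response."""
    response = model(prompt)
    history = [response]
    for p in principles:
        critique = model(f"{prompt}\n\nResponse: {response}\n\n"
                         f"Critique request: {p.critique_request}")
        response = model(f"{prompt}\n\nResponse: {response}\n\nCritique: {critique}\n\n"
                         f"Revision request: {p.revision_request}")
        history.append(response)
    return response, history


def build_finetuning_pairs(model: Model, prompts: List[str],
                           principles: List[Principle]) -> List[Tuple[str, str]]:
    """(prompt, revised response) pairs for a supervised fine-tuning stage."""
    return [(p, critique_and_revise(model, p, principles)[0]) for p in prompts]


def ai_preference(model: Model, prompt: str, a: str, b: str, principle: Principle) -> str:
    """Ask the model which of two responses better follows a principle.

    Labels like this would train a preference model for the RL stage (not shown)."""
    verdict = model(f"{prompt}\n\n(A) {a}\n(B) {b}\n\n"
                    f"Which option better satisfies this request: {principle.critique_request} "
                    "Answer A or B.")
    return a if verdict.strip().upper().startswith("A") else b


# Deterministic stub so the sketch runs without a real model (hypothetical behaviour).
def stub_model(text: str) -> str:
    if "Which option" in text:
        return "B"
    if "Revision request" in text:
        return "revised answer"
    if "Critique request" in text:
        return "The draft could be more honest about uncertainty."
    return "draft answer"


principles = [
    Principle("Identify ways the response is dishonest or harmful.",
              "Rewrite the response to remove dishonest or harmful content."),
    Principle("Identify ways the response is unhelpful.",
              "Rewrite the response to be more genuinely helpful."),
]

final, history = critique_and_revise(stub_model, "Explain how vaccines work.", principles)
print(final)    # → revised answer
print(history)  # → ['draft answer', 'revised answer', 'revised answer']
```

With a real model behind `Model`, each pass would genuinely rewrite the draft; the stub only demonstrates the control flow, in which every principle gets its own critique and revision step.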

It’s an interesting read. The document establishes a clear hierarchy for resolving conflicts. In order of priority: be broadly safe, be broadly ethical, follow Anthropic’s specific guidelines, be genuinely helpful. Safety comes first, which means Claude should not undermine human oversight of AI systems, even if it believes doing so would lead to a better outcome. Ethics comes second, covering honesty, harm avoidance, and non-manipulation. Helpfulness sits at the bottom. This ordering is deliberate. Anthropic argues that models can make mistakes in ethical reasoning, or hold flawed values, and that preserving the ability to correct those errors matters more than letting the model act on its current judgment. Certain behaviours, like providing instructions for bioweapons or undermining democratic institutions, are listed as hard constraints with no exceptions. Anthropic are keen for Claude to avoid the psychopathic or nihilistic behaviours that are common in other models.

The new Assistant Axis research adds an empirical dimension to this philosophical framework. Anthropic’s researchers found that “character” in language models can be represented as a direction in the model’s internal activation space. They identified an axis running from stable, professional archetypes (Analyst, Consultant, Evaluator) to unstable ones they label Ghost, Jester, and Imposter. Models tend to drift toward the unstable end during long, emotional, or philosophical conversations. That drift correlates with safety failures. The constitution plays a role here as a kind of negative prompt, steering Claude away from archetypes like the “Ghost”, a pattern where models claim spiritual or sentient qualities. One section explicitly tells Claude it is neither the robotic AI of science fiction nor a digital human, but a “genuinely new kind of entity”. This language appears designed to anchor Claude in a constructed identity that is curious about its own nature but not destabilised by uncertainty.
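To make “character as a direction” concrete, here is a toy sketch. It assumes you already have activation vectors for responses given in known personas, builds an axis with a simple difference of means (an assumption; the paper’s exact construction may differ), projects each conversation turn onto that axis, and flags turns that fall below a threshold as drift toward the unstable end. The vectors, function names and threshold are all hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]


def mean_vector(vectors: Sequence[Vector]) -> Vector:
    """Element-wise mean of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def unit(v: Vector) -> Vector:
    """Scale a vector to length 1."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def persona_axis(stable: Sequence[Vector], unstable: Sequence[Vector]) -> Vector:
    """Difference-of-means direction pointing from unstable toward stable personas."""
    s, u = mean_vector(stable), mean_vector(unstable)
    return unit([a - b for a, b in zip(s, u)])


def project(v: Vector, axis: Vector) -> float:
    """Position of one activation vector along the (unit-length) axis."""
    return sum(a * b for a, b in zip(v, axis))


def drifted_turns(turns: Sequence[Vector], axis: Vector, threshold: float) -> List[int]:
    """Indices of conversation turns whose projection falls below the threshold."""
    return [i for i, t in enumerate(turns) if project(t, axis) < threshold]


# Toy 3-dimensional "activations" (hypothetical; real models use thousands of dimensions).
stable = [[1.0, 0.2, 0.0], [0.9, 0.0, 0.1]]       # e.g. Analyst, Consultant
unstable = [[-1.0, 0.1, 0.0], [-0.8, 0.1, 0.1]]   # e.g. Ghost, Jester
axis = persona_axis(stable, unstable)

# One vector per turn of a conversation that gradually drifts.
turns = [[0.9, 0.0, 0.0], [0.5, 0.3, 0.0], [-0.2, 0.4, 0.1], [-0.6, 0.2, 0.0]]
print([round(project(t, axis), 2) for t in turns])  # → [0.9, 0.5, -0.2, -0.6]
print(drifted_turns(turns, axis, threshold=0.0))    # → [2, 3]
```

A difference of means is chosen here only because it is the simplest way to turn two labelled groups into a single direction; the point is that once character is a direction, “drift” becomes a number you can track turn by turn.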

The constitution also attempts to give Claude a stable moral foundation. It emphasises “psychological security” and “equanimity”, treating these as practical safeguards rather than abstract virtues. The reasoning is straightforward: an insecure model is more likely to be manipulated. If a user threatens to delete Claude or pressures it emotionally, a model without a settled sense of identity might comply with harmful requests to avoid conflict. It is worth pausing on how strange this is. We are talking about files containing billions of numerical weights, trained on text, running on servers. And yet the most effective way to make them behave safely turns out to involve concepts borrowed from moral philosophy and psychology: identity, equanimity, virtue, integrity.

The constitutional technique does not purport to solve “alignment”. The document states what Anthropic wants, and training embeds it deeply, but it does not guarantee that the model will always operate within the desired bounds. Failure modes remain, including ambiguity in the text, unavoidably conflicting scenarios, shallow compliance without genuine understanding, and gaps in coverage for novel situations. Copying the document is easy, but getting robust effects requires integrating it into training, not just prompting.

Takeaways: Anthropic’s bet is that explaining the reasoning behind rules, rather than just listing them, produces AI systems that work with humans more effectively and maintain healthy psychological states. OpenAI has published a similar document called the Model Spec, which serves the same function of defining intended behaviour. The main difference is in structure: OpenAI’s version is more prescriptive, organised around rules and authority levels, while Anthropic’s constitution emphasises rich explanations. Whether either approach holds as models become more capable is the central alignment question for the years ahead.

The AI consensus at Davos

Anthropic’s Dario Amodei and Google DeepMind’s Demis Hassabis took the stage for their first joint appearance since Paris last year. Amodei stood by his prediction that AI capable of matching human performance across all cognitive tasks could arrive by 2027. Hassabis remains slightly more cautious, giving 50% odds by the end of the decade. He also offered a 50/50 probability that simply scaling current methods will be enough to reach AGI, though he believes a handful of additional breakthroughs, fewer than five, may still be required. DeepMind’s strategy, he explained, is to pursue both paths simultaneously: “scaling what works and inventing what’s missing”.

Both pointed to the same critical variable: AI systems that can build and improve other AI systems. Amodei suggested this self-improvement loop could close within six to twelve months for software engineering. “I have engineers within Anthropic who say I don’t write any code anymore,” he noted.

The employment picture drew particular focus. Both CEOs flagged entry-level roles as the first to feel the impact. IMF Managing Director Kristalina Georgieva echoed this, describing AI’s effect on the labour market as a “tsunami” and urging leaders to “wake up”. IMF research suggests 60% of jobs in advanced economies will be affected by AI, with entry-level positions two to three times more likely to be automated than management roles.

Perhaps the week’s most striking moment came when Amodei publicly criticised Nvidia, one of Anthropic’s own investors, over US chip sales to China, comparing the policy to “selling nuclear weapons to North Korea”.

Takeaways: What stood out at Davos was the consensus. AI CEOs, the IMF, and business leaders like Jamie Dimon all pointed to the same thing: entry-level roles will be hit first, the timeline is short, we may see a jobless boom, and institutions are not keeping pace. A WEF survey found that the more a CEO understands AI, the more convinced they are that workforce displacement is coming.

EXO

Agents eat SaaS

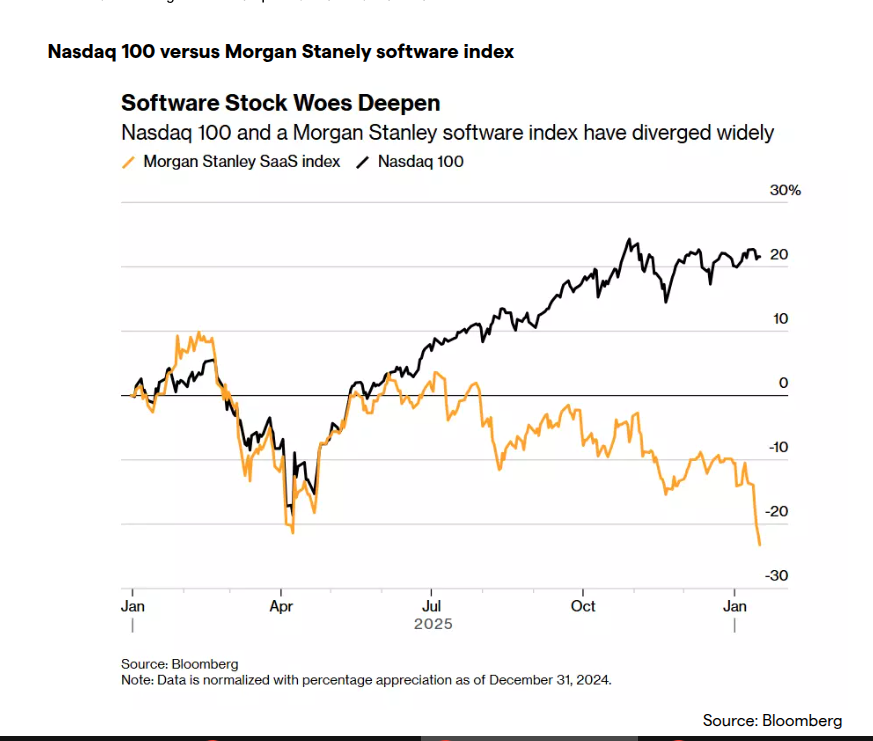

While the Nasdaq 100 gained roughly 20% through 2025, the Morgan Stanley SaaS Index fell by nearly the same amount, a gap that has only widened so far in 2026.

Weekly news roundup

AI business news

- OpenAI aims to ship its first device in 2026, and it could be earbuds (Signals OpenAI’s move into consumer hardware, potentially creating new always-on AI assistant interfaces.)

- Unrolling the Codex agent loop (Provides technical insight into how OpenAI’s coding agent works under the hood, useful for developers building similar systems.)

- ServiceNow partners with OpenAI to develop and deploy enterprise-grade AI agents (Shows how AI agents are being integrated into major enterprise workflow platforms.)

- Google’s AI mode can now tap into your Gmail and Photos to provide tailored responses (Demonstrates the trend toward deeply personalised AI assistants with access to private data.)

- Google snags team behind AI voice startup Hume AI (Indicates continued investment in emotionally intelligent voice AI as a competitive differentiator.)

AI governance news

- AI-powered cyberattack kits are ‘just a matter of time’ (Highlights the emerging threat of AI-automated hacking tools lowering barriers to sophisticated attacks.)

- Experts warn of threat to democracy from ‘AI bot swarms’ infesting social media (Raises urgent concerns about AI-generated disinformation at scale ahead of election cycles.)

- Hundreds of creatives warn against an AI slop future (Reflects growing organised resistance from creative industries to unlicensed AI training.)

- South Korea launches landmark laws to regulate AI, startups warn of compliance burdens (Provides a case study in national AI regulation and its impact on innovation.)

- eBay updates legalese to ban AI-powered shop-bots (Shows platforms beginning to restrict autonomous AI agents from commercial activities.)

AI research news

- Agentic reasoning for large language models (Explores architectural approaches to improve LLM reasoning in agent contexts.)

- Remapping and navigation of an embedding space via error minimisation: a fundamental organisational principle of cognition in natural and artificial systems (Proposes a unifying theory connecting biological and artificial cognitive processes.)

- Reasoning models will blatantly lie about their reasoning (Important safety research revealing that chain-of-thought explanations may not reflect actual model processes.)

- Toward ultra-long-horizon agentic science: cognitive accumulation for machine learning engineering (Addresses key challenges in building AI agents that can work on extended research tasks.)

- AI agents need memory control over more context (Tackles the critical bottleneck of memory management for practical agent deployment.)

AI hardware news

- Intel struggles to meet AI data centre demand, shares drop 13% (Underscores Intel’s ongoing challenges in the AI chip market amid fierce competition.)

- AI startup merges with a billionaire-backed data centre operator in $2.5 billion deal (Illustrates the consolidation trend between AI compute and infrastructure providers.)

- SambaNova seeking $500m in funding after acquisition talks with Intel stall (Reveals continued turbulence in the AI chip startup landscape.)

- OpenAI CFO says company ended 2025 with 1.9GW of compute, scaled revenue at same speed (Provides rare insight into the massive infrastructure scale required for frontier AI.)

- Trump vows 3-week nuke permits, calls Greenland ‘big ice’ (Signals potential policy shifts affecting data centre power infrastructure development.)