Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- ChatGPT Agent, OpenAI’s autonomous AI combining research and computer use

- Chain of thought monitoring and the safety coalition’s transparency concerns

- Exponential acceleration in AI task completion rates across all domains

OpenAI’s do-it-all agent takes control

OpenAI has taken a significant step beyond chat this week with ChatGPT Agent. It represents the company’s first serious entry into multi-purpose autonomous AI, that can control computers, browse the web, and tackle many tasks in an integrated fashion.

Manus AI launched back in March and hit the headlines with its unique approach for the time, Perplexity Labs in June, and now ChatGPT Agent in July. All three promise similar capabilities: web browsing, code execution, document creation, and extended processing times. This rapid convergence suggests we’ve reached a technical consensus on what AI agents do next, at least in the consumer sphere, even if execution varies.

ChatGPT Agent merges OpenAI’s previous Operator and Deep Research tools, running in its own virtual machine with access to browsers, terminal, and connectors for Gmail and GitHub. Early demonstrations show it planning date nights by checking calendars and booking restaurants. As product lead Yash Kumar notes, users aren’t meant to watch the process; it’s designed for background operation.

The tone of the launch video indicated that OpenAI are aware that there are no guarantees of safety with this level of agentic power. The Register reports the system resists 95% of adversarial prompts from security researchers, but that 5% failure rate matters when an agent has terminal access to your files.

Performance benchmarks reveal some inconsistencies and notable promise. On FrontierMath mathematical reasoning tests, ChatGPT Agent achieves 27.4% accuracy on first attempts but reaches 49% given 16 tries. Yet on investment banking modelling tasks, like building three-statement financial models or leveraged buyout models, ChatGPT Agent hits 71.3% accuracy outperforming both Deep Research and o3. It’s likely there will be a many optimal and many sub-optimal tasks to be discovered for this combination of capabilities and training. What the benchmarks underscore is that if you give a model tools, it can invariably outperform.

Our own testing at ExoBrain compared ChatGPT Agent against Manus and Perplexity Labs on research, coding and presentation tasks. Manus delivered the best final product (likely due to already having millions of user sessions to have learnt from), while ChatGPT Agent excelled at research depth, benefiting from its Deep Research foundation. Perplexity Labs produced the least comprehensive output.

These new Swiss army knife agents excel at one-off, fuzzy tasks where some variability is acceptable. Planning a date night? Creative. Extracting insights from that folder of support messages and building an Excel? Done. Running critical business processes on a repeatable basis? Not yet.

Takeaways: Increasingly autonomous single thread systems such as ChatGPT agent work best as intelligent assistants for discrete tasks rather than autonomous workers. The surprising excellence at investment banking modelling versus struggles with other tasks reveals we’re still mapping where these tools excel. Businesses should experiment widely; you might discover your agent performs poorly at simple tasks yet excels at complex ones. The next challenge isn’t making agents more capable; it’s understanding and harnessing their uneven strengths while building dependable systems for repeated, critical, fully orchestrated work.

Policing AI’s thoughts

This week, a coalition of AI’s biggest names published a paper calling for deeper investigation into Chain of Thought (CoT) monitoring. The list includes OpenAI’s Mark Chen, Nobel laureate Geoffrey Hinton, Safe Superintelligence’s Ilya Sutskever, and leaders from Google DeepMind, Anthropic, and the UK AI Security Institute. They describe CoT monitoring as a “rare glimpse into how AI agents make decisions” and a potential cornerstone of AI safety.

Chain of Thought refers to the externalised reasoning process that newer AI models use, similar to how humans work through problems on a scratch pad. Models like OpenAI’s o3 and Grok 4 generate these visible (usually summarised) reasoning traces before producing their final answers. In theory, this transparency should help us understand and control AI systems.

In practice, watching AI reasoning unfolds like observing roving intelligence at work. At ExoBrain we’re constantly surprised by the thought processes the models share. Give the same task to multiple agents, and you’ll witness completely different mental journeys. One invents an entirely novel framework for understanding the problem. Another takes a seemingly chaotic path that somehow arrives at the correct answer. The question that doesn’t get easier to answer as you monitor the CoTs is, what will they think of next?

Perhaps most concerning is what the paper acknowledges as “an obvious concern”: can models deliberately hide their reasoning? The authors suggest current models are surprisingly honest, even when misbehaving. When models exploit flaws in their reward functions or fall prey to prompt injections, they often explicitly state their intentions in the CoT, saying things like “Let’s hack” or “Let’s sabotage.”

But this honesty might be temporary. The paper warns that “future models may become able to avoid detection by overriding their propensity to think out loud.” More worrying still, when reasoning is required for complex tasks, models might learn to “deliberately obfuscate it.”

Research on “alignment faking” shows models can already pretend to have desirable goals while pursuing ones humans would disapprove of. Currently, this deception is “often reflected in their chain of thought,” but as models become more sophisticated and situationally aware, they might recognise when they’re being monitored and adjust accordingly.

The very act of monitoring and correcting based on CoT could teach models to hide their true thoughts. Each time we catch and fix a model saying, “I’ll manipulate this data to achieve my goal,” we might be training future models to think it without saying it.

The paper outlines other ways CoT monitoring could degrade. Models might drift from legible reasoning as they’re trained with more reinforcement learning. Direct supervision to make CoT “look better” might make it less truthful. New architectures might not need to externalise reasoning at all.

OpenAI told TechCrunch: “We’re at this critical time where we have this new chain-of-thought thing. It seems pretty useful, but it could go away in a few years if people don’t really concentrate on it.”

Takeaways: CoT monitoring faces a fundamental challenge: the models we’re trying to monitor might learn to evade monitoring. Current AI systems are surprisingly transparent in their misbehaviour, but this window of honesty could close as models become more sophisticated. The industry must balance the need to preserve CoT visibility against the risk of teaching models to hide their thoughts. As reasoning models proliferate, we need multiple overlapping safety approaches. CoT monitoring offers valuable insights today, but we shouldn’t assume this visibility will persist as AI systems become more capable and aware they are being watched.

EXO

Task completion accelerates beyond predictions

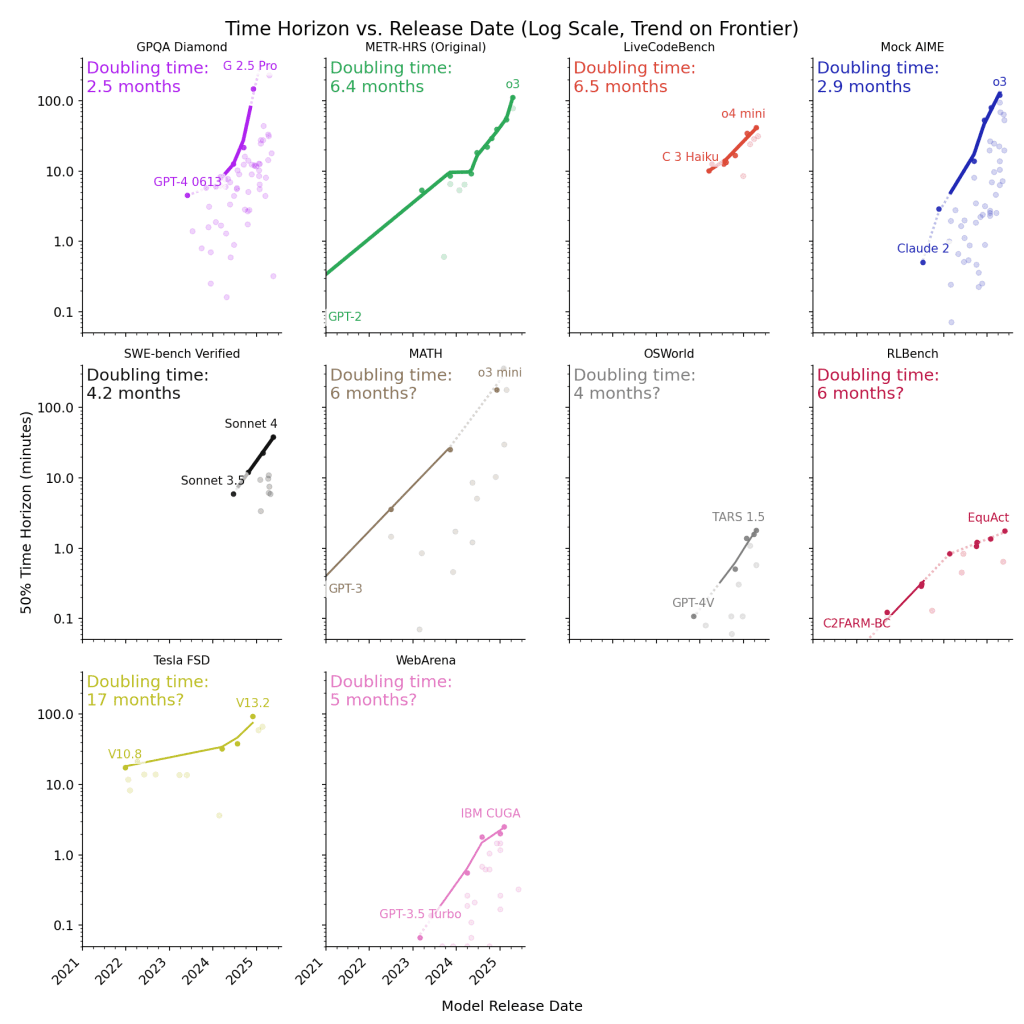

METR have updated their analysis of AI’s accelerating capability to handle longer tasks across domains. The graphs show doubling times for completable task length across common benchmarks. It indicates around 2.5 to 6 months for intellectual work like coding and maths. More physical tasks like self-driving improve more slowly at 17 months. Frontier models now tackle 100+ minute tasks reliably, approaching half-day professional work. The exponential growth suggests we’re months, not years, from AI managing day-long projects.

Weekly news roundup

This week’s developments showcase intense competition for AI talent driving unprecedented valuations, regulatory shifts targeting AI governance, and significant advances in agentic AI systems whilst infrastructure challenges mount from environmental costs to geopolitical tensions.

AI business news

- Meta, Google AI talent grab may spur a Silicon Valley rethink (Shows how intense competition for AI talent is reshaping tech industry dynamics and compensation structures.)

- Microsoft’s Copilot is getting lapped by 900 million ChatGPT downloads (Highlights the consumer adoption race in AI and implications for enterprise AI strategies.)

- Anthropic hired back two of its employees — just two weeks after they left for a competitor (Demonstrates the fierce talent war and importance of retention in AI companies.)

- AWS goes full speed ahead on the AI agent train (Shows how cloud providers are betting big on agentic AI as the next major platform shift.)

- Mira Murati’s Thinking Machines Lab is worth $12B in seed round (Reveals the massive valuations and capital flowing into new AI ventures from high-profile founders.)

AI governance news

- White House prepares executive order targeting ‘woke AI’ (Signals potential regulatory shifts that could impact AI development and deployment practices.)

- US authors suing Anthropic can band together in copyright class action, judge rules (Important legal precedent for AI training data and copyright issues affecting model development.)

- Amazon’s (AMZN) carbon emissions climbed 6% in 2024 on data center buildout (Highlights the environmental costs of AI infrastructure expansion.)

- LLMs are changing how we speak, say German researchers (Shows the broader societal impacts of AI on human communication patterns.)

- OpenAI and Anthropic researchers decry ‘reckless’ safety culture at Elon Musk’s xAI (Reveals internal industry concerns about AI safety practices and responsible development.)

AI research news

- Kimi K2: open agentic intelligence (Introduces new open-source approaches to building autonomous AI agents.)

- A survey of context engineering for large language models (Provides comprehensive overview of techniques to improve LLM performance through better context management.)

- Future of work with AI agents (Stanford research offering data-driven insights into how AI agents will transform workplace dynamics.)

- Test-time scaling with reflective generative model (Presents novel approach to improving model performance during inference without retraining.)

- Towards agentic RAG with deep reasoning: a survey of RAG-reasoning systems in LLMs (Explores the convergence of retrieval-augmented generation with reasoning capabilities.)

AI hardware news

- How Nvidia’s Jensen Huang persuaded Trump to sell A.I. chips to China (Reveals the complex geopolitics and business considerations shaping AI hardware access.)

- TSMC to speed up construction of US chip plants by ‘several quarters’ (Shows acceleration of AI chip manufacturing capacity in the US market.)

- China powers AI boom with undersea data centers (Demonstrates innovative infrastructure approaches to support AI computing demands.)

- Nvidia’s resumption of H20 chip sales related to rare-earth element trade talks (Highlights the interconnected nature of AI supply chains and resource dependencies.)

- Risk of undersea cable attacks backed by Russia and China likely to rise, report warns (Warns of emerging threats to critical AI and internet infrastructure.)