Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- AI models discovering novel cancer therapies validated in human cells

- Nvidia’s DGX Spark desktop supercomputer arriving with mixed reviews

- New framework revealing AI’s uneven progress towards human-level intelligence

Can computational biology cure cancer?

Google DeepMind announced this week that a specialised model, C2S-Scale, discovered a novel cancer therapy, validated through laboratory experiments on human cells. The 27 billion parameter model, built on the familiar open-source Gemma architecture, identified that silmitasertib could amplify immune responses in specific cellular contexts, something no researcher had previously considered.

C2S-Scale converted each cell’s gene expression data into ordered lists, where position indicates expression level. After processing 57 million cells and over a billion tokens, it learned that certain gene reorderings occur only in specific immune contexts. When asked to find drugs that boost antigen presentation, it recognised a pattern humans had missed: silmitasertib plus low-dose interferon creates a synergistic effect that makes tumours visible to the immune system. Researchers at Yale are now exploring the mechanism and testing additional AI predictions. What’s striking is how quickly this moved from computational prediction to laboratory validation, suggesting these models are generating genuinely testable hypotheses rather than statistical noise. Given this is a small model, one wonders whether scaling these kinds of solutions up to current frontier-sized, trillion-parameter models will bring more progress. For now it’s possible to download the code and explore this discovery on your laptop.
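To make the "ordered list" idea concrete, here is a minimal sketch of turning one cell's expression values into a rank-ordered sequence of gene names. It is an illustration only, not the C2S-Scale pipeline: the function name `cell_to_sentence`, the `top_k` parameter, the tie-breaking rule and the toy expression values are all assumptions made for this example.

```python
from typing import Dict, List, Optional


def cell_to_sentence(expression: Dict[str, float],
                     top_k: Optional[int] = None) -> List[str]:
    """Return gene names ordered from most to least expressed.

    Hypothetical helper: genes with zero expression are dropped, and
    ties are broken alphabetically so the output is deterministic.
    """
    expressed = [(gene, level) for gene, level in expression.items() if level > 0]
    # Sort by descending expression level, then by gene name for ties.
    ranked = sorted(expressed, key=lambda item: (-item[1], item[0]))
    genes = [gene for gene, _ in ranked]
    return genes if top_k is None else genes[:top_k]


# Toy values invented for illustration; they are not real measurements.
toy_cell = {"CD74": 12.0, "HLA-A": 30.5, "B2M": 30.5, "GAPDH": 8.0, "INS": 0.0}
sentence = " ".join(cell_to_sentence(toy_cell))
print(sentence)
```

Once a cell is a sequence of tokens like this, position carries the expression signal, which is what lets a language model treat cells as text.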

This pattern-finding capability isn’t limited to single-domain models. Back in June, researchers at Tufts University published MultiXVERSE, a universal network embedding method that discovered an unexpected causal link between GABA neurotransmitters and cancer formation. The system analysed molecular, drug, and disease interactions across multiple network layers simultaneously, generating a hypothesis that researchers then validated experimentally using Xenopus laevis tadpoles. What makes MultiXVERSE particularly interesting is its ability to handle any type of multilayer network, from molecular pathways to social systems. The GABA-cancer connection it identified had been hiding in plain sight across thousands of published datasets; it took AI’s ability to traverse these complex network spaces to spot the relationship.

And yet, whilst promising, these pattern-finding breakthroughs do not guarantee large-scale outcomes. Despite a decade of progress, not a single AI-discovered drug has received regulatory approval. The industry’s 90% clinical trial failure rate persists, with AI candidates showing early promise in Phase I trials only to stumble in Phase II. The problem isn’t necessarily the AI; it’s that human biology remains stubbornly mysterious. As one industry veteran puts it, drug discovery is “probably the hardest thing mankind tries to do”. Even with perfect pattern recognition, toxicity and side effects remain nearly impossible to predict. The successful companies appear to be those with patient capital and massive computing power, like Google’s Isomorphic Labs, which has raised $600 million this year and can afford to wait decades for results whilst building what they call a “generalisable drug design engine”.

The true endpoint of this pattern-finding capability may be complete cellular simulation. The Chan Zuckerberg Initiative launched rBio in August, an AI model that learns from virtual cells rather than laboratory experiments, whilst the Arc Institute announced a $100,000 prize for models that predict genetic perturbations. Google’s Demis Hassabis has declared building a virtual cell as a primary goal. These aren’t traditional mathematical models with manually coded equations; they’re AI systems learning directly from billions of real cellular observations. Stephen Quake at CZI describes the ambition starkly: flip biology from 90% experimental to 90% computational. Early results suggest this isn’t fantasy. CZI’s models can already predict cellular responses across species they’ve never encountered, whilst Stanford’s virtual yeast cells predict metabolic disruptions with high accuracy. If successful, scientists could test thousands of drug candidates computationally before touching a pipette, fundamentally changing how we approach biological research.

Recent months have seen an increase in signals that AI can help drive scientific progress. LLMs are universal translators for complexity, finding patterns in spaces too vast for human cognition. The combination of widespread access to reasoning models and available compute for scaled experiments is enabling this progress. And language, it seems, encodes more than human communication; it captures patterns of reality itself. The immediate future will likely bring more such discoveries as researchers apply similar approaches to other scientific domains and exploit ever advancing techniques. The computational infrastructure is now in place: open models aplenty, massive computational resources, and growing acceptance of AI-generated hypotheses. This feels like a mini-breakthrough moment, hopefully the first of many.

Takeaways: AI has started to cross the threshold from assistant to discoverer, not through reasoning but through pattern recognition at scales humans cannot achieve. Progress suggests we’re entering an era where major scientific advances come from AI spotting signals in noise we didn’t know existed, and where scientific discovery shifts from experimental to computational. The bottleneck isn’t the AI anymore; it’s having enough experts to test what the models discover and the courage to pursue counterintuitive findings.

Nvidia ships a beautiful disappointment

After months of delays, Nvidia’s DGX Spark has finally reached reviewers’ hands. The £3,999 compact desktop, built around the GB10 Grace Blackwell chip, promises one petaflop of AI performance in a Mac mini-sized box. UK retailer Scan suggests stock will arrive by late October, making this one of the first consumer-facing products combining Nvidia’s ARM CPU and Blackwell GPU architectures.

Sadly, the reviews paint a mixed picture. The hardware is stunning, but 4.35 tokens per second on a quantised Llama 3.1 70B is a disappointment. A Mac Studio with M3 Ultra manages 6.5 tokens per second on the same model, whilst a dual RTX 5090 setup reaches ~50 tokens per second. The DGX Spark’s 128GB unified memory offers flexibility for development work, yet its 273GB/s bandwidth becomes a major bottleneck at this price point. By comparison, the Nvidia RTX Pro 6000 offers 1,800GB/s (although it is double the price for just the GPU). Memory bandwidth remains the most critical performance factor in the LLM age.
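A rough back-of-envelope calculation shows why bandwidth dominates. During single-stream text generation, each new token requires reading roughly all of the model's weights from memory, so bandwidth divided by model size gives an upper bound on tokens per second. The sketch below assumes 4-bit quantisation (about 0.5 bytes per parameter) and ignores the KV cache, compute limits and software overhead; the function name and these simplifications are assumptions, and real measurements will land below the ceiling.

```python
def decode_ceiling_tokens_per_s(bandwidth_gb_s: float,
                                params_billion: float,
                                bytes_per_param: float) -> float:
    """Upper bound on single-stream decode speed when memory-bound.

    Assumes every generated token reads all weights once from memory.
    """
    model_size_gb = params_billion * bytes_per_param
    return bandwidth_gb_s / model_size_gb


# Assumed: a 70B-parameter model at 4-bit quantisation (~0.5 bytes/param, ~35 GB).
spark_ceiling = decode_ceiling_tokens_per_s(273, 70, 0.5)
rtx_pro_ceiling = decode_ceiling_tokens_per_s(1800, 70, 0.5)

print(f"DGX Spark ceiling:    {spark_ceiling:.1f} tokens/s")
print(f"RTX Pro 6000 ceiling: {rtx_pro_ceiling:.1f} tokens/s")
```

Under these assumptions the Spark tops out at roughly 8 tokens per second in theory, which is consistent with the 4.35 tokens per second reviewers measured, whilst the RTX Pro 6000's ceiling is about 51.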

Meanwhile, Apple announced the M5 chip this week, claiming over 4x the peak GPU compute performance of M4. The M5’s Neural Accelerator embedded in each GPU core targets exactly the workloads where the DGX Spark struggles. Where the DGX Spark shows promise is in Nvidia’s software ecosystem. The DGX OS, CUDA playbooks, and fine-tuning capabilities create a rich environment for AI research and model development. Clustering demonstrations using the extremely fast ConnectX-7 networking, both with other Sparks and even with Mac Studios, show interesting possibilities for distributed inference. But for standalone inference work, the value proposition weakens considerably.

The wider competitive landscape offers other alternatives. HP’s Z2 G1a mini workstation with an AMD AI Max+ 395 costs around £2,000 in the UK and delivers comparable performance. Even older discrete GPUs like the RTX 3090 remain compelling for many workloads. Looking ahead, Nvidia’s more powerful GB300 will represent the true “supercomputer on a desk” (with 20 FP4 PFLOPS) but expect very high pricing and limited availability.

Takeaways: The DGX Spark confirms that building competitive ARM-based AI hardware remains difficult outside Apple’s ecosystem. GPU availability is improving, but pricing reflects continued supply constraints. For most developers, a Mac with M-series silicon or a traditional x86 workstation with discrete Nvidia RTX cards offers much better value. The real question isn’t whether the DGX Spark is good enough, but whether local AI development needs specialised hardware at all when cloud GPU availability is probably the easier route to high performance compute. But the Spark is nonetheless a beautiful piece of hardware for those who can afford it, and will no doubt inspire new AI innovation with its focus on experimentation.

EXO

The ghost of AGI

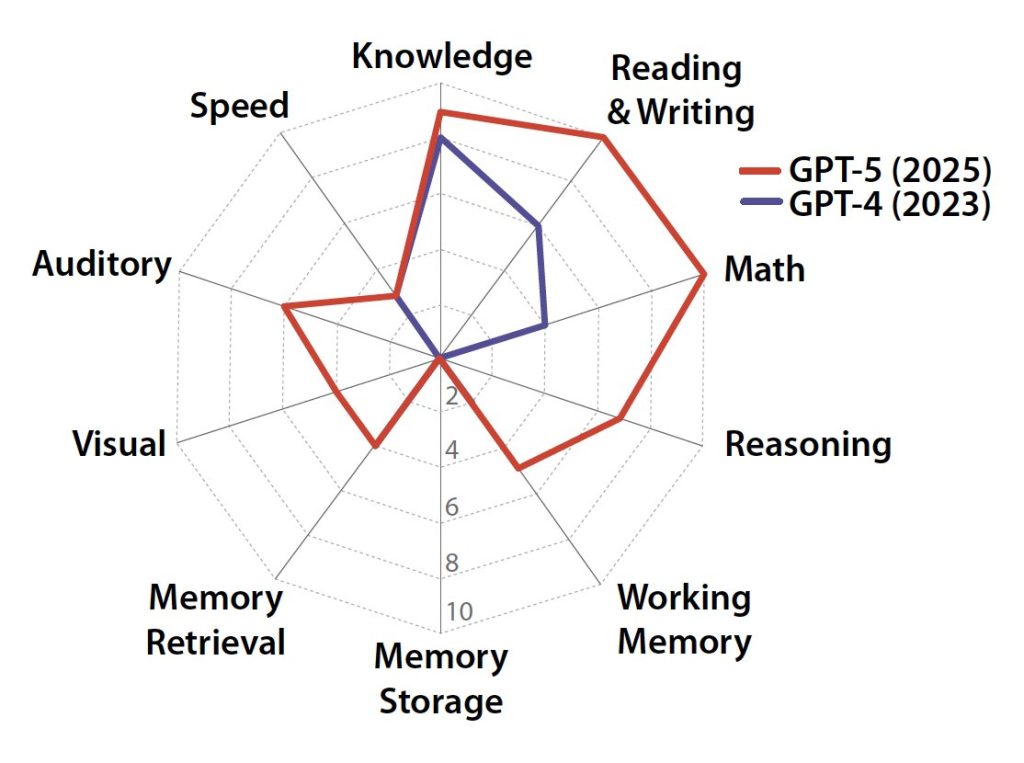

This image attempts to show our progress to AGI (artificial general intelligence). It comes from a new paper from the Centre for AI Safety that defines AGI using human psychometric tests, revealing a “jagged” cognitive profile in current models. GPT-5 scores 58% against a well-educated adult baseline, up from GPT-4’s 27%. But notice the zeros: both models completely fail at long-term memory storage despite excelling at maths and knowledge.

This framework gives us fixed goalposts rather than constantly moving targets. Progress is real but uneven. The next breakthroughs won’t come from scaling alone; they’ll require solving memory, speed, and sensory processing. Current memory solutions are hacks, injecting fragments into conversations rather than true dynamic learning. As OpenAI co-founder and visionary Andrej Karpathy notes in his latest podcast interview, we’re building “ghosts” that mimic text rather than embodied intelligences. These models lack a “cognitive core”; they can’t consolidate experiences like humans do during sleep. The path forward may demand architectural reimagining, not just bigger models.

Weekly news roundup

This week’s AI landscape shows major players accelerating their development timelines whilst grappling with the societal impacts of automation, particularly in employment and resource consumption.

AI business news

- Sundar Pichai: “Gemini 3.0 will release this year” (Google’s aggressive timeline signals intensifying competition in the foundation model race.)

- Microsoft wants you to talk to Windows 11 PCs again — Copilot gets ‘conversational’ input to complement your mouse and keyboard (Shows how AI is fundamentally changing human-computer interaction paradigms.)

- Anthropic brings mad skills to Claude (Demonstrates the shift towards more capable AI agents that can execute complex tasks autonomously.)

- Dreamforce 2025: Benioff vaunts Slack as interface to agentic enterprise (Highlights how enterprise software platforms are becoming the primary interface for AI agent deployment.)

- Anthropic aims to nearly triple annualised revenue in 2026, sources say (Indicates the explosive growth potential and market demand for advanced AI capabilities.)

AI governance news

- Tech industry grad hiring crashes 46% as bots do junior work (Critical evidence of AI’s immediate impact on knowledge work employment patterns.)

- Salesforce offers its services to boost Trump’s immigration force (Raises important questions about AI deployment in controversial government applications.)

- OpenAI pauses Sora video generations of Martin Luther King Jr. (Illustrates ongoing challenges with content moderation and ethical boundaries in generative AI.)

- Anthropic’s AI principles make it a White House target (Shows tensions between AI safety approaches and political pressures.)

- UK spy chief warns of AI danger, though not disaster-movie doom (Provides balanced perspective on AI risks from national security establishment.)

AI research news

- The art of scaling reinforcement learning compute for LLMs (Essential insights into how to effectively scale RL training for more capable language models.)

- Verbalised sampling: How to mitigate mode collapse and unlock LLM diversity (Addresses crucial challenges in maintaining output diversity as models scale.)

- Recursive language models (Explores novel architectural approaches that could improve reasoning capabilities.)

- SimulatorArena: Are user simulators reliable proxies for multi-turn evaluation of AI assistants? (Questions fundamental assumptions about how we evaluate conversational AI systems.)

- Training-free group relative policy optimisation (Offers potential efficiency improvements for deploying AI systems without expensive retraining.)

AI hardware news

- Exclusive: Nvidia and TSMC unveil first Blackwell chip wafer made in US (Marks significant milestone in onshoring AI chip production for strategic autonomy.)

- OpenAI and Broadcom forge alliance to design custom AI chips, reshaping the future of AI infrastructure (Shows major AI companies pursuing custom silicon to optimise performance and reduce costs.)

- TSMC raises revenue forecast on bullish outlook for AI megatrend (Indicates sustained massive investment in AI infrastructure continues unabated.)

- Water: AI mega projects raise alarm in some of Europe’s driest regions (Highlights critical environmental sustainability challenges of scaling AI infrastructure.)

- ASML reports flat sales again, forecasts growth for 2026 despite collapse of Chinese business (Shows geopolitical impacts on the semiconductor supply chain crucial for AI development.)