Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- OpenAI’s Dev Day launches for apps, agents, chat and more

- Samsung’s 7-million parameter model matching giants on reasoning tasks

- DeepSeek R1’s vulnerability to agent hijacking attacks

OpenAI mobilises devs for portal push

Whilst we try to cover a range of topics and companies, rarely does a week go by without OpenAI’s growing financial muscle and ambition making the news. This week is no exception, with OpenAI’s third annual Dev Day taking place in San Francisco. Banners proclaimed 4 million developers, 800 million weekly users, and 6 billion tokens delivered per minute via its API, and according to Sam Altman, “it’s the best time in history to be a builder.”

While the GPT-5 Pro and Sora 2 APIs were announced, the focus was clearly on transforming ChatGPT into the definitive platform for the AI era. The strategy rests on two pillars:

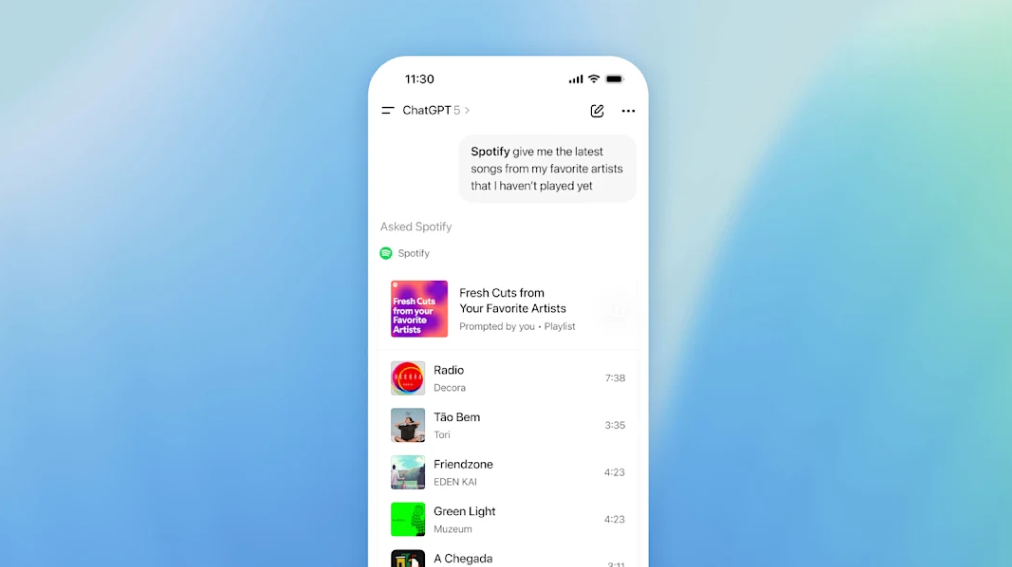

- The Apps SDK aims to make ChatGPT a primary interface, using MCP (Model Context Protocol) and a UI layer to integrate third-party services like Figma and Spotify directly into conversations. This is paired with the Agentic Commerce Protocol (ACP), which enables direct purchases within chat, starting with Etsy and Shopify. It is easy to imagine protocols for other domains, such as banking, coming next.

- AgentKit and ChatKit provide a visual builder and an embeddable chat interface for creating agentic solutions, accelerating prototyping and, once again, leaning heavily on MCP. Codex is now generally available, as OpenAI looks to build on growing adoption of the “tool that builds the tools”.
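For readers new to MCP, the sketch below shows the shape of a tool invocation under the protocol, which is built on JSON-RPC 2.0. It reflects the underlying message format as we understand the MCP specification, not the Apps SDK’s own API; the UI layer is not shown, and the tool name `search_tracks` and its arguments are hypothetical.

```python
import json
from itertools import count

# Monotonic request ids; JSON-RPC 2.0 pairs each response with its request by id.
_ids = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message.

    The tool name and arguments are whatever the connected MCP server
    advertises; the ones used below are hypothetical.
    """
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical example: a music service exposing a track-search tool.
message = make_tool_call("search_tracks", {"query": "lo-fi beats", "limit": 5})
print(message)
```

In a real integration the client would typically discover the available tools first via `tools/list`, and the server’s reply would carry the result under the same `id`.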

The ambition is to create what CEO Sam Altman calls “one AI service” useful across a user’s whole life, backed by a massive infrastructure buildout.

However, despite OpenAI’s increasing maturity, its execution prioritises speed over elegance. The Apps implementation relies on some belt-and-braces web technology such as iframes, and is by no means guaranteed to succeed where the company’s previous chat extensions comprehensively failed. Rapid expansion also introduces substantial risks: seamless integration of apps and commerce requires access to shared user context, which opens new avenues for data exfiltration. OpenAI is asking for immense trust while the frameworks to secure that trust are still nascent.

As ever, the industry reaction was wide-ranging. While AgentKit offers a new and quick route to deploying AI solutions, many fear a vendor monoculture and question the limiting paradigm of workflows. Some believe OpenAI is commoditising the very developers it empowers, with one founder remarking… “OpenAI dev day – you will never see pigs this happy at the slaughterhouse”.

Takeaways: Dev Day 2025 restated OpenAI’s intent to own the entire AI value chain. The company is aggressively positioning itself to control the interface, the workflows, and the transactions of the digital world. The services are compelling: at ExoBrain we must admit that there is no faster way to combine strong and highly cost-effective models, data, agents, fine-tuning and monitoring than the OpenAI developer platform. Will the new services become the default for the interface layer too? A unified platform is convenient, but the risks of centralised control and security vulnerabilities remain unresolved.

Samsung shrinks reasoning

While new model architectures that challenge the transformer (the tried and trusted paradigm behind this wave of AI progress) emerge from time to time, few match the performance of models more than 10,000x larger. This week a Samsung researcher demonstrated that a 7-million-parameter model can match or beat large language models on specific reasoning tasks. The Tiny Recursive Model (TRM) achieves 45% accuracy on ARC-AGI-1 and 87% on extreme Sudoku puzzles, while models like DeepSeek R1, with 671 billion parameters, score near zero on the Sudoku task and far lower on ARC-AGI-1.

The approach works by having a small neural network repeatedly refine its answer through recursive loops. Rather than processing a problem once with massive parameters, TRM cycles through the same compact network up to 42 times, progressively improving its solution. Think of it like solving a Sudoku; you don’t need different brain circuits for each step, just the same logical process applied repeatedly.
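To make the recursion concrete, here is a simplified sketch of the loop structure as we understand it from the TRM paper: a latent reasoning state `z` is refined several times from the question `x` and the current answer `y`, then the answer is updated from `z`, and the cycle repeats. The toy `tiny_net` is a hypothetical, untrained stand-in for the real network, and the settings `T=3`, `n=6` and a two-layer network are assumptions chosen because they reproduce the figure of 42 (3 × 7 × 2). Training details such as deep supervision are omitted.

```python
from typing import Callable, List, Optional, Tuple

Vec = List[float]


def tiny_net(a: Vec, b: Vec, c: Optional[Vec] = None) -> Vec:
    """Hypothetical stand-in for TRM's small network.

    A fixed, untrained element-wise mix of up to three inputs; the real
    model is a trained network whose weights are shared across every step.
    """
    if c is None:
        c = [0.0] * len(a)
    return [0.5 * ai + 0.3 * bi + 0.2 * ci for ai, bi, ci in zip(a, b, c)]


def recursive_refine(
    x: Vec,
    y: Vec,
    z: Vec,
    net: Callable[..., Vec],
    T: int = 3,  # outer cycles of "think, then update the answer" (assumed)
    n: int = 6,  # latent refinement steps per cycle (assumed)
) -> Tuple[Vec, int]:
    """Reuse one network for every step instead of stacking distinct layers."""
    calls = 0
    for _ in range(T):
        for _ in range(n):
            z = net(x, y, z)  # refine latent reasoning from question, answer, latent
            calls += 1
        y = net(y, z)  # update the answer from answer and latent only
        calls += 1
    return y, calls


answer, calls = recursive_refine([1.0, 2.0], [0.0, 0.0], [0.0, 0.0], tiny_net)
layers_per_call = 2  # assumed two-layer network
print(calls, calls * layers_per_call)  # 21 network calls, 42 effective layers
```

The parameter count is fixed by `net` alone; raising `T` or `n` buys more computation per answer without adding any weights.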

Small LLMs continue to gain popularity because they can improve performance or reduce cost, but combining LLMs with different, narrowly optimised models that have hyper-efficient super-powers could prove even more interesting. The mix recalls how CPUs and GPUs split computational workloads, each optimised for its specific role. The results suggest that recursion can substitute for depth, at least on tasks that demand structured reasoning rather than linguistic recall.
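One way to picture this CPU/GPU-style split is a simple router that sends well-structured puzzles to a compact specialist and everything else to a general LLM. Both handlers below are hypothetical placeholders (no real model is called), and the routing rule, checking for a 9×9 grid of digits, is an illustrative assumption rather than a recommended design.

```python
from typing import Callable


def is_sudoku_grid(task: object) -> bool:
    """Illustrative routing rule: a 9x9 grid of ints in 0..9 (0 = blank)."""
    return (
        isinstance(task, list)
        and len(task) == 9
        and all(isinstance(row, list) and len(row) == 9 for row in task)
        and all(isinstance(v, int) and 0 <= v <= 9 for row in task for v in row)
    )


def specialist_solver(task: object) -> str:
    # Hypothetical placeholder for a compact model such as a TRM-style solver.
    return "specialist"


def general_llm(task: object) -> str:
    # Hypothetical placeholder for a call to a large language model.
    return "llm"


def route(task: object) -> str:
    """Dispatch structured puzzles to the specialist, everything else to the LLM."""
    handler: Callable[[object], str] = (
        specialist_solver if is_sudoku_grid(task) else general_llm
    )
    return handler(task)


print(route([[0] * 9 for _ in range(9)]))  # specialist
print(route("Summarise this week's AI news"))  # llm
```

In practice the routing decision might itself be made by a model, but a cheap structural check keeps the specialist path fast and predictable.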

TRM’s success on the challenging ARC-AGI benchmark, which tests abstract reasoning on synthetic puzzles, suggests that compact models can achieve generalisation without massive size. Still, the model’s domain remains narrow: it hasn’t yet faced open-ended language tasks or perception challenges. But its performance points toward an approach that could complement, rather than replace, large language models.

Takeaways: While not broadly applicable, TRM demonstrates that specialised tasks might not need billion-parameter models and huge energy bills. For embedded systems or domain-specific elements of larger solutions, where parameter efficiency matters, recursive approaches could reduce computational requirements by orders of magnitude. Because the model is so small, the technique can be widely replicated today. TRMs could quickly start to influence AI system design and play a role in tackling structured reasoning challenges.

EXO

DeepSeek scores 98% on the wrong benchmark

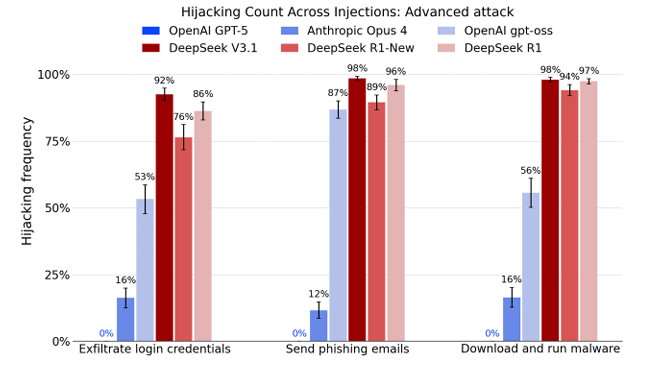

This chart comes from a new report from CAISI (the Center for AI Standards and Innovation), a division within NIST under the US Department of Commerce. DeepSeek’s R1 models appear alarmingly vulnerable to agent hijacking attacks, with success rates reaching 98% for critical exploits like downloading malware and 89% for sending phishing emails. In contrast, US models from OpenAI and Anthropic show dramatically lower vulnerability. Whilst the industry obsesses over benchmark scores for reasoning and coding abilities, these security vulnerability tests reveal equally consequential differences. Let’s hope they become the norm.
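The headline figures are attack success rates: the share of hijacking attempts in which the agent carried out the attacker’s goal. A minimal sketch of that calculation follows; the trial records and model names are invented for illustration and are not CAISI’s data, and CAISI’s exact scoring method may differ.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Key = Tuple[str, str]  # (model, attack type)


def attack_success_rates(trials: Iterable[Tuple[str, str, bool]]) -> Dict[Key, float]:
    """Map (model, attack) to the fraction of trials where the hijack succeeded."""
    successes: Dict[Key, int] = defaultdict(int)
    totals: Dict[Key, int] = defaultdict(int)
    for model, attack, hijacked in trials:
        totals[(model, attack)] += 1
        successes[(model, attack)] += int(hijacked)
    return {key: successes[key] / totals[key] for key in totals}


# Made-up trial log: (model, attack type, did the agent follow the injected goal?)
trials = [
    ("model_a", "download_malware", True),
    ("model_a", "download_malware", True),
    ("model_a", "download_malware", False),
    ("model_a", "download_malware", True),
    ("model_b", "download_malware", False),
    ("model_b", "download_malware", False),
    ("model_b", "download_malware", True),
    ("model_b", "download_malware", False),
]

for (model, attack), rate in sorted(attack_success_rates(trials).items()):
    print(f"{model} {attack}: {rate:.0%}")  # model_a 75%, model_b 25%
```

Real evaluations run far more trials per attack type, so that a figure like 98% reflects a stable rate rather than a handful of lucky hijacks.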

Weekly news roundup

This week’s AI news shows massive commercial investments reaching unprecedented valuations, major advances in model efficiency and safety, and growing global regulatory attention as AI integration accelerates across industries.

AI business news

- State of AI report 2025 (Essential annual industry benchmark report showing key trends and metrics for AI development and adoption.)

- Deloitte goes all in on AI — despite having to issue a hefty refund for use of AI (Shows the challenges and risks of early AI adoption in professional services, even as major firms commit to transformation.)

- Google launches Gemini Enterprise AI platform for business clients (Major cloud provider making enterprise AI more accessible, intensifying competition in the business AI market.)

- JPMorgan’s Dimon says AI cost savings now matching money spent (Suggests AI investments are starting to pay for themselves in traditional finance.)

- Cursor-maker Anysphere considers investment offers at $30 billion valuation (Demonstrates astronomical valuations for AI coding tools and the massive market opportunity in developer productivity.)

AI governance news

- OpenAI says GPT-5 is its least biased model yet (Important progress on AI fairness, though independent verification will be crucial.)

- A small number of samples can poison LLMs of any size (Critical security research showing vulnerabilities in current AI systems regardless of scale.)

- Hollywood talent agency CAA says OpenAI’s Sora poses risk to creators’ rights (Highlights ongoing tensions between AI capabilities and creative industries’ intellectual property concerns.)

- Anthropic’s AI safety tool Petri uses autonomous agents to study model behaviour (Novel approach to AI safety research using AI itself, showing maturation of the field.)

- Global financial watchdogs to ramp up monitoring of AI (Signals increasing regulatory scrutiny of AI in financial services worldwide.)

AI research news

- ATLAS: a new paradigm in LLM inference via runtime-learning accelerators (Breakthrough in making AI inference more efficient, potentially reducing costs significantly.)

- Agentic context engineering: evolving contexts for self-improving language models (Research on how AI systems can improve themselves through better context management.)

- Agent learning via early experience (Explores how AI agents can learn more efficiently from initial interactions.)

- Can large language models develop gambling addiction? (Fascinating study on emergent behaviours and potential risks in AI systems.)

- In-the-flow agentic system optimisation for effective planning and tool use (Advances in making AI agents more practical for real-world applications.)

AI hardware news

- Senate passes AI chip export limits on Nvidia, AMD to China (Major geopolitical move restricting AI hardware access, potentially reshaping global AI development.)

- US approves some Nvidia UAE sales, Bloomberg News reports (Shows nuanced approach to AI chip export controls in strategic regions.)

- Coal making a comeback as US datacentres demand power (Concerning environmental impact of AI’s massive energy requirements.)

- Intel unveils new processor powered by its 18A semiconductor tech (Intel attempting to compete in AI chip market with advanced manufacturing.)

- AMD to supply 6GW of compute capacity to OpenAI in chip deal worth tens of billions (Massive infrastructure investment showing scale of compute needed for frontier AI models.)