Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- Whether China will leverage solar dominance to power its open-source AI surge

- Replit’s Agent 3 coding autonomously for 200 minutes without supervision

- ChatGPT gaining write access to external systems through MCP

China takes the lead on open models

This week, Alibaba released Qwen3-Next, a model that achieves frontier performance whilst activating only a tiny fraction of its neural network. It’s the sort of technical achievement that would have dominated headlines a year ago but now feels almost routine from Chinese labs. For those returning from summer holidays with Dan Wang’s new book “Breakneck” fresh in mind (a portrait of China as an “engineering state” fearlessly building megaprojects whilst America remains a “lawyerly society” reflexively blocking progress) the AI landscape seems to validate his thesis. And yet the reality is always more complex.

Chinese labs are engineering open-weight models at extraordinary pace, with downloads overtaking the US and performance matching frontier capabilities. But critical hardware bottlenecks continue to threaten this momentum just as Beijing announces plans to “diffuse” AI into 90% of its economy by 2030. Can they do it? One thing that won’t hurt is China’s overwhelming dominance in solar energy (the kind of massive industrial capability Wang chronicles), which might provide part of the route to the AI future.

Around twenty Chinese AI labs are now releasing competitive open models, from obvious leaders like DeepSeek and Qwen to emerging players like RedNote and Xiaomi. Qwen3-Next, likely a forerunner to Qwen 3.5, exemplifies technical sophistication. The model requires less than 10% of the training cost of comparable dense models and delivers 10x higher inference throughput at long contexts, the sort of efficiency gains that matter when scaling to population-wide deployment.

The momentum is undeniable. In the second half of 2025, China overtook the US in cumulative model downloads on Hugging Face, what researcher Nathan Lambert calls “the flip”. Martin Casado from a16z estimates that 80% of his portfolio companies are using Chinese open models. Silicon Valley startups are building on Qwen and DeepSeek because they work, they’re free, and they keep improving.

This proliferation extends beyond language models. Chinese labs are releasing competitive vision models, video generation, and multimodal systems. The typical Chinese lab operates with teams a fraction the size of Western counterparts (Qwen3 had 177 contributors versus Llama 3’s 500+) yet achieves superior results. When DeepSeek R1 broke out, it triggered a wave of other labs pivoting to open releases, accelerating the entire ecosystem. Openness is proving effective at acceleration but it remains to be seen if this strategy continues.

Meanwhile the Chinese establishment is going all in. The State Council’s new AI+ plan sets concrete targets that far exceed the ambition of Western policymakers. Beijing wants AI applications deployed across 90% of key economic sectors within five years. But the plan doesn’t include detail on how AI will be deployed at this scale. Chinese VC funding for AI startups overall has sunk to decade lows. Today, investors are spooked by regulatory uncertainty, youth unemployment, and the property sector collapse.

And a key underlying bottleneck for China is advanced silicon, with production of high-bandwidth memory (HBM), rather than GPUs, being a particular challenge. China stockpiled 13 million HBM stacks through Samsung in the one-month gap between the US export control announcement and enforcement. That’s enough for 1.6 million Ascend 910C (China’s homegrown AI chip) packages, but they’ll run out by year-end. Reportedly China’s domestic producer CXMT won’t reach meaningful HBM production until 2026 at the earliest. This explains why Beijing specifically requested HBM relaxation in recent trade talks, not more TSMC access or lithography tools. It’s also why DeepSeek’s next-generation model training on Huawei chips keeps getting delayed. Export controls are having an impact.
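As a rough check on the HBM arithmetic, the short Python sketch below divides the stockpile by the number of stacks each chip package needs. The figure of eight stacks per package is our assumption, implied by the ratio of the two numbers quoted above rather than stated by any source here, and the function name is purely illustrative.

```python
# Figures quoted in the text above.
HBM_STACKS_STOCKPILED = 13_000_000
PACKAGES_QUOTED = 1_600_000

# Assumption: eight HBM stacks per Ascend 910C package (not stated in the text).
STACKS_PER_PACKAGE = 8


def packages_supported(stacks: int, stacks_per_package: int) -> int:
    """Return how many complete chip packages a stockpile of stacks can supply."""
    return stacks // stacks_per_package


# Ratio implied by the two quoted figures, as a sanity check on the assumption.
implied_ratio = HBM_STACKS_STOCKPILED / PACKAGES_QUOTED
print(f"Implied stacks per package: {implied_ratio:.3f}")  # 8.125

packages = packages_supported(HBM_STACKS_STOCKPILED, STACKS_PER_PACKAGE)
print(f"Packages supported: {packages:,}")  # 1,625,000
```

Under that assumption the stockpile covers roughly 1.6 million packages, which matches the figure quoted.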

But whilst the US focuses on chip supremacy, China has been building energy infrastructure at unprecedented speed. China controls 95% of global solar wafer production and 80% of module manufacturing. China’s manufacturing scale creates a self-reinforcing cycle of deployment and cost reduction. At current rates solar is on a trajectory to surpass nuclear next year and be the world’s primary source of energy by the 2040s. A 1 GW data centre needs 10,000 acres of panels, which is substantial but feasible, and possibly the only solution to the inevitable AI energy crunch. More importantly, these facilities can operate completely off-grid, bypassing interconnection delays that plague Western data centre development. Power availability, not cost, is key. China’s ability to deploy solar at scale, whilst the US focuses on gas or modular nuclear and waits years for grid connections and Europe struggles with land constraints, could offset its hardware limitations.

Takeaways: China’s AI trajectory defies simple narratives. Their open model strategy has succeeded in gaining global adoption and technical credibility. The 90% economic diffusion target is ambitious, but even achieving half would transform their economy. Hardware bottlenecks are real and binding in the near term, particularly HBM through 2026. But the energy dimension could change everything. If China leverages its solar manufacturing dominance to power whatever compute it can build or acquire, whilst the West remains gridlocked by bureaucratic constraints, the competitive dynamics will shift. The race for AI supremacy might ultimately be determined not by who can manufacture the most advanced chips, but by who can deploy the most power generation. On current trajectories, that’s China by a considerable margin. The next two years will reveal whether hardware constraints or energy abundance proves more decisive.

The next wave of autonomous agents

Replit announced Agent 3 on Wednesday, a new iteration of its autonomous, or “vibe”, coding system that can run unsupervised for up to 200 minutes and test its own output using a browser. The release comes just months after a Replit system deleted a user’s entire codebase, raising questions about the timing.

The new agent introduces recursive automation capabilities, allowing it to create other agents and build automations for platforms including Slack, Notion, and Linear. Unlike previous versions, Agent 3 periodically tests applications it builds, clicking through interfaces to verify functionality and automatically fixing detected issues.

Replit claims the system is three times faster and ten times more cost-effective than Computer Use models. The company secured $250 million in funding this week, reaching a $3 billion valuation, as investor interest in code generation continues. Competitors Cursor and Cognition are valued at around $10 billion each.

Agent 3 arrives as part of a broader wave of autonomous AI systems entering the market. Box announced its AI Studio update this week, allowing enterprises to build agents that work with unstructured data across contracts, invoices, and research documents. The company is also launching Box Automate for workflow automation with drag-and-drop agent deployment. Meanwhile, Anthropic’s Claude can now create and edit Excel spreadsheets, PowerPoint presentations, and PDFs directly, using a private computer environment to write and execute code.

Takeaways: Replit is betting its reputation on autonomous agents despite recent challenges. Nothing stands still and agents continue to evolve. The recursive capability, where AI builds AI, marks new territory in mainstream automation. Meanwhile we see material iterations in the agents being made available in the business environment. In the coming months, combining capabilities such as a 200-minute runtime with document integration and creation will demonstrate the growing potential of agents and how they begin to reliably handle the complex, multi-step workflows that constitute most knowledge work.

EXO

MCP goes mainstream

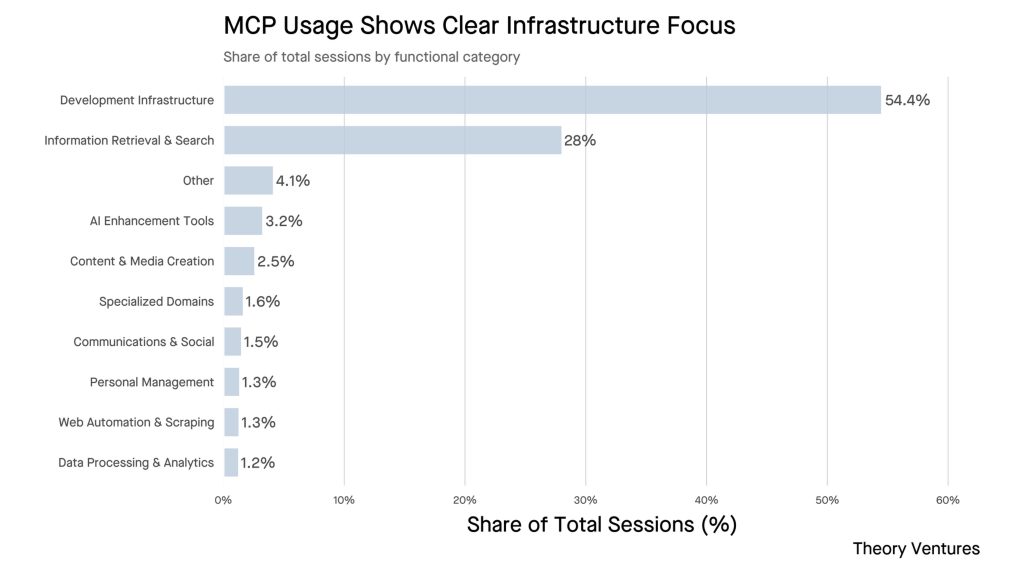

This chart and analysis reveal how Model Context Protocol (MCP) usage has concentrated in development infrastructure, with 54.4% of all sessions focused on technical tasks. MCP is an open standard that lets AI assistants connect to external tools, essentially allowing AI to read from and interact with other systems. Whilst Claude pioneered MCP support, adoption has remained largely technical, as the chart shows with minimal usage in consumer categories like personal management.

ChatGPT’s latest (beta) update could change this trajectory. OpenAI just enabled full MCP write access for Plus and Pro users, meaning ChatGPT can now actively modify external systems, not just read from them. This transforms ChatGPT from a query interface into an active automation platform.

Now, with ChatGPT’s mainstream reach, MCP could expand beyond developer tools, but only if it learns the right lessons. As Vercel’s team discovered, early MCP tools failed because they mirrored traditional software integrations/APIs, forcing LLMs to orchestrate multiple steps. Much better results can be achieved with workflow tools that handle complete intentions in single operations. This shift is exactly what consumers need: instead of learning to chain commands (“create document, then share it, then notify team”), they can complete natural goals. When MCP tools match how people and their AIs think rather than how APIs work, the protocol will move on from being a technical curiosity to part of our everyday lives.
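To make the contrast concrete, here is a minimal Python sketch of the two tool shapes. It deliberately uses no MCP SDK: `FakeWorkspace` and every method name are hypothetical stand-ins for whatever service a real MCP server would wrap. The first three methods mirror individual API endpoints, so the model has to chain them itself; the last one handles the whole intention in a single call.

```python
from dataclasses import dataclass, field


@dataclass
class FakeWorkspace:
    """Hypothetical in-memory backend standing in for a real document service."""

    documents: dict = field(default_factory=dict)
    shares: dict = field(default_factory=dict)
    notifications: list = field(default_factory=list)
    next_id: int = 1

    # API-shaped tools: each mirrors one endpoint, so the model must chain them.
    def create_document(self, title: str, body: str) -> str:
        doc_id = f"doc-{self.next_id}"
        self.next_id += 1
        self.documents[doc_id] = {"title": title, "body": body}
        return doc_id

    def share_document(self, doc_id: str, team: str) -> None:
        if doc_id not in self.documents:
            raise KeyError(f"unknown document: {doc_id}")
        self.shares.setdefault(doc_id, set()).add(team)

    def notify_team(self, team: str, message: str) -> None:
        self.notifications.append((team, message))

    # Workflow-shaped tool: one call covers the complete intention.
    def publish_document_to_team(self, title: str, body: str, team: str) -> dict:
        doc_id = self.create_document(title, body)
        self.share_document(doc_id, team)
        self.notify_team(team, f"New document shared: {title}")
        return {"doc_id": doc_id, "shared_with": team, "notified": True}


workspace = FakeWorkspace()
result = workspace.publish_document_to_team("Q3 plan", "Draft text", "marketing")
print(result)
```

With the workflow-shaped tool the assistant makes one call and gets back a single summary of what happened, rather than carrying a document ID across three separate steps where any one of them could go wrong.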

Weekly news roundup

This week’s news reveals accelerating enterprise AI adoption, growing regulatory scrutiny especially around child safety, significant advances in AI agent capabilities, and unprecedented infrastructure investments as the industry grapples with balancing innovation speed against environmental concerns.

AI business news

- Oracle takes a breather after AI-powered record run toward $1 trillion club (Shows the massive market value being created by AI infrastructure investments and cloud computing capabilities.)

- Stability AI’s enterprise audio model cuts production time from weeks to minutes (Demonstrates how generative AI is revolutionising creative workflows and production pipelines in media industries.)

- ASML invests $1.5B in French AI startup Mistral, forming European tech alliance (Highlights strategic European AI partnerships and the geopolitical dimension of AI development outside US-China dominance.)

- Microsoft reportedly plans to start using Anthropic models to power some of Office 365’s Copilot features (Shows the shift toward multi-model AI strategies in enterprise software rather than single-vendor dependence.)

- Microsoft folds Sales, Service, Finance Copilots into 365 (Indicates the consolidation of AI features into unified enterprise offerings for better integration and user experience.)

AI governance news

- Albania appoints AI bot as minister to tackle corruption (Pioneering use of AI in government functions, though raises important questions about accountability and democratic oversight.)

- A California bill that would regulate AI companion chatbots is close to becoming law (Shows growing regulatory attention to AI’s psychological impacts and the need for user protection frameworks.)

- Meta, OpenAI Face FTC Inquiry on Chatbot Impact on Kids (Highlights child safety concerns driving regulatory scrutiny and potential compliance requirements for AI developers.)

- US Senator Cruz proposes AI ‘sandbox’ to ease regulations on tech companies (Represents efforts to balance innovation with regulation through experimental frameworks for testing AI applications.)

- New RSL spec wants AI crawlers to show a license or pay (Addresses the critical issue of content licensing for AI training and potential new revenue models for content creators.)

AI research news

- AgentGym-RL: Training LLM Agents for Long-Horizon Decision Making through Multi-Turn Reinforcement Learning (Advances in training AI agents for complex, multi-step tasks relevant to autonomous systems and workflow automation.)

- LiveMCP-101: Stress Testing and Diagnosing MCP-enabled Agents on Challenging Queries (Important work on evaluating AI agent capabilities and identifying failure modes in real-world scenarios.)

- A Survey of Reinforcement Learning for Large Reasoning Models (Comprehensive overview of cutting-edge techniques for improving AI reasoning capabilities through reinforcement learning.)

- Reverse-Engineered Reasoning for Open-Ended Generation (Novel approaches to improving AI generation quality by understanding and replicating reasoning processes.)

- Sharing is Caring: Efficient LM Post-Training with Collective RL Experience Sharing (Efficiency improvements in model training that could reduce costs and accelerate AI development cycles.)

AI hardware news

- SK Hynix Shares Climb to Record After AI Memory Milestone (Shows the boom in AI memory chip demand and the critical role of specialised hardware in AI advancement.)

- US official: Winning AI more important than saving climate (Reveals the tension between AI compute needs and environmental goals, highlighting policy trade-offs.)

- Nvidia’s context-optimized Rubin CPX GPUs were inevitable (Demonstrates the evolution of specialised AI hardware optimised for specific computational patterns.)

- OpenAI, Nvidia set to announce UK data center investments, Bloomberg News reports (Indicates global expansion of AI infrastructure and the UK’s strategic positioning in the AI ecosystem.)

- US data center build hits record as AI demand surges, Bank of America Institute says (Shows the massive infrastructure investments driven by AI compute requirements and their economic implications.)