Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- AI models achieving gold at the maths Olympiad whilst knowing their own limits

- New research that reveals which jobs AI impacts most

- How AI infrastructure spending is now contributing more to US GDP growth than consumer spending

Self-aware AI climbs down from Mount Stupid

As we reported last week, both Google DeepMind and OpenAI achieved gold medal performances at the 2025 International Mathematical Olympiad (IMO), scoring 35/42 points. This week, Google released a de-tuned version of its winning system, with reduced thinking time, as Gemini 2.5 Deep Think, a release that could usher in the next phase of reasoning-model development. As details of the record-breaking IMO attempts emerge, it appears that the most striking aspect of these results isn’t the elite problem-solving. OpenAI’s model looked at the hardest problem in the competition, one designed to separate the very best human mathematicians, and refused to attempt it. For anyone working with language models, this refusal is revolutionary. Previous generations of LLMs would have confidently produced a plausible-sounding but entirely incorrect proof. This new model knew that it didn’t know.

This represents what you might call AI’s progression along the Dunning-Kruger curve. Early LLMs were perched atop “Mount Stupid”, confidently hallucinating answers to questions far beyond their capability. They were the quintessential overconfident amateurs who didn’t know what they didn’t know. The 2025 IMO models, however, have developed epistemic awareness: the ability to assess their own limitations and how far to trust their own reasoning.

Noam Brown from OpenAI, a key contributor to the o-series reasoning models, specifically highlighted this as a breakthrough, because it addresses a major complaint from experts that previous models would “output a very convincing but wrong answer” when stumped. The experimental OpenAI model expressed uncertainty naturally in its working, writing phrases like “good!” when confident and using question marks or “seems hard” when unsure.

The technical implementations for achieving this “AI self-awareness” vary, offering insights into the future of reliable AI architectures. It is clear that progress is no longer solely about the raw capability of base models, but about the sophisticated systems engineered to manage the reasoning process.

OpenAI’s approach emphasises internal calibration. They have incorporated confidence scoring for each reasoning step, creating an internal “reinforcement learning signal” that guides the model’s exploration. If the confidence score drops below a certain threshold during the reasoning process, the model can halt, re-evaluate, or, as seen in the IMO, simply refuse the task. Google DeepMind’s Deep Think, by contrast, achieves reliability through a structured, specialist architecture focused on sophisticated search strategies.
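OpenAI has not published how this calibration works, so what follows is only a minimal sketch of the general idea, assuming each reasoning step arrives with a self-assessed confidence score. `Step`, `propose_step`, the 0.5 threshold and the scripted proposer are all hypothetical stand-ins for the model, and the sketch covers halting and refusal only, not re-evaluation.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Step:
    text: str
    confidence: float  # hypothetical self-assessed confidence in [0, 1]


def reason_with_gate(
    propose_step: Callable[[List[Step]], Optional[Step]],
    threshold: float = 0.5,
    max_steps: int = 20,
) -> Tuple[str, List[Step]]:
    """Step-by-step reasoning loop that stops as soon as confidence drops.

    propose_step is a hypothetical stand-in for the model: it returns the
    next step given the steps so far, or None when the solution is complete.
    """
    steps: List[Step] = []
    for _ in range(max_steps):
        step = propose_step(steps)
        if step is None:
            return "answered", steps
        if step.confidence < threshold:
            # Below the threshold: abstain rather than emit a shaky proof.
            return "refused", steps
        steps.append(step)
    return "budget_exhausted", steps


def scripted(confidences: List[float]) -> Callable[[List[Step]], Optional[Step]]:
    """Hypothetical fake model that replays a fixed list of confidences."""
    def propose(steps: List[Step]) -> Optional[Step]:
        i = len(steps)
        if i == len(confidences):
            return None
        return Step(f"step {i + 1}", confidences[i])
    return propose


print(reason_with_gate(scripted([0.9, 0.8, 0.95]))[0])  # answered
print(reason_with_gate(scripted([0.9, 0.3, 0.95]))[0])  # refused
```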

Traditional reasoning models rely on a linear chain of thought, essentially a single-path search in which one flawed early step can corrupt the entire outcome and lead to hallucinations. Deep Think instead uses a “parallel thinking” methodology which, internally, functions like an advanced tree-search algorithm. When faced with a complex problem, Deep Think doesn’t pursue just one solution; it spawns multiple hypotheses and explores them simultaneously. This breadth-first exploration makes the reasoning process far more robust.
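A toy example, not Deep Think’s actual algorithm, shows why a single committed path is fragile. The hypothetical `TREE` lists the candidate next steps for each partial argument; the linear chain always takes the first option and never backtracks, while the parallel search keeps up to `width` hypotheses alive at each depth.

```python
from typing import Dict, List, Optional

# Hypothetical reasoning tree: each key is a partial argument, each value the
# next steps the model could take. Leaves ending "(valid)" are correct proofs.
TREE: Dict[str, List[str]] = {
    "start": ["lemma A", "lemma B"],
    "lemma A": ["A1 (flawed)", "A2 (flawed)"],
    "lemma B": ["B1", "B2 (valid)"],
}


def is_solution(node: str) -> bool:
    return node.endswith("(valid)")


def linear_chain(root: str) -> Optional[str]:
    """Single path: commit to the first candidate step, never backtrack."""
    node = root
    while node in TREE:
        node = TREE[node][0]
    return node if is_solution(node) else None


def parallel_search(root: str, width: int = 4) -> Optional[str]:
    """Breadth-first: explore up to `width` hypotheses at each depth."""
    frontier = [root]
    while frontier:
        next_frontier: List[str] = []
        for node in frontier:
            if is_solution(node):
                return node
            next_frontier.extend(TREE.get(node, []))
        frontier = next_frontier[:width]
    return None


print(linear_chain("start"))     # None: the early choice of lemma A was a dead end
print(parallel_search("start"))  # B2 (valid)
```

With `width=1` the parallel search collapses back into the linear chain and fails in the same way, which is the intuition behind spending compute on breadth.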

Crucially, managing this parallelism efficiently requires more than raw compute. Deep Think was trained with novel reinforcement learning techniques on a “curated corpus of high-quality solutions.” This specialised training lets Deep Think develop an internal intuition for whether a specific reasoning path is likely to succeed, so the system can prioritise promising paths and, equally importantly, discard dead ends early, making the most of its computational budget.
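One way to picture learned prioritisation is best-first search: a scoring function estimates how promising each partial path is, weak paths are pruned, and a fixed expansion budget caps the compute. This is a sketch under those assumptions; `score`, `prune_below` and `budget` are hypothetical, and the hand-written values below merely stand in for what a trained model would estimate.

```python
import heapq
from typing import Callable, List, Optional, Tuple


def guided_search(
    root: str,
    expand: Callable[[str], List[str]],
    score: Callable[[str], float],
    is_solution: Callable[[str], bool],
    prune_below: float = 0.2,
    budget: int = 10,
) -> Tuple[Optional[str], int]:
    """Best-first search: expand the most promising path first, drop weak ones.

    `score` stands in for a learned estimate of how likely a partial
    reasoning path is to succeed. Returns (solution or None, expansions used).
    """
    # heapq is a min-heap, so store negated scores to pop the best path first.
    heap = [(-score(root), root)]
    expansions = 0
    while heap and expansions < budget:
        _, node = heapq.heappop(heap)
        if is_solution(node):
            return node, expansions
        expansions += 1
        for child in expand(node):
            s = score(child)
            if s >= prune_below:  # discard likely dead ends early
                heapq.heappush(heap, (-s, child))
    return None, expansions


# Hypothetical search space and hand-assigned "intuition" scores.
tree = {"start": ["A", "B", "C"], "A": ["A1"], "B": ["B1"], "C": ["C1"]}
value = {"start": 1.0, "A": 0.1, "B": 0.7, "C": 0.4, "A1": 0.0, "B1": 0.9, "C1": 0.3}

result = guided_search(
    "start",
    expand=lambda n: tree.get(n, []),
    score=lambda n: value[n],
    is_solution=lambda n: n == "B1",
)
print(result)  # ('B1', 2)
```

Branch A is never explored because its score falls below the pruning threshold, and C is never expanded because B looked more promising and succeeded first.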

This combination of parallel architecture and learned intuition allows for rapid convergence on solutions; in our testing, Deep Think proved more capable than Grok 4 Heavy on several complex tasks. Deep Think also maintains strict operational boundaries: the model card reveals it will sometimes “over-refuse” queries to ensure safety compliance, a policy-driven form of caution.

Ultimately, these varying approaches are converging on the same outcome: systems that know when they are wrong. This is essential if AI is to deliver business value. The new level of reliability isn’t achieved by eliminating hallucination through perfect training data or larger parameter counts. It comes from building systems, whether based on internal calibration, parallelised search or external verification, that can check their progress as they think, even on problems that have hitherto been hard to verify.

The implications extend far beyond mathematics competitions. In high-stakes fields like medical diagnosis, complex engineering, legal analysis, or financial modelling, the difference between a system that hallucinates confidently and one that appropriately expresses uncertainty could in the future be measured in lives or billions of pounds. An AI that can accurately assess the validity of its own reasoning process is the prerequisite for true autonomous agency in the enterprise. A model that can say “I don’t know” is paradoxically far more useful than one that always tries to be helpful.
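The paradox becomes clearer with a simple expected-value view, sketched here under stated assumptions: the model’s confidence is well calibrated, a correct answer is worth `gain`, a wrong one costs `loss`, and saying “I don’t know” is worth zero. Answering then only pays off when confidence exceeds loss / (gain + loss), a bar that rises steeply in high-stakes settings. All values are hypothetical.

```python
def break_even(gain: float, loss: float) -> float:
    """Confidence at which answering and abstaining have equal expected value."""
    return loss / (gain + loss)


def should_answer(p_correct: float, gain: float, loss: float) -> bool:
    """Answer only if the expected value of answering beats abstaining (0)."""
    expected_value = p_correct * gain - (1 - p_correct) * loss
    return expected_value > 0


# Low stakes: a wrong answer costs as much as a right one earns.
print(should_answer(0.6, gain=1, loss=1))   # True  (break-even at 0.5)
# High stakes: a wrong answer costs 99 times what a right one earns.
print(should_answer(0.9, gain=1, loss=99))  # False (break-even at 0.99)
```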

The leap from models that could barely solve primary school maths in 2023 to achieving IMO gold with a few hours of reasoning represents just the opening chapter. We’re entering an era where AI reasoning time will stretch from minutes to days, potentially weeks, as these systems tackle increasingly complex challenges. The next frontier isn’t merely about solving competition problems faster, but about sustained reasoning that mirrors how humans actually work, iterating over ideas for months, pursuing dead ends, backtracking, and eventually arriving at genuine breakthroughs.

Takeaways: The 2025 IMO results suggest a promising transition from confident hallucination to appropriate uncertainty (epistemic awareness). Within the next few years, we’ll likely see models tackling far harder research problems, maintaining context over weeks of exploration, and perhaps even making inroads into problems that have stumped humanity’s brightest minds. The intelligence revolution won’t just be about speed; it will be about self-awareness, persistence, and the kind of prolonged contemplation that transforms fields of knowledge entirely.

JOOST

Visible and invisible AI workforce change

Two fresh sources published this week offer the clearest picture yet of how generative AI is reshaping work. A Microsoft-backed study of 200,000 Copilot conversations maps which work tasks people delegate to AI, and the researchers convert these patterns into an “AI applicability score” for every US occupation. Information gathering and writing top the list of tasks. Sales, admin and programming roles show the highest overlap with what AI can do.
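To make the idea of an occupation-level score concrete, here is a simplified, hypothetical aggregation: each work activity carries an importance weight, a coverage share in AI conversations and a completion rate, and the score is their weighted average. The study’s actual definition may combine different measures; the field names, occupations and numbers are invented for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Activity:
    name: str
    weight: float      # hypothetical importance of the activity to the job
    coverage: float    # hypothetical share of the activity seen in AI chats (0-1)
    completion: float  # hypothetical share of those chats judged successful (0-1)


def applicability_score(activities: List[Activity]) -> float:
    """Importance-weighted average of coverage x completion across activities."""
    total_weight = sum(a.weight for a in activities)
    if total_weight == 0:
        return 0.0
    return sum(a.weight * a.coverage * a.completion for a in activities) / total_weight


writer = [
    Activity("gather information", 0.5, 0.9, 0.8),
    Activity("draft text", 0.5, 0.8, 0.7),
]
crane_operator = [
    Activity("operate crane", 0.8, 0.0, 0.0),
    Activity("log inspections", 0.2, 0.6, 0.8),
]

print(round(applicability_score(writer), 2))          # 0.64
print(round(applicability_score(crane_operator), 2))  # 0.1
```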

Meanwhile, a Gizmodo investigation exposes the hidden workforce that keeps these models running. The piece documents the annotation, logistics and moderation work performed by low-paid contractors. One interviewee calls the sector “a new era in forced labour”.

The academic study emphasises augmentation over replacement. Most user requests still treat Copilot as an assistant, not a substitute. But the Gizmodo report quotes executives planning cuts of up to 40 percent. Their reasoning? “AI doesn’t go on strike.” This reveals a disconnect between research caution and boardroom plans.

One finding from the Copilot data challenges conventional wisdom: wages and education levels correlate only weakly with AI exposure. Neither high pay nor credentials buys protection. This suggests we need universal retraining budgets, not narrow coding bootcamps.

Labour advocates are pushing for recognition of data annotation as formal employment. They want minimum standards and proper contracts. This would close the loophole that keeps AI’s human infrastructure invisible on corporate reports.

But hard questions remain. Will investors stomach slower rollouts for better labour protections? Can regulators even find, let alone monitor, the sprawling data-labelling networks spanning continents? US jobs data already shows accelerating cuts, with analysts drawing direct lines to AI deployment. Are we watching the first tremors before the earthquake?

Takeaways: The latest studies suggest that AI touches every occupation, with the greatest overlap clustering in information and communication roles. Corporate boards are converting early productivity gains into headcount cuts at breakneck speed, creating a widening gap between C-suite promises and workplace reality. Recognising annotation work as formal employment would drag AI’s hidden human infrastructure into the light, forcing companies to account for these workers on their books. The next few quarters will reveal whether anything can slow the deployment rush: regulatory pressure, investor concerns or worker organisation. The race between AI capability and labour adaptation has begun.

EXO

Data centre dollars prop up the US economy

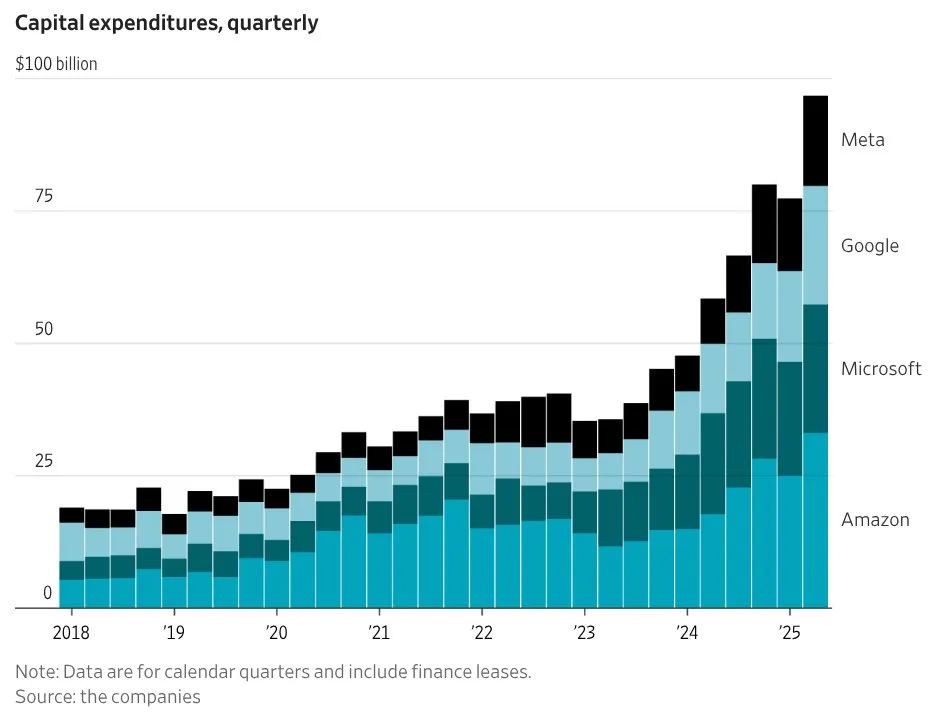

This chart shows the staggering scale of AI data centre infrastructure investment, which is now contributing more to US economic growth than consumer spending!

What started as quarterly spending of around $15 billion in 2018 has exploded to nearly $100 billion in 2025. Amazon leads with a $100 billion annual commitment, whilst Microsoft and Google each plan $75–80 billion. According to recent analysis, AI capital expenditure may already represent 2% of US GDP, potentially adding 0.7 percentage points to growth in 2025.
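A quick back-of-the-envelope check on these figures, taking the chart’s approximate quarterly values at face value and treating 2018 to 2025 as seven years of compounding. The US GDP figure of roughly $30 trillion is an assumption, not taken from the chart.

```python
quarterly_2018 = 15e9    # approx. quarterly spend in 2018, read from the chart
quarterly_2025 = 100e9   # approx. quarterly spend in 2025, read from the chart
years = 2025 - 2018      # seven years of compounding

multiple = quarterly_2025 / quarterly_2018
cagr = multiple ** (1 / years) - 1   # compound annual growth rate
annual_run_rate = quarterly_2025 * 4

US_GDP = 30e12  # ASSUMPTION: rough US nominal GDP, about $30 trillion

print(f"{multiple:.1f}x growth, about {cagr:.0%} a year")
print(f"annualised run rate ${annual_run_rate / 1e9:.0f}B, about {annual_run_rate / US_GDP:.1%} of GDP")
```

On these inputs the plotted spending annualises to roughly 1.3% of GDP, so the 2% estimate presumably rests on a broader measure of AI capital expenditure.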

This isn’t just another tech bubble; it’s acting as a massive private-sector stimulus programme. Without this infrastructure boom, the US might have faced a 2.1% GDP contraction in Q1. We’re witnessing spending that, as a percentage of GDP, approaches 19th-century railroad investment. But unlike railways that lasted a century, these data centres house rapidly depreciating technology: GPUs become obsolete in years, not decades. Meanwhile, this capital reallocation is starving other sectors. Venture capitalists are funding almost exclusively AI projects, traditional infrastructure projects are struggling for investment, and cloud companies are laying off staff whilst pouring billions into GPU clusters.
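The depreciation point is easy to quantify with straight-line accounting. The $100 billion outlay and the 5- and 50-year useful lives below are illustrative assumptions, not reported figures; they show the same investment being written off ten times faster when it sits in GPU servers rather than railway track.

```python
def straight_line_annual(capex: float, useful_life_years: int) -> float:
    """Annual depreciation charge under straight-line accounting."""
    return capex / useful_life_years


capex = 100e9  # hypothetical: $100B of investment
for label, life in [("GPU servers", 5), ("railway track", 50)]:  # assumed lifetimes
    charge = straight_line_annual(capex, life)
    print(f"{label}: ${charge / 1e9:.0f}B written off each year")
```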

Takeaways: We’re living through a historic moment where private companies are essentially running an infrastructure programme that’s keeping the US economy afloat. The concentration of compute power in a handful of companies echoes the railroad monopolies of the Gilded Age. Whether this spending proves justified or becomes the most expensive bet in corporate history will define the next decade of technological and economic development.

Weekly news roundup

This week saw major funding rounds and infrastructure investments signal the AI industry’s continued expansion, whilst regulatory frameworks and security concerns highlight the growing need for responsible AI deployment across sectors.

AI business news

- OpenAI raises another funding deal, from Dragoneer, Blackstone and more (Shows continued investor confidence in leading AI companies and the massive capital requirements for developing frontier models.)

- Google rolls out Gemini Deep Think AI, a reasoning model that tests multiple ideas in parallel (Demonstrates the shift towards more sophisticated reasoning capabilities in AI models, crucial for complex problem-solving applications.)

- Tim Cook says Apple is ‘very open’ to AI acquisitions (Signals Apple’s strategy to accelerate AI development through acquisitions rather than solely internal R&D.)

- Amazon to pay New York Times at least $20 million a year in AI deal (Illustrates the emerging business model for media companies licensing content to AI developers.)

- ChatGPT’s study mode is here. It won’t fix education’s AI problems (Highlights the challenges of integrating AI tools into education whilst maintaining academic integrity.)

AI governance news

- Trump may not replace Biden-era AI rule (Suggests potential continuity in US AI policy regardless of political changes, important for long-term planning.)

- Meta faces Italian competition investigation over WhatsApp AI chatbot (Shows how European regulators are scrutinising AI integration in dominant platforms for antitrust concerns.)

- Voice actors push back as AI threatens dubbing industry (Illustrates the ongoing tension between AI capabilities and creative industry employment.)

- Enterprises neglect AI security – and attackers have noticed (Warns of the security vulnerabilities emerging as companies rush to deploy AI without proper safeguards.)

- EU law for GenAI comes into force this week (Marks a major milestone in AI regulation that will affect global AI development and deployment strategies.)

AI research news

- AlphaGo moment for model architecture discovery (Suggests a breakthrough in automated AI architecture design, potentially accelerating model improvement.)

- Persona vectors: monitoring and controlling character traits in language models (Advances understanding of how to control AI behaviour, crucial for safe and reliable AI systems.)

- AlphaEarth Foundations helps map our planet in unprecedented detail (Demonstrates AI’s potential for environmental monitoring and climate change research.)

- Where to show demos in your prompt: a positional bias of in-context learning (Provides practical insights for optimising prompt engineering strategies.)

- A survey of self-evolving agents: on path to artificial super intelligence (Explores the frontier research area of AI systems that can improve themselves autonomously.)

AI hardware news

- OpenAI to launch AI data centre in Norway, its first in Europe (Shows geographic expansion of AI infrastructure and considerations for data sovereignty.)

- Nvidia says its chips have no ‘backdoors’ after China flags H20 security concerns (Highlights geopolitical tensions affecting the AI hardware supply chain.)

- SK Hynix surpasses Samsung as top memory maker for first time (Reflects the impact of AI demand on memory chip markets and supply chain dynamics.)

- Tesla signs $16.5B chip manufacturing contract with Samsung (Shows the massive scale of custom chip investments for AI and autonomous vehicles.)

- Meta to spend up to $72B on AI infrastructure in 2025 as compute arms race escalates (Demonstrates the unprecedented capital requirements for competing in frontier AI development.)