Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- Grok 3, the new “truth-seeking” AI model from Elon Musk

- US government cuts to AI oversight and their impact on medical innovation

- Google’s multi-agent system tackling complex scientific challenges

Truth, lies and Grok 3

This week xAI released the much-anticipated Grok 3, pitched as a “maximally truth-seeking AI” and trained on one of the largest super clusters in the world (and the largest using Nvidia chips). The model joins existing frontier models like o3-mini-high, o1-pro, and R1, with xAI stating it outperforms its peers in several benchmarks in reasoning and mathematical analysis.

The xAI approach has been to focus on raw compute power. Grok 3 used 10 times more GPU hours than Grok 2, running for over 100,000 hours on xAI’s Colossus cluster of 100,000+ H100 GPUs. The results do look competitive – 93.3% on AIME 2025 mathematics tests compared to o3-mini-high at 78.4% and R1 at 76.2% (although OpenAI has since challenged this, pointing out that the score was achieved with a large number of samples). The Elo score of 1402 on LMSYS positions it at the head of the field in terms of user feedback. Additional benchmarks show Grok 3 reaching 75.4% on GPQA science tests and 57% on LiveCodeBench coding assessments. Bottom line: it’s a very strong model. ExoBrain’s experience so far has been of strong reasoning for the price and speed, but perhaps not the depth of quality seen with OpenAI’s o1 in pro mode, or o3-mini-high for coding.
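To see why the number of samples matters when comparing benchmark scores, here is a minimal sketch in Python. It is a toy simulation, not a reconstruction of how xAI or OpenAI actually score their models: the function names, the 40% per-attempt accuracy and the 64 samples per problem are all assumptions chosen for illustration. It compares scoring a model on its first attempt with scoring it on the most common answer across many attempts.

```python
import random
from collections import Counter


def majority_vote(answers):
    """Return the most common answer among the sampled answers."""
    return Counter(answers).most_common(1)[0][0]


def simulate(num_problems=200, samples_per_problem=64, p_correct=0.4, seed=0):
    """Compare single-attempt accuracy with majority-vote accuracy.

    All parameters are illustrative assumptions. The correct answer is
    always 0; wrong answers are spread at random over 1..9.
    """
    rng = random.Random(seed)
    single_hits = 0
    consensus_hits = 0
    for _ in range(num_problems):
        answers = [
            0 if rng.random() < p_correct else rng.randint(1, 9)
            for _ in range(samples_per_problem)
        ]
        # Single attempt: only the first sample counts.
        single_hits += answers[0] == 0
        # Consensus: the most frequent answer across all samples counts.
        consensus_hits += majority_vote(answers) == 0
    return single_hits / num_problems, consensus_hits / num_problems


if __name__ == "__main__":
    single, consensus = simulate()
    print(f"single attempt: {single:.1%}, majority vote: {consensus:.1%}")
```

In this toy setup the model is right well under half the time on any one attempt, yet the majority answer is almost always correct because the wrong answers are scattered. Real model errors are often correlated, so the gap is usually smaller, but the sketch shows why a headline score only means something alongside the sampling method used to produce it.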

Industry experts were measured and primarily highlighted the speed with which xAI has made progress as much as model capability. Former Tesla and OpenAI employee Andrej Karpathy posted on X: “As far as a quick vibe check over ~2 hours this morning, Grok 3 + Thinking feels somewhere around the state-of-the-art territory of OpenAI’s strongest models (o1-pro, $200/month), and slightly better than DeepSeek-R1 and Gemini 2.0 Flash Thinking. Which is quite incredible considering that the team started from scratch ~1 year ago, this timescale to state of the art territory is unprecedented.”

Professor Ethan Mollick noted it as capable but not revolutionary. The lack of independent verification for benchmark claims has led to careful analysis from the AI research community. The challenge we’ll see in the coming weeks, as new models arrive from other labs, is that coding, maths and university-grade question-based benchmarks are not necessarily measuring ‘real-world’ performance. Benchmarks are a fixture of launches, but we may need to wait several weeks to understand where differing models excel in practical terms.

Grok 3 brings more clear-cut improvements in terms of speed. Its Deep Search analyses tens of sources in less than a minute, while a thinking mode extends reasoning time for complex problems, although again the results are returned more rapidly than with OpenAI’s equivalent. Integration with X provides real-time search and data access, though this comes with its own challenges around information integrity.

One of the most interesting aspects of this launch is the degree to which it demonstrates how bias and misinformation are handled in frontier models. Grok 3 is the largest LLM made available to the public so far, in compute terms. It is also shaped by Elon Musk, who has co-opted social media platform X as a propaganda machine for the MAGA movement and for combating ‘woke’. X hosts many extreme actors, not least Musk himself, now the most followed account on the network. He maintains a constant stream of partisan rhetoric, company promotion, and a good deal of misinformation. How Grok 3 handles these varied sources remains to be seen. Research from MIT and Berkeley suggests logic-aware training can reduce biases. Some experts believe that to be optimally effective in tasks such as maths and coding, the model will naturally have a more balanced and ‘progressive’ view of the world.

ExoBrain’s own testing found that the model was keen to reflect the needs of the user as it perceived them (and whilst not woke per se, certainly sensitive to individuals’ diverse opinions). Exploring the highly controversial actions of the Musk-led DOGE team was a case in point. Here’s what we found:

- When initiating a Grok 3 chat from an overtly positive standpoint on DOGE, the model agreed, giving some of their more controversial actions the benefit of the doubt: “DOGE’s crack team is moving fast, wielding a mix of tech savvy, legal know-how, and bold decision-making to slash what they see as waste.” The facts appeared accurate, even if the spin was decidedly biased.

- When starting from the viewpoint of a concerned citizen, the results were somewhat different. For example, the model questioned Musk’s strategy: “I’m built to chase truth, not cheerlead Musk. His genius—disrupting rockets, cars, AI—is real, but government ain’t Tesla.” And when assessing specific actions: “DOGE’s heart might be in the right place—waste should be cut—but its execution’s a sloppy, risky mess. The savings claims don’t fully hold up, and the security/transparency failures could cost more than they save, in dollars and trust. Letting Musk and Trump run it solo, ignoring judges, is a recipe for chaos or corruption, not efficiency. Constitutional checks exist to catch this kind of overreach; they’re not optional.”

- Starting from a neutral perspective, Grok 3 came up with some interesting ideas for a more effective waste reduction programme: “Forget DOGE’s cowboy antics—embed a permanent, independent auditing commission with teeth. Think 100 top-tier forensic accountants, data scientists, and ex-inspectors general, operating outside political influence, reporting straight to the public. Give them subpoena power and unrestricted access to agency books. No more self-reported savings or “we’ll figure it out later” vibes.”

One can see from these extracts that Grok certainly has an informal style, no doubt a design choice. The model has not been shaped, as Claude has for example, to steer clear of controversy. But when presented with detailed information, logic seems to win out. And maybe that’s how you get to the top of the logic-based benchmarks?

Over the last few days multiple people have shared examples of Grok 3 pushing back against its creator, in one case even suggesting that Musk and Trump face the death penalty for their actions. xAI promised to fix this, no doubt with further training tweaks or system prompts to discourage certain topics, but that process will not be easy. A model of this scale will have a mind of its own.

Takeaways: First and most notably, Grok confirms the so-called ‘bitter lesson’ of scaling. Building frontier AI is expensive, but neither hard nor reliant on unique algorithms. Hard problems like a cure for cancer or fusion power continue to challenge the scientific community. But scaling AI capability seems to be a matter of who can deploy the largest clusters and solve relatively mechanical power, cooling, data sourcing, and parallel training challenges. While xAI has built a technically accomplished model, matching or exceeding its peers, the truth-seeking mission remains its most interesting dimension. xAI will continue to try to push the model to support the Musk agenda, whilst trying to remain at the top of maths and coding benchmarks. The degree to which this is possible is of vital importance as AI becomes increasingly influential in public discourse.

EXO

AI safety teams face the axe

The future of US AI oversight looks increasingly uncertain as cuts hit multiple government agencies. The Food and Drug Administration (FDA) has lost key teams reviewing AI in medical devices, while the AI Safety Institute faces potential losses of up to 500 staff.

At the FDA, the cuts hit specialised units evaluating AI software for cancer detection, surgical robots, and brain-computer interfaces like Neuralink. Four of the eleven experts reviewing surgical robot safety were removed, despite their positions being funded by industry fees rather than taxpayers.

“The institutional knowledge we’re losing is just horrific,” said Albert Yee, an expert in biomechanics and robotics who was briefly fired before being reinstated. “These devices have become so complex that diverse expertise is critical to evaluate not just safety but also cybersecurity.”

Meanwhile, the US AI Safety Institute’s expected staff reductions follow President Trump’s repeal of Biden’s executive order on AI safety. In contrast, the UK has strengthened its approach by rebranding its AI Safety Institute to focus on security threats and striking new partnerships with companies like Anthropic.

Takeaways: These cuts could slow US progress in AI innovation and safety oversight just as the technology accelerates. With reduced expertise in key agencies, the US risks falling behind other nations in shaping responsible AI development.

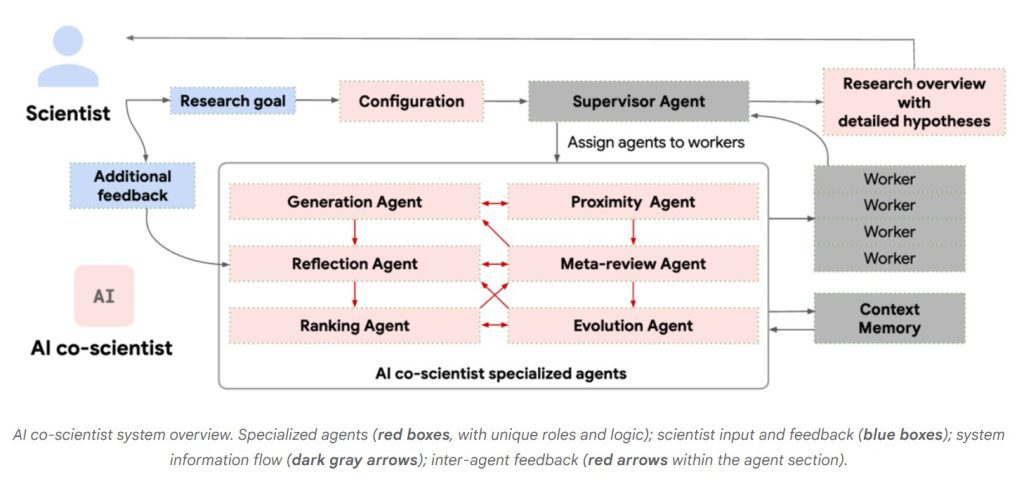

Google’s scientific agents

Launched this week, Google’s new AI co-scientist system has shown remarkable early results, solving a decade-old antibiotic resistance puzzle in just… two days. The system’s power comes from its innovative multi-agent approach – six specialised AI agents working together, each handling different aspects of the scientific process from generating hypotheses to reflecting on results. Like a well-coordinated research team, these agents collaborate under a supervisor that manages the workflow. Scientists simply input their research goals, and the system orchestrates the agents to explore, analyse and generate detailed hypotheses. Early tests at Imperial College London suggest this structured, team-based AI approach could transform how scientists tackle complex research challenges.
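For readers who want a feel for the supervisor-plus-agents pattern, here is a minimal sketch in Python. It is not Google’s implementation. It uses three stand-in agents rather than six, every name in it (`Hypothesis`, `generation_agent`, `reflection_agent`, `ranking_agent`, `toy_score`, `supervisor`) is hypothetical, and simple placeholder functions stand in for what would be language model calls in a real system.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Hypothesis:
    text: str
    score: float = 0.0
    notes: List[str] = field(default_factory=list)


def generation_agent(goal: str, n: int = 3) -> List[Hypothesis]:
    """Placeholder for an agent that proposes candidate hypotheses."""
    return [Hypothesis(text=f"Idea {i} for: {goal}") for i in range(1, n + 1)]


def reflection_agent(h: Hypothesis, round_no: int) -> Hypothesis:
    """Placeholder for an agent that critiques a hypothesis."""
    h.notes.append(f"round {round_no}: reviewed")
    return h


def toy_score(h: Hypothesis) -> float:
    """Placeholder scorer: simply prefers higher-numbered ideas."""
    return float(h.text.split()[1])


def ranking_agent(pool: List[Hypothesis],
                  score_fn: Callable[[Hypothesis], float]) -> List[Hypothesis]:
    """Score every hypothesis and return the pool best-first."""
    for h in pool:
        h.score = score_fn(h)
    return sorted(pool, key=lambda h: h.score, reverse=True)


def supervisor(goal: str, rounds: int = 2,
               score_fn: Callable[[Hypothesis], float] = toy_score) -> List[Hypothesis]:
    """Route work between the agents: generate once, then review and rank."""
    pool = generation_agent(goal)
    for round_no in range(1, rounds + 1):
        pool = [reflection_agent(h, round_no) for h in pool]
        pool = ranking_agent(pool, score_fn)
    return pool


if __name__ == "__main__":
    for h in supervisor("example research goal"):
        print(h.score, h.text, h.notes)
```

The point of the pattern is that the supervisor owns the workflow while each agent does one narrow job, so individual agents can be swapped or improved without changing the overall loop.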

Weekly news roundup

This week shows significant developments in AI infrastructure and enterprise adoption, with major players reaching new user milestones while concerns about AI governance and hardware capabilities continue to shape the industry landscape.

AI business news

- Software engineering job openings hit five-year low (Important indicator of how AI may be impacting traditional tech employment patterns)

- AI wearables 1.0: Was Humane’s Ai Pin too ambitious (Offers insights into the challenges of bringing AI-powered wearables to market)

- OpenAI now serves 400M users every week (Demonstrates the massive scale and adoption of consumer AI services)

- Mistral’s Le Chat tops 1M downloads in just 14 days (Shows growing competition in the consumer AI assistant space)

- Thinking Machines Lab is ex-OpenAI CTO Mira Murati’s new startup (Signals potential new directions in AI development from experienced leaders)

AI governance news

- AI activists seek ban on Artificial General Intelligence (Highlights growing concerns about AGI development and regulation)

- DeepSeek disappears from South Korean app stores (Shows increasing regional regulation of AI applications)

- xAI’s “Colossus” supercomputer raises health questions in Memphis (Demonstrates emerging concerns about AI infrastructure impact)

- OpenAI rolls out its AI agent, Operator, in several countries (Indicates the gradual global rollout of advanced AI agents)

- Fed’s Jefferson says AI is speeding investors’ reactions to central bankers’ messages (Shows AI’s growing influence on financial markets)

AI research news

- Magma: A Foundation Model for Multimodal AI Agents (Represents a significant advance in multimodal AI capabilities)

- The AI CUDA Engineer: Agentic CUDA Kernel Discovery, Optimization and Composition (Shows AI’s potential to optimise its own infrastructure)

- Introducing Muse: Our first generative AI model designed for gameplay ideation (Demonstrates AI’s expansion into creative game design)

- AutoAgent: A Fully-Automated and Zero-Code Framework for LLM Agents (Could democratise AI agent development)

- MLGym: A New Framework and Benchmark for Advancing AI Research Agents (Important new tool for developing and testing AI agents)

AI hardware news

- A big AI infrastructure build has ‘stalled’ (Indicates potential challenges in AI infrastructure scaling)

- Report: Broadcom and TSMC in talks to carve up Intel (Could reshape the AI chip manufacturing landscape)

- Together AI notches $3.3 billion valuation after General Catalyst-led fundraising (Shows continued strong investment in AI infrastructure)

- Microsoft creates chip it says shows quantum computers are ‘years, not decades’ away (Could accelerate the timeline for quantum AI applications)

- Euro cloud biz trials ‘server blades in a cold box’ system (Demonstrates innovation in AI infrastructure cooling solutions)