Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- Why France’s AI summit reveals deep divisions in global governance.

- How Rogo’s fine-tuned models are benefiting banking and investment management.

- Where AI adoption is highest according to Claude user data.

A country of geniuses in a data centre

The Sommet pour l’action sur l’IA played out in Paris this week as the latest in a series of international events that started with the Bletchley Park Safety Summit in 2023. While 60 nations signed the summit’s declaration on ethical AI development, the US and UK’s refusal to join highlighted the challenges ahead for international cooperation. The glitzy two-day event, co-chaired by French President Emmanuel Macron and Indian Prime Minister Narendra Modi, brought together over 100 nations and 1,000 delegates to address AI governance. US Vice President J.D. Vance argued that European regulatory efforts could slow AI innovation, while European leaders talked of balancing progress with ethical safeguards. The general assessment of the event, much like the UK’s gathering, was that global meetups are better than nothing, but the world is far from on top of the challenges AI will bring.

France announced a multi-billion-euro investment programme, including two new AI supercomputers and funding for 600 AI startups. President Macron framed this as a “wake-up call for European strategy,” acknowledging the region’s need to catch up with US and Chinese capabilities; European firms secured only 11% of global AI venture funding in 2024. The newly announced Current AI initiative, backed by €400 million in public-private funding, appears to be Europe’s bid to have it both ways – advancing AI development while maintaining its regulatory stance. But with US firms racing ahead with hundreds of billions of dollars of compute investments, Europe’s commitments look limited.

The US Vice President spoke to a packed hall, dismissing AI safety concerns as a smokescreen for regulation and arguing that America would remain the “gold standard” for AI. His message that the US “possesses all components across the AI stack” and will “win by building” drew praise from bro-ligarchs and effective accelerationists on X. Of course, Vance omitted to mention the US’s total reliance on Taiwan for AI chips, and the recent presidential rhetoric regarding tariffs on these existentially vital imports. The speech painted regulation and social media curbs as an attack on free speech and innovation, repeating the tired trope that “massive incumbents” were weaponizing safety concerns to maintain market position. While Vance acknowledged AI’s impact on jobs, he rejected “replacement” narratives on grounds of faith, instead framing AI as a productivity enhancer that would drive “pro-worker growth.” Ultimately, much like the rest of the conference, the message was self-serving and lacked any trace of the AI expertise or political subtlety needed to meaningfully contribute to the work ahead.

Perhaps the most compelling motif from the week came from Anthropic’s CEO Dario Amodei. Amodei characterised the summit as a “missed opportunity,” challenging the international community’s slow pace toward effective AI governance. He suggested that at some point in the next two years, somewhere on Earth, a data centre will house enough PhD-level brains that it will equate to an entire “country of geniuses”. To imagine that such an emerging population of minds needs only talk to remain ethical, faith to ensure they will be pro-worker, hope that they will not be misused, and free-speech absolutism to ensure that they are aligned, seems dangerously naive.

Takeaways: The summit’s most promising development was the emergence of some practical, technical governance through initiatives like ROOST (Robust Open Online Safety Tools). At ExoBrain, we believe that deeply embedding safety into web and AI infrastructure makes more sense than endless regulatory debates, especially as models, training techniques, and hardware become more powerful and proliferate uncontrollably. The ROOST approach introduces free, open-source tools for content moderation and safety checks that work at the protocol level. This technical foundation includes API-based moderation using large language models, cross-platform safety standards, and blockchain-based audit trails. France’s new state-backed Mistral-Next LLM shows how this might work, featuring built-in constitutional constraints. The Current AI initiative takes this further with its Public Interest AI Platform, implementing cryptographic access controls and real-time compliance checks. These engineering solutions could create useful standards for managed AI deployment, moving beyond policy discussions to practical safeguards that can keep pace with rapid technological change. But to be anything other than islands of calm, they need to be developed and embraced on a global scale. Sadly, the high-powered political delegates still fail to grasp this need for urgent technical collaboration. Let’s hope they do so before hundreds of billions of AI agents are deployed across countless independent systems, each operating under different rules and standards. The window for establishing unified technical controls is narrowing as AI development accelerates and those countries of geniuses heave into view. While summits and declarations have their place, the real work of “building” secure, standardised AI infrastructure needs to happen at the engineering level, and it needs to happen now.

EXO

AI fine-tunes financial services

A wave of AI tools is reshaping finance, starting with the tedious work that keeps junior bankers at their desks until midnight. Rogo, a new AI platform covered in an OpenAI case study published this week, has automated $4.7 billion worth of analyst work since its 2024 launch, saving users 10+ hours a week.

By fine-tuning OpenAI’s latest models with financial data from S&P Global, Crunchbase, and FactSet, Rogo has grown to serve over 5,000 bankers. The platform uses different AI models for different tasks, matching the right tool to each job. GPT-4o handles the analysis of earnings calls and market trends. The fast o1-mini model processes millions of documents to find relevant information quickly. For the really challenging work like valuations and deal structuring, they use the more powerful o1 model. Looking ahead, Rogo has hired Joseph Kim from Google’s Gemini team to work on predicting M&A success rates. Early tests on 10,000 past deals show 89% accuracy in forecasting regulatory issues two months before they’re announced.
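The task-to-model matching described above can be pictured as a simple lookup table. The sketch below is purely illustrative and is not Rogo's implementation: the model names are the ones cited in the case study, while the task category labels, the `MODEL_BY_TASK` table, and the `pick_model` function are hypothetical names invented for this example.

```python
# Illustrative sketch only: task categories are hypothetical labels;
# the model names are those mentioned in the text above.
MODEL_BY_TASK = {
    "earnings_call_analysis": "gpt-4o",   # analysis of earnings calls
    "market_trend_analysis": "gpt-4o",    # analysis of market trends
    "document_search": "o1-mini",         # fast retrieval over many documents
    "valuation": "o1",                    # harder reasoning work
    "deal_structuring": "o1",
}


def pick_model(task: str) -> str:
    """Return the model name assigned to a task category."""
    try:
        return MODEL_BY_TASK[task]
    except KeyError:
        # Fail loudly rather than silently falling back to a default model.
        raise ValueError(f"no model configured for task: {task!r}") from None


if __name__ == "__main__":
    for task in ("document_search", "valuation"):
        print(task, "->", pick_model(task))
```

The design point is the trade-off the paragraph describes: cheaper, faster models take the high-volume work, and the most capable model is reserved for the tasks where reasoning quality matters most.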

Meanwhile, Microsoft has identified wealth management as a sector ripe for disruption. “Portfolio construction can be handled by conventional AI,” says Martin Moeller, Microsoft’s head of AI for financial services in EMEA. UBS appears to agree: CEO Sergio Ermotti recently highlighted AI’s potential to increase productivity and simplify jobs.

Behind the scenes, AI is handling ever more critical processes. Credit scoring increasingly relies on AI-driven alternative credit assessments that leverage non-traditional data points. Risk management systems use AI to inspect millions of transactions in real time, with one bank reporting that it catches 92% of suspicious activities before any losses occur.

The next phase will be “agentic AI” and potentially systems that make independent investment decisions. For now, though, the focus remains on augmenting rather than replacing human judgment. As one private equity business noted, they’re closing deals 45% faster, but still need human expertise for the final decisions.

Takeaways: The financial sector’s embrace of AI extends far beyond chatbots and robo-advisors. Its real impact lies in areas customers rarely see – from using alternative data for credit scoring to automating complex regulatory compliance. While challenges around regulation and cybersecurity persist, banks can’t afford to wait. The most telling sign comes from major institutions building custom AI workflows and starting to adopt in-house designed agents rather than just buying off-the-shelf solutions. This suggests they see AI not just as a cost-saving tool, but as something that could expand their workforce and redraw competitive lines. In an industry where information advantages typically last minutes or seconds, the ability to analyse and act on data faster than rivals could prove more valuable than traditional advantages like size or brand.

Anthropic’s new economic index

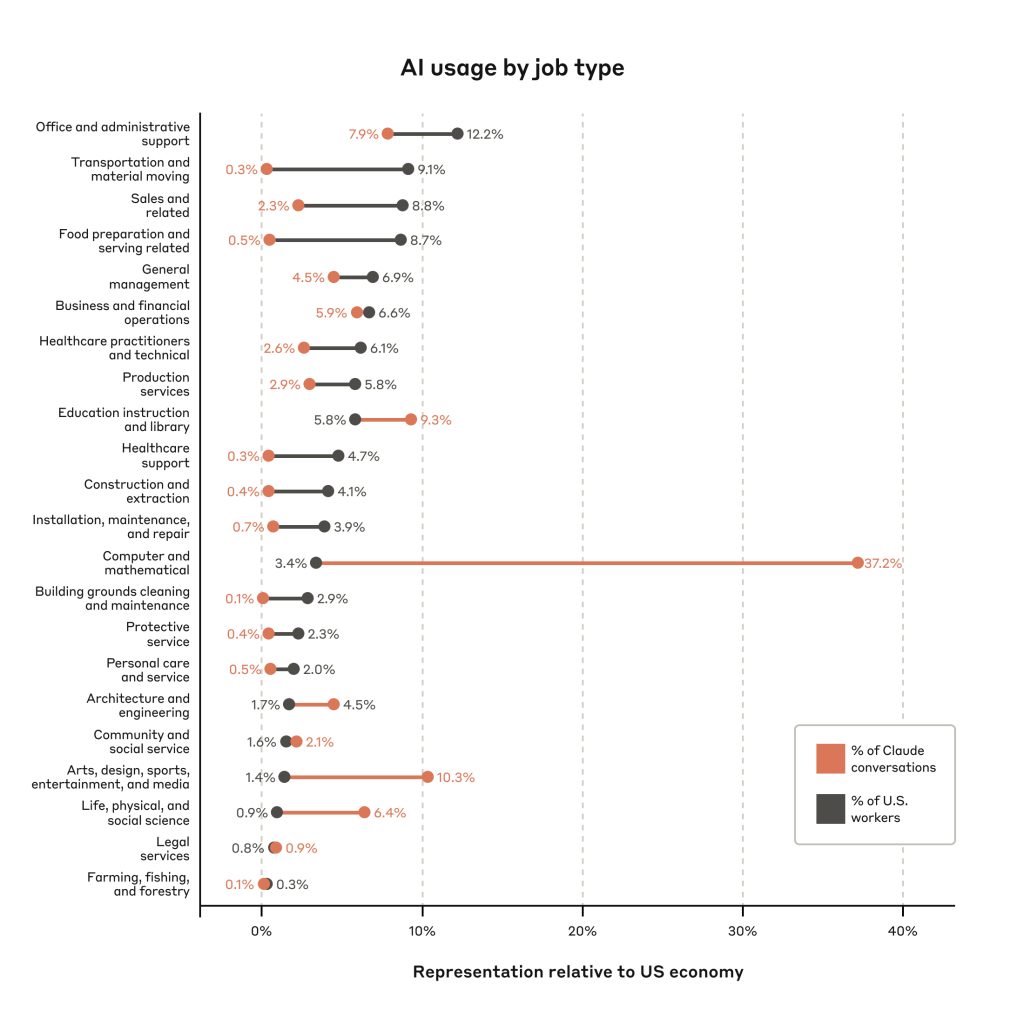

This week Anthropic launched their Economic Index, looking at how people are using Claude. This chart shows how AI adoption varies significantly across different job sectors compared to their representation in the US workforce. Computer and mathematical roles lead AI usage by a wide margin, accounting for 37.2% of Claude conversations despite representing only 3.4% of US workers. Interestingly, arts and media roles show the second-highest AI adoption at 10.3% of conversations versus 1.4% of the workforce, while physical jobs like transportation (0.3% vs 9.1%) and farming (0.1% vs 0.3%) show minimal AI usage. Office and administrative support represents the largest share of US workers at 12.2% but accounts for only 7.9% of AI interactions, suggesting potential room for growth in AI adoption in this sector.
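One way to compare these sectors on a single scale is to divide each sector's share of Claude conversations by its share of the US workforce. The short sketch below does that using only the percentages quoted above; the "representation ratio" metric and the function names are our own illustrative choices, not part of Anthropic's index. A ratio above 1 means a sector is over-represented in Claude usage relative to its size, and below 1 means under-represented.

```python
# Percentages quoted in the text: (share of Claude conversations, share of US workforce).
SHARES = {
    "Computer and mathematical": (37.2, 3.4),
    "Arts and media": (10.3, 1.4),
    "Office and administrative support": (7.9, 12.2),
    "Farming": (0.1, 0.3),
    "Transportation": (0.3, 9.1),
}


def representation_ratio(claude_share: float, workforce_share: float) -> float:
    """Share of Claude conversations divided by share of the workforce."""
    return claude_share / workforce_share


def ranked_ratios(shares: dict) -> list:
    """Return (sector, ratio) pairs sorted from most to least over-represented."""
    ratios = {
        sector: representation_ratio(claude, workforce)
        for sector, (claude, workforce) in shares.items()
    }
    return sorted(ratios.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for sector, ratio in ranked_ratios(SHARES):
        print(f"{sector}: {ratio:.2f}x")
```

On these figures, computer and mathematical roles come out at roughly eleven times their workforce share, while transportation sits at a few hundredths of its share.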

Weekly news roundup

This week’s developments show a significant shift towards in-house AI chip development by major players, increased focus on AI governance and security, and breakthroughs in efficient AI model scaling and deployment.

AI business news

- UK to explore use of Anthropic’s AI chatbot Claude for public services (Signals growing acceptance of AI assistants in government operations and public sector digital transformation.)

- OpenAI postpones its o3 AI model in favor of a ‘unified’ next-gen release (Important strategic shift that could affect the entire AI development landscape.)

- Adobe’s Sora-rivaling AI video generator is now available for everyone (Democratises access to advanced AI video generation tools for creators and businesses.)

- Glean Technologies jumps into no-code agentic AI development with Glean Agents (Makes AI agent development accessible to non-technical users.)

- OpenAI board rejects Musk’s $97.4 billion offer (Major development in the ongoing power struggle for control of leading AI companies.)

AI governance news

- UK drops ‘safety’ from its AI body, now called AI Security Institute, inks MOU with Anthropic (Reflects shifting priorities in national AI governance approaches.)

- AI risks could spark an ‘Osama bin Laden scenario,’ former Google boss fears (Highlights growing concerns about AI misuse from tech industry leaders.)

- Workday unveils AI agent workforce management system (Shows how AI is reshaping workforce management and HR practices.)

- AI-generated content raises risks of more bank runs, UK study shows (Reveals potential economic risks of AI-generated misinformation.)

- Thomson Reuters wins first major AI copyright case in the US (Sets important legal precedent for AI training data usage.)

AI research news

- Researchers led by University of Washington Nobel winner achieve a scientific breakthrough (Major advancement in AI’s application to scientific research.)

- Scaling up test-time compute with latent reasoning: a recurrent depth approach (Introduces new methods for improving AI model efficiency.)

- NoLiMa: Long-context evaluation beyond literal matching (Advances in evaluating AI models’ understanding of extended contexts.)

- Can 1B LLM surpass 405B LLM? Rethinking compute-optimal test-time scaling (Questions conventional wisdom about model size and performance.)

- On the emergence of thinking in LLMs I: searching for the right intuition (Explores fundamental questions about AI cognition and reasoning.)

AI hardware news

- Dominion sees data center power demand almost double in Virginia (Highlights growing infrastructure challenges for AI deployment.)

- Data center tweaks could unlock 76 GW of new power capacity in the US (Potential solution to AI’s growing energy demands.)

- DeepSeek reportedly exploring in-house chip development (Another major AI player moving towards chip independence.)

- Report: Arm to build its own chips, and Meta is first in line to buy them (Major shift in chip industry dynamics affecting AI hardware supply.)

- OpenAI reportedly finalizing design for in-house AI chip ahead of TSMC fabrication (Strategic move towards vertical integration in AI infrastructure.)