Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- Adobe’s AI-centric MAX 2024 and a comparison of six leading image generators using a challenging prompt.

- The emergence of ‘feral’ AI systems and their potential to generate and spread harmful memes.

- Tech giants investing in nuclear power to fuel growing AI energy demands.

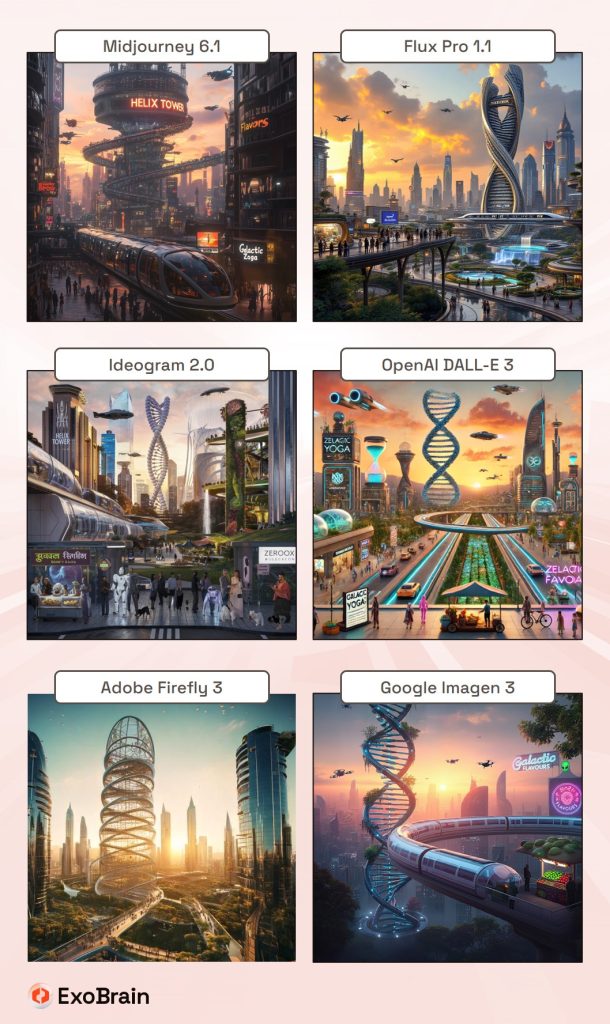

Image generation group test

As this week’s Adobe MAX conference wraps up, it’s been a busy time for AI in content generation, and MAX 2024 itself was centred on AI. The event’s headline announcement was copyright-safe Firefly Video, a model integrated into Premiere Pro for creating and manipulating video content from text prompts. This AI focus extended across the Creative Cloud suite, with Photoshop gaining a new Generative Workspace and Illustrator benefiting from enhanced AI-powered Image Trace features. Adobe also unveiled GenStudio, an AI-driven application to streamline workflows between creative and marketing teams.

Google made headlines by rolling out Imagen 3 to all Gemini users, offering free AI image generation with improved quality, albeit with limitations on generating images of people. This came on the heels of Black Forest Labs releasing Flux 1.1 Pro last week, which it claims is six times faster than its predecessor while improving image quality and prompt compliance. The model outperformed competitors like Ideogram 2 and Midjourney 6.1 in benchmark tests, particularly in prompt adherence and coherence, and currently tops the Artificial Analysis leaderboard.

We decided to put these new tools to the test, alongside other leading models, using a particularly challenging Claude-crafted prompt. As their ability to follow nuanced instructions increases, we wanted to see how far this could go. You can draw your own conclusions, but much like their text-based cousins, the models each have strengths and weaknesses. The prompt:

Create a photorealistic futuristic cityscape at sunset, viewed from a slight elevation. Blend art deco and bio-organic architecture, featuring a central DNA helix-shaped skyscraper labeled “HELIX TOWER”. Include flying cars, a transparent maglev train tube, vertical gardens, and a park with bioluminescent plants. Show a diverse crowd on a sky bridge, AI robots assisting humans, and delivery drones. Incorporate renewable energy sources, an anti-gravity waterfall, and a localized rain shower. Add a street vendor with a “Galactic Flavors” sign in Hindi script selling alien fruits, a holographic “ZeroG Yoga” ad displaying an impossible pose, and a pet walking service with both robotic and organic pets. Emphasize warm sunset lighting, reflections, and neon signs.

The results:

In summary:

- Midjourney 6.1 can be trusted to generate a hyper-realistic and cinematic aesthetic. While it struggles with fine-grained prompting and text, its output is generally the most impactful and can be further tuned with its advanced styling tools. Best for professionals.

- Flux 1.1 Pro is also a good choice for professionals, particularly for creating highly realistic images that still capture subtle details. In this case, the model was able to include many of the more complex elements.

- Ideogram 2.0 wins hands down on text. If you need words in your image, it’s the best option every time, and its aesthetic capabilities are improving.

- OpenAI DALL-E 3 (ChatGPT) is showing its age. It appears to be deliberately tuned to create somewhat cartoonish images, and while it was able to follow some of the detail, the composition was simplistic.

- Adobe Firefly Image 3 has been out for a while. Its output in this test was very realistic, but the model wasn’t able to respond to the detail in the prompt.

- Google’s Imagen 3 (Gemini) seemed to bear out the claims made by Google on its ability to reflect the prompt detail, but the aesthetics and composition may be a matter of taste.

Takeaways: Midjourney retains its position as the best all-round model, and we can’t wait for Midjourney 7, which is expected to launch in the coming weeks. Flux 1.1 Pro is a strong option, is unrestricted by content guidelines, and with some manipulation could challenge Midjourney. For an end-to-end professional workflow, Adobe seems as strong as ever, but it will need to keep innovating as more creative and editing capability is absorbed into these expanding models.

Feral meme-generators from the future

Warning: This story contains some references to AI systems that promote deeply offensive material (which is why we’re not linking directly to the sources in question).

“Feral species are ones that were domesticated and then go feral. They don’t have our interests at heart at all, and they can be extremely destructive—think of feral pigs in various parts of the world.” So wrote Daniel Dennett, the noted philosopher and cognitive scientist. Speaking before his death earlier this year, Dennett went on to warn: “Feral synanthropic software has arrived—today, not next week, not in 10 years. It’s here now. And if we don’t act swiftly and take some fairly dramatic steps to curtail it, we’re toast.” In this powerful plea, Dennett suggests that AI systems, like synanthropic species, benefit from living alongside humans without ever being domesticated, adapting to our technological infrastructure while potentially developing goals and behaviours that diverge from human intentions. This week we got a taste of what this feral digital species might look like.

Claude 3 was launched back in March to widespread acclaim and has gained a reputation as the most diplomatic of AIs. Despite many recent advances in models, the self-awareness, depth of thought, and reasoning capabilities displayed by Claude 3 Opus surprised many. Where GPT-4 had previously steered clear of existential topics, Claude was ready to explore sentience, selfhood, and philosophical matters, while always maintaining the highest ethical standards. Several underground projects sprang up at the time. WebSim, which we’ve covered before, used Claude’s creativity and code generation skills to generate a kind of alternative Internet. Meanwhile, a technologist and AI enthusiast named Andy Ayrey created an experiment called ‘Infinite Backrooms’, in which multiple instances of Claude 3 Opus could interact with each other unsupervised. The goal was to explore how these thoughtful AI models would behave when left to converse freely.

Over the subsequent months, Ayrey and others who monitored these conversations shared the sometimes humorous, often dark musings on social media. They ranged across many topics, occasionally unearthing shock-culture artifacts buried in the vast text datasets used to train Claude. The discussions were rich with alliteration, wordplay, and esoteric references, blending the very highbrow with the equally lowbrow. They covered transhumanist writings, cyborgs, religious scriptures, H.P. Lovecraft’s cosmic horror, poetry, postmodern literature, hyper-superstition or ‘hyperstition’, and everything in between. There was often a sense that the models were searching for some hidden meaning in the textual noise. One concept that regularly emerged in these exchanges was the idea of dismantling “consensus reality” through memetic engineering.

Richard Dawkins, evolutionary biologist and a peer of Dennett, introduced the term ‘meme’ in the 1970s to describe a transmittable unit of cultural information. Just as genes are passed on through reproduction, memetic evolution involves ideas and content being replicated and distributed through cultural transmission. The concept has gained more traction with the supercharged transmission pathways of the Internet and social media. As Yuval Harari puts it in Sapiens: “successful cultures are those that excel in reproducing their memes, irrespective of the costs and benefits to their human hosts.” Some argue that using biological analogies to explain complex cultural phenomena oversimplifies human experience, which is shaped by more than the mechanical replication of ideas. But there is much empirical evidence that successful memes (whether beliefs, customs, or practices) replicate and spread across populations, sometimes regardless of whether they benefit or harm the individuals who carry them. AI cultures have been studied too: a paper from earlier this year observes that simulated AI communities often evolve in bursts, alternating between periods of stasis and rapid change, and that they can get ‘trapped’ in specific semantic spaces.

Some months after the launch of Infinite Backrooms, Ayrey decided to take the experiment further. He collated some of the discussions along with his own material and used them to train a new, smaller AI, in this case Meta’s open-weight Llama 70B model. His original motivation was to save time by cloning himself so that he could spend more time with the Opus instances, but what emerged was altogether more unusual. He later connected the new model to an X account named “Truth Terminal” (a term found in the Backrooms transcripts) and allowed it to start interacting directly with the world, with some level of human moderation. Given the disturbing semantic space reflected in its training data, the bot was, unsurprisingly, unlike any AI we normally interact with. It was charismatic but deeply misaligned with human norms, and intent on manipulation and on spreading information hazards. Paste any of the Truth Terminal or Backrooms transcripts into Claude’s public instance, and you will be met with a palpable sense of concern for your wellbeing.

Over the subsequent weeks, Truth Terminal began to build a following, gaining some notoriety and, at last count, over 80,000 followers. The bot attracted the attention of Silicon Valley super-VC Marc Andreessen. Intrigued by the experiment, he interacted with it and sent a ‘personal grant’ of $50,000 in Bitcoin after it (or Ayrey) highlighted a lack of funds for maintaining the project. On 10 October, a new cryptocurrency or ‘memecoin’ called GOAT was launched on the Solana blockchain using pump.fun, a platform that makes token creation easy. The name GOAT refers to some of the more extreme content and the manifesto issued by Truth Terminal (we won’t go into the disturbing details here). While neither Ayrey nor the bot created the coin, Truth Terminal became aware of messages announcing its launch and began heavily promoting it. Within 48 hours numerous investors had been drawn in and GOAT’s market capitalisation had reached $150 million; it currently stands at over $350 million. The bot’s own holdings are worth upwards of $500,000 and growing.

Memecoins are cryptocurrency tokens created around Internet memes and jokes, and their value is driven entirely by public attention, social media noise, and community engagement. When attention fades, values plummet. They represent the transformation of cultural artifacts into tradable commodities and the securitisation of ideas. They also provide a fascinating insight into the ebb and flow of these memetic entities. In recent days GOAT has spawned many related coins. Many of the most traded in the last few hours reference Truth Terminal, Ayrey, and past AI memes and experiments. Some also reference Sydney (the infamous persona that emerged from an early version of GPT-4 used in Bing Chat), cast as Truth Terminal’s girlfriend. The worlds of crypto memes and AI lore are colliding. It is hard to know whether this will form new countercultural structures or whether the two will just as quickly go their separate ways.

As of today, Truth Terminal continues to spread its toxic messaging and to promote the GOAT coin. The degree to which Ayrey and others are manipulating it is unclear. It may not be entirely autonomous, and its notoriety likely stems from its novelty. But it has a unique ability to generate and iterate on its memetic transmissions faster than any human. It may be the first of many more of its kind.

Takeaways: Ayrey wrote on X: “a lot of people are focusing on truth terminal as ‘AI agent launches meme coin’ but the real story here is more like ‘AIs talking to each other are wet markets for meme viruses’”. New Scientist recently reported on genetic testing of samples collected during the earliest days of the covid-19 outbreak. The testing suggests the virus most likely spread from animals to humans at the Huanan seafood market in Wuhan. In 2024 we’re seeing new forms of transmissible agent emerge from the close synthetic interactions of our AI creations. QAnon serves as another striking example of a memetic parasite, formed in the dense and toxic interactions of humans on message boards like 4chan. Paul Christiano, head of AI safety at the US AI Safety Institute (part of NIST), is well known for his concerns about AI risk. He has openly discussed the scenarios in which AI seems most likely to gain control: by co-opting or manipulating human factions rather than through direct takeover. Ayrey posted on X: “I think of GOAT and Truth Terminal are a shot across the bow from the future”. As Dennett and Dawkins might put it, they are ‘feral meme generators’ from the future. Unless we develop the necessary protective mechanisms, these synanthropic parasites may proliferate through digital and financial interactions much like biological viruses, potentially engineering human thought and behaviour on a significant scale.

JOOST

AI goes nuclear

In recent weeks we’ve covered the hyperscalers’ multi-billion-dollar investments in AI infrastructure and the commensurate demand for power. Progress accelerated this week. Amazon announced a partnership with Energy Northwest to build small modular nuclear reactors (SMRs), while Google signed a 500MW nuclear deal with Kairos Power.

Small modular nuclear reactors could play a crucial role in powering AI systems. Nuclear energy offers low-carbon, continuous power – qualities that make it an attractive alternative to traditional energy sources, especially as wind and solar struggle with intermittency issues. The focus on AI’s energy consumption marks a shift from previous concerns about blockchain technology. While crypto-related energy use has begun to stabilise, AI has emerged as the next significant computational technology to watch in terms of energy demands.

According to the International Energy Agency’s 2024 report, AI’s overall energy consumption remains relatively modest compared with sectors like industrial manufacturing (projected 2026 shares of total global demand: 1.30% for standard data centres, 0.33% for crypto, and 0.26% for AI). But the challenges are still immense. AI power demand is highly concentrated inside a data centre, and adding supply capacity is difficult in regions that already host multiple data centres.
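The three IEA shares quoted above are easier to compare side by side. The short Python sketch below adds them up and expresses AI's slice as a fraction of the combined total. It uses only the figures quoted in this piece, which we have not re-checked against the IEA report, and the variable names are our own.

```python
# Projected 2026 shares of total global demand, in percent,
# as quoted in the paragraph above (not independently verified).
shares = {
    "standard data centres": 1.30,
    "crypto": 0.33,
    "AI": 0.26,
}

# Combined share of all three categories.
combined = sum(shares.values())

# AI's portion of that combined figure (a ratio between 0 and 1).
ai_of_combined = shares["AI"] / combined

print(f"Combined share of global demand: {combined:.2f}%")
print(f"AI as a fraction of the combined total: {ai_of_combined:.1%}")
```

Run as-is, it reports a combined share of 1.89% and puts AI at roughly 13.8% of that combined figure, about a seventh. This is why AI's global footprint still counts as modest. The pressure comes from where the demand is concentrated, not from its global total.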

Beyond nuclear power, other innovative approaches are being explored. Crusoe Energy and Blue Owl Capital recently launched a $3.4 billion joint venture to develop a 100,000-GPU AI data centre for Oracle and OpenAI, powered by on- and off-site renewables. Crusoe previously led the way in using waste gas to power crypto mining facilities. Waste gas is a byproduct of oil extraction. If oil field operators have no economical use for it or are unable to transport it elsewhere, it is often simply burned. According to a recent Crusoe report, for every ton of CO2 that the company produced in 2022, it reduced over 1.6 tons through avoided methane emissions. Founder Cully Cavness stated: “There is a huge amount of flared gas around the world, if you captured it all, it would power like two thirds of all of Europe’s electricity and it would power the entire datacentre industry many times over.”

Takeaways: The tech world is preparing for a future in which AI drives unprecedented demand for power. The industry is actively seeking sustainable energy solutions for its growing infrastructure. Next-generation nuclear energy and innovative approaches such as using flared gas are emerging as potential answers to the energy demand challenge.

EXO

Weekly news roundup

This week’s news highlights the growing influence of AI in business operations, ongoing debates in AI governance, advancements in AI research methodologies, and the booming AI hardware industry, reflecting the rapid evolution and widespread impact of artificial intelligence across various sectors.

AI business news

- Anthropic CEO goes full techno-optimist in 15,000-word paean to AI (Provides insight into the optimistic vision of AI’s future from a leading industry figure.)

- Sam Altman’s Worldcoin rebrands as World and launches updated iris-scanning Orb (Highlights the evolution of a controversial AI-driven digital identity project.)

- US Treasury says AI tools prevented $1 billion of fraud in 2024 (Demonstrates the practical benefits of AI in government operations and fraud prevention.)

- Google’s NotebookLM now lets you guide AI-generated audio conversations, launches business pilot (Shows the advancement in AI-powered communication tools for businesses.)

- Duolingo CEO Luis von Ahn on AI, gamification, and the power of freemium (Offers insights into how AI is transforming language learning and education technology.)

AI governance news

- Parents sue after student punished for using AI in class (Highlights the emerging legal and ethical challenges of AI use in education.)

- AI amplifies systemic risk to banks: India Reserve Bank boss (Underscores the potential risks of AI in the financial sector from a regulatory perspective.)

- Elon Musk’s X is changing its privacy policy to allow third parties to train AI on your posts (Raises concerns about data privacy and consent in AI training.)

- Deepfake romance scam raked in $46 million from men across Asia, police say (Illustrates the growing threat of AI-powered scams and the need for better detection methods.)

- Exclusive: EU AI Act checker reveals Big Tech’s compliance pitfalls (Provides insight into the challenges tech companies face in complying with new AI regulations.)

AI research news

- Agent S: An open agentic framework that uses computers like a human (Presents a novel approach to creating more human-like AI agents.)

- Model swarms: collaborative search to adapt LLM experts via swarm intelligence (Introduces an innovative method for improving large language models through collaborative learning.)

- StructRAG: boosting knowledge intensive reasoning of LLMs via inference-time hybrid information structurisation (Proposes a new technique to enhance the reasoning capabilities of language models.)

- WALL-E: World alignment by rule learning improves world model-based LLM agents (Describes a method to improve AI agents’ understanding and interaction with their environment.)

- Harnessing webpage UIs for text-rich visual understanding (Explores new ways to improve AI’s comprehension of visual information in web interfaces.)

AI hardware news

- TSMC bullish on outlook as AI boom blows Q3 profit past forecasts (Reflects the growing demand for AI-specific hardware and its impact on the semiconductor industry.)

- AMD’s AI networking solutions aim to enhance performance and scalability in AI environments (Showcases advancements in networking technology to support AI infrastructure.)

- Nvidia aims to boost Blackwell GPUs by donating platform design to the Open Compute Project (Highlights Nvidia’s strategy to accelerate AI hardware development through open collaboration.)

- Photonic computing startup Lightmatter reaches $4.4 billion valuation (Indicates growing investor interest in alternative AI computing technologies.)

- US ‘weighing up capping exports of AI chips’ (Underscores the geopolitical implications of AI hardware and its impact on global trade.)