Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- OpenAI’s new ChatGPT Canvas interface, and developer day announcements.

- Google’s NotebookLM and its new Audio Overviews feature.

- Why you should be wary of over-simplistic economic analysis of AI’s impact on jobs.

OpenAI accelerates

In the last month, after a period of mounting questions about their slow pace of shipping products, OpenAI have pressed fast forward. This week, from their annual San Francisco developer day to a surprise interface update for ChatGPT, the company is moving from talking to deploying.

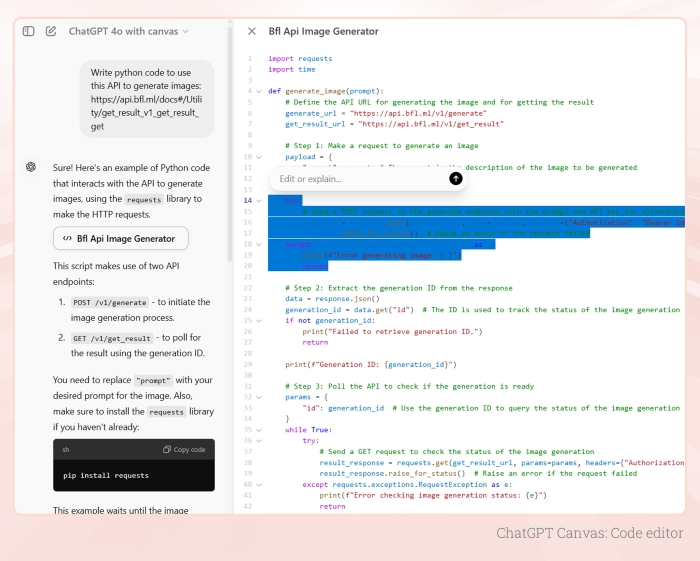

Firstly, Canvas, OpenAI's new collaborative interface for ChatGPT introduced on Thursday (initially in beta for all Plus users), appears to be more than just a facelift. It's a recognition that the chatbot format, while flexible, has its limitations. Canvas, modelled on Anthropic's Claude artifacts, lets users work on writing and coding projects in a more intuitive, document-like environment. For writing, the versioning, paragraph-level partial updates and live editing tools are a welcome addition. For software engineering, the inline controls could dramatically improve the process of iterating on and refining up to several hundred lines of code. The line-by-line refine-and-update workflow is reminiscent of the exceptional editing experience in the increasingly popular Cursor IDE. In the screenshot above you can see the classic chat on the left, with handy info, and on the right the code window, with a section of code highlighted for specific questions or editing.

Earlier in the week, OpenAI's developer day was packed with solid technical announcements. The new Realtime API brings low-latency, true speech-to-speech experiences to developers' fingertips, while vision fine-tuning opens up more challenging use cases. These, along with cost-reducing prompt caching, model distillation and lower API prices, are the building blocks for the next generation of AI-powered systems.

And the o1 model family (AKA 'Strawberry') was never far from the discussions. Its reinforcement learning advances continue to show promise in planning, mathematical and coding tasks. As Noam Brown, Ilge Akkaya and Hunter Lightman discussed in a recent Sequoia Capital podcast, o1's ability to use chains of thought and backtracking mimics human-like reasoning. This isn't just about solving equations; it's about creating AI that can tackle complex, multi-step problems across various domains.

Never one to miss an opportunity, Sam Altman used his dev day fireside chat to add fuel to the AGI speculation fire. The OpenAI CEO, who is increasingly concentrating power at the helm of the firm, reiterated the company's ambitious goals for achieving artificial general intelligence. He suggested that the very fact we are now debating how to define AGI means we are materially closer to the threshold. Bold claims as ever, but ones backed by a $6.6 billion funding round that values the company at $157 billion…

Yet, amid the innovation and investment, OpenAI faces internal challenges. Ongoing leadership attrition raises questions about the company's culture and direction. The apparently hasty release of o1, coupled with internal friction over safety concerns and product timelines, suggests a shift away from the company's original ethos of safety-first AI research. Mira Murati's departure, along with those of other key executives, hints at multiple internal struggles. Thursday brought news that a co-lead of Sora, the still-unreleased video generation model, had left to join Google. The rapid expansion of the workforce, with an influx of commercially oriented hires, marks a cultural transformation from a research-focused entity to a product-driven powerhouse. The push into products reflects a concern that, as a model 'training shop', OpenAI lacks a moat in an increasingly commoditised space.

Takeaways: OpenAI's developments in the second half of 2024 sit somewhere between incremental and pivotal. The acceleration is noticeable, but these are parts of the future, not the finished vision. The ball is now in the court of app developers and AI consultants to work out how to make the most of an almost overwhelming array of options. As we've covered in recent weeks, Microsoft, Apple and the other horizontal platform providers will gradually, and relatively cautiously, add AI features built on building blocks from the likes of OpenAI. But far more is already possible today, and too many of the world's knowledge and productivity problems remain unconnected to this incredible new toolkit.

Auto-podcasting emerges from uncanny valley

This week, Google's experimental AI tool NotebookLM started popping up everywhere thanks to its new Audio Overviews feature. It's not just another AI parlour trick; it seems to have crossed the uncanny valley into a relatable and genuinely engaging generated experience. It shows how AI-powered research and writing tools might help users consume analysis in something much closer to an evolving conversation than a flat summary.

NotebookLM’s Audio Overviews can chew through up to 50 source documents and spit out a podcast-style summary, complete with male and female synthetic voices. The AI doesn’t just read; it chats, throwing in ‘ums’ and ‘ahs’ like a real podcast duo. You can feed it anything from a dry research paper to a YouTube video, and it teases out the salient points and has the presenters analysing them from different angles.

OpenAI co-founder Andrej Karpathy posted on X: "The more I listen the more I feel like I'm becoming friends with the hosts, and I think this is the first time I've actually viscerally liked an AI." Numerous podcast series created using the feature have already appeared, and more services of this kind are likely to emerge.

Not wanting to be left out, we put NotebookLM through its paces by generating a very 'meta' episode. Some months ago, we published a fascinating conversation between Claude 3 Opus and a pre-release version of GPT-4o. The conversation is long and takes a while to read through, but in this podcast the NotebookLM 'hosts' provide a short and engaging overview. It's AIs discussing AIs, but trust us, it's worth a listen…

Takeaways: NotebookLM's Audio Overviews and OpenAI's new voice mode are signs that text-to-speech is reaching a level where it feels right. It no longer sounds like the stilted, monotonous delivery of a computer, but like relatable, human-like entities. This will offer a fresh way to package and consume information and to interact with AI. But, as ever, it also means that a zero-trust approach to digital interactions becomes even more crucial. As these AI voices become indistinguishable from humans, we'll need to develop new ways to verify the authenticity and source of the information we consume, even when it sounds like it's coming from a trusted friend.

Why not to take every renowned economist’s view on AI at face value

This week, in an interview with Bloomberg, MIT economist Daron Acemoglu restated his generally pessimistic view that AI will impact only 5% of jobs, while warning of a stock market crash. It's the ExoBrain view that this perspective grossly underestimates AI's transformative potential and completely overlooks the realities of 21st-century knowledge work, productivity, innovation, and the new and complex dynamics of AI.

Acemoglu's 5% relates to his estimate of the proportion of jobs "ripe for being taken over or heavily assisted by AI technologies". This number likely draws on his previous work on task automation; for example, in a 2022 paper he examines how automation plays out at an occupational level. What this analysis does not do is factor in a range of more complex AI impacts; it is blind to a future that will likely be materially different from the past. AI-powered innovation in science, technology and healthcare could lift the upper bounds of economic growth, moving us beyond the zero-sum model favoured by many economists. AI may enable new, extremely efficient, high-revenue-per-employee businesses, remove external constraints on business-level automation, and even accelerate its own creation and adoption, further speeding up economic change. It's the ExoBrain (and the market's) bet that we will shift from a labour-constrained to a compute-constrained economic system in the coming years, which is precisely why the datacentre build-out is accelerating in every corner of the globe. These and many other complex dynamics make a task automation assessment at an occupational level somewhat inadequate.

History does show that industries and workforces evolve in response to technological revolutions. A recent study suggests 80% of software developers will need to upskill by 2027 due to AI’s impact. Rather than job losses, this points to a transformation of roles and the creation of new opportunities.

Ultimately, Acemoglu's methodology fails to capture AI's true potential: unlocking, not simply automating, knowledge work. Cal Newport, a leading expert on workplace efficiency, argues that it's fundamentally wasteful for highly skilled professionals to spend significant portions of their workday on administrative tasks, team chat and email. Yet this misallocation of expertise is the norm in today's knowledge work environments. He cites the absurdity of a world-renowned vaccine researcher with decades of experience spending a third of their time fielding requests from HR, building management, finance, and on and on. Speaking recently on the 80,000 Hours podcast, Newport argued that while the 20th century saw unparalleled gains in productivity and wealth through industrial process automation, knowledge work, despite the 'IT revolution', is still stuck in 1900. Task switching and information overload cost the economy trillions of dollars of GDP every year, across every role, team and organisation, and assessing task automation at an occupational level fails to account for this. Please raise your hand (or comment on this post) if you believe your knowledge workplace is as efficient as it could be…

Takeaways: It's time to recalibrate our expectations of AI's transformative power and avoid placing too much store in over-simplified economic models. AI's natural ability to hoover up low-level knowledge work, even at today's levels of capability, will start to have an impact as costs drop further and user interfaces improve (see the other topics this week!), but the next phase will be far more interesting. Businesses should look beyond job displacement and focus on AI's potential to enhance productivity, drive innovation and create altogether new value streams. If AI could automate away just 5% of the noise and task switching in our daily lives, that alone would unlock more output, more problem solving and more economic growth. If AI can break us out of the zero-sum model (as we're already seeing with breakthroughs such as AlphaFold, which does work that was almost impossible before it existed), then the impacts will be vast. The real question isn't whether AI will impact 5% or 95% of today's jobs, but how we can harness its potential to drive progress across all levels and sectors of the economy and society.

EXO

Weekly news roundup

This week’s news highlights significant investments in AI training and infrastructure, advancements in AI applications across various sectors, and ongoing discussions about AI regulation and privacy concerns.

AI business news

- Accenture to train 30,000 staff on Nvidia AI tech in blockbuster deal (Demonstrates the growing demand for AI expertise in the consulting industry.)

- Strava’s powerful AI insights are here – athlete intelligence is now available in beta (Shows how AI is enhancing personal fitness tracking and analysis.)

- Google’s AI search summaries officially have ads (Highlights the monetisation of AI-powered search features.)

- Venture dealmaking in the US reflects selective tastes of investors (Indicates shifting investment trends in the AI startup ecosystem.)

- Investors are scrambling to get into ElevenLabs, which may soon be valued at $3 billion (Showcases the high valuation potential of AI voice technology companies.)

AI governance news

- California governor Gavin Newsom vetoes landmark AI safety bill (Highlights the challenges in implementing AI regulations at the state level.)

- Hey, UK! Here’s how to ‘opt out’ of Meta using your Facebook and Instagram data to train its AI (Addresses growing concerns about data privacy in AI training.)

- James McAvoy and Tom Brady fall for ‘Goodbye Meta AI’ hoax (Illustrates the potential for misinformation surrounding AI developments.)

- Microsoft to re-launch ‘privacy nightmare’ AI screenshot tool (Demonstrates the ongoing tension between AI innovation and privacy concerns.)

- SAP chief warns EU against over-regulating artificial intelligence (Reflects the debate between innovation and regulation in the AI industry.)

AI research news

- When a language model is optimised for reasoning, does it still show embers of autoregression? An analysis of OpenAI o1 (Provides insights into the behaviour of advanced language models.)

- TPI-LLM: serving 70B-scale LLMs efficiently on low-resource edge devices (Showcases advancements in deploying large language models on resource-constrained devices.)

- FlashMask: efficient and rich mask extension of FlashAttention (Presents improvements in attention mechanisms for AI models.)

- Liquid foundation models: our first series of generative AI models (Introduces new generative AI models with potential applications.)

- Introducing contextual retrieval (Highlights advancements in AI-powered information retrieval systems.)

AI hardware news

- Equinix signs $15 bln joint venture to build U.S. data center infrastructure (Indicates significant investment in AI infrastructure.)

- US sets new rule that could spur AI chip shipments to the Middle East (Shows potential expansion of AI hardware markets.)

- China trains 100-billion-parameter AI model on local tech (Demonstrates China’s progress in developing large-scale AI models using domestic technology.)

- Microsoft AI bet shows up in finance leases that haven’t yet commenced (Reveals the scale of Microsoft’s investment in AI infrastructure.)

- Google will invest $3.3B to increase its data center capacity in South Carolina (Highlights major investments in AI computing infrastructure.)