Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

JOEL

This week we look at:

- How data centre communities are pushing back and a cross-partisan political movement is forming

- Anthropic’s $30 billion funding round and $14 billion in revenue

- Google’s Deep Think crushing ARC-AGI-2

Post-human buildings with a human cost

This week, New York lawmakers proposed a three-year moratorium on new data centres. The Trump administration pushed AI companies to sign a voluntary pact promising their facilities won’t raise household electricity prices or strain water supplies. And Anthropic became the first major AI company to pledge it would cover consumer electricity price increases caused by its data centres. Three announcements in a single week, each pointing in the same direction: the communities where AI is physically built are pushing back. A political movement is forming. Not just in Washington. Not just in think tanks. In town halls, county planning commissions, and local Facebook groups. It’s disorganised, cross-partisan, and driven by something very simple: people can see, hear, and feel these things arriving in their communities. The grey barns. The hum. The webs of wires. The rising bills.

In Fulton County, Indiana, a rural community of 20,000 people, officials voted 6-1 for a data centre moratorium after a packed, nearly three-hour public hearing. Residents said data centres were swallowing farmland with “no explanation at all.” In Illinois, a man was arrested for threatening to kill local officials over data centre discussions. Between March and June last year, 20 data centre projects worth approximately $98 billion were blocked or delayed across the United States. At least 8 Georgia towns and 4 Indiana counties banned new construction entirely.

Northern Virginia is the epicentre of the global data centre industry. Over 300 facilities handle an estimated 70% of global internet traffic, with more than 4,900 MW of operating capacity and another 1,000 MW under construction. The region’s data centre power demand grew from 3.3 GW in 2020 to 16.6 GW in 2026, and projections suggest it could exceed 33 GW by 2030. In July 2024, a voltage fluctuation in Northern Virginia caused 60 data centres to simultaneously disconnect, creating a 1,500 MW power surplus that nearly triggered cascading outages across the grid. Wholesale electricity prices near these hubs have risen by up to 267% over five years. Household bills are projected to climb more than 25% by 2030. Over 4,000 backup diesel generators sit across the region, and the state handed out $1.9 billion in data centre tax exemptions in FY2025 while residents watched their bills rise.

In The Dalles, Oregon, a town of 16,000, Google’s facilities now consume roughly one-third of the municipal water supply. In Newton County, Georgia, neighbours of Meta’s data centre have reported wells running dry, with nine more applications on file, some requesting more water per day than the county currently uses in total.

The AI industry tends to cite global averages when defending its footprint. Data centres consume around 1.5% of global electricity. AI itself accounts for perhaps 0.15-0.22%. These numbers are accurate. They are also misleading. In Ireland, data centres consume 22% of all metered electricity. In Virginia, a single state’s data centre power demand grew from 3.3 GW to 16.6 GW in six years and could double again by 2030. Microsoft’s planned Wisconsin campus alone, at 3.3 GW, would consume more electricity annually than many small countries. The gap between global averages and acute local reality can be stark.
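As a rough sanity check on the scale of these local figures, the sketch below converts a continuous power draw into annual energy and works out the compound yearly growth implied by Virginia’s rise from 3.3 GW to 16.6 GW over six years. It assumes the quoted gigawatt figures represent constant, round-the-clock draw, which likely overstates real consumption; the function names and the `utilisation` parameter are illustrative and not taken from any source.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_twh(power_gw: float, utilisation: float = 1.0) -> float:
    """Annual energy in TWh for an average draw given in GW.

    `utilisation` is a hypothetical load factor (1.0 means running flat out).
    """
    return power_gw * utilisation * HOURS_PER_YEAR / 1000  # GWh -> TWh


def compound_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate, returned as a fraction."""
    return (end / start) ** (1 / years) - 1


# Figures quoted in the text above.
wisconsin_twh = annual_energy_twh(3.3)
virginia_cagr = compound_annual_growth(3.3, 16.6, 6)

print(f"3.3 GW at full load: {wisconsin_twh:.1f} TWh per year")
print(f"3.3 GW to 16.6 GW over six years: {virginia_cagr:.1%} per year")
```

Under the full-load assumption, a 3.3 GW campus works out to roughly 29 TWh a year, and the Virginia figures imply demand growing by about 31% a year. Lowering `utilisation` scales the energy estimate down proportionally.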

Bernie Sanders has called for a national moratorium on data centre construction. Ron DeSantis proposed a Florida “AI Bill of Rights” that would let local communities block projects outright and ban utilities from passing hyperscale infrastructure costs to residential ratepayers. A democratic socialist and a right-wing governor, agreeing on almost nothing else, have arrived at the same conclusion: the AI industry’s appetite for electricity is a problem that ordinary people shouldn’t have to pay for. As TIME put it, the emerging politics of AI “does not map onto the traditional left-right ideological spectrum.” This isn’t a tech regulation debate. It’s a cost-of-living issue, and those cut across every political line.

The industry is responding. Anthropic’s pledge to cover electricity cost increases is a first. Microsoft launched a “Community-First AI Infrastructure” initiative in January, promising to absorb electricity costs, replenish water, and pay full property taxes. The Trump administration’s voluntary pact asks companies to “hold harmless” residential electricity prices. These are defensive moves from an industry that recognises it is losing social licence in the places it needs most.

Takeaways: The FT’s architecture critic recently declared the data centre the defining building style of the 21st century, “the first real major post-human building type, an architecture built not for us but for the computing power we are coding.” That power is not coded in the abstract. It is built with concrete, cooled with water, and fed by electricity grids that serve homes, schools, and hospitals. Combined hyperscaler capital expenditure is projected at $600-630 billion for 2026, comparable to the entire global semiconductor industry’s annual revenue. That money is building post-human architecture in very human places, and the people who live in those places are organising. The question “who pays?” is no longer a policy footnote. It is the central political question of AI infrastructure, and right now, the answer is unresolved.

The fastest growing software company of all time

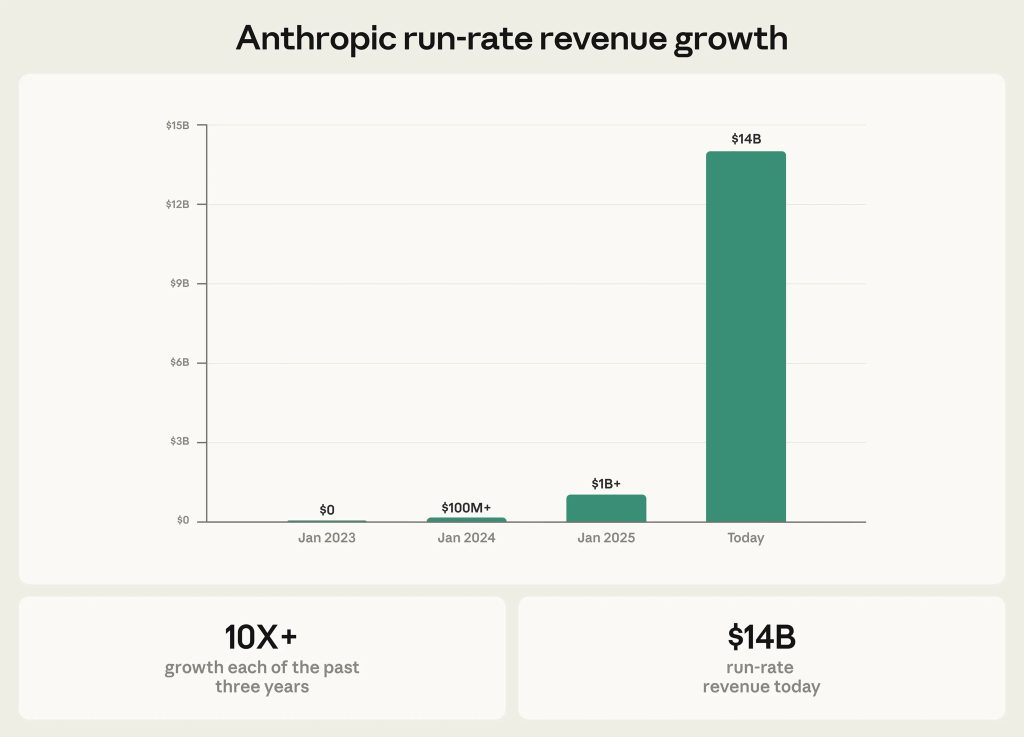

Anthropic this week closed a $30 billion Series G at a $380 billion valuation and revealed annualised revenue of $14 billion. That makes it the fastest-growing software company in history. The trajectory is staggering: $0 to $100 million in 2023, $100 million to $1 billion in 2024, $1 billion to $14 billion through 2025 and into early 2026. Three consecutive years of roughly 10x growth. No software company has ever done that. Not Salesforce, not Slack, not OpenAI. Claude Code alone, launched less than a year ago, is now at a $2.5 billion run rate. Customers spending over $1 million a year went from 12 to over 500 in two years. Eight of the Fortune 10 are now Anthropic customers.

In a wide-ranging interview with Dwarkesh Patel this week, Anthropic CEO Dario Amodei was candid about where all this is heading. He expects growth to “slow” to 3-4x in 2026, targeting around $30 billion in annual revenue. The reason is not that demand is softening. It’s that you can’t physically build data centres and procure chips fast enough to serve continued demand, and that demand is increasingly creating a new paradigm. Back in December, Anthropic co-founder Jack Clark predicted that by summer 2026 “the AI economy may move so fast that people using frontier systems feel like they live in a parallel world to everyone else.” The revenue numbers suggest that parallel world is already forming.

Amodei remains resolutely supportive of the LLM’s transformer architecture, and offered a perspective on how AI models actually learn. They are not as “sample efficient” as humans, he concedes, requiring far more data to pick up a new concept. But within their context window, they can learn and adapt remarkably well. And the comparison to human learning, he argues, is less clear-cut than people assume. The human brain is not a blank slate at birth. It arrives pre-loaded with millions of years of evolutionary architecture, instincts and inherited structures that took billions of parameter-equivalents of natural selection to build. When you account for all that embedded prior knowledge, models start to look less like slow learners and more like a different kind of fast learner, one that trades our biological head start for raw scale and speed.

Amodei argues that even without solving continuous on-the-job learning, in-context learning within a million-token window gets you most of the way there, and that’s enough to generate “trillions of dollars of revenue.”

Takeaways: There is a persistent narrative that AI infrastructure is being overbuilt. Anthropic’s numbers suggest the opposite. A company that tripled revenue to $14 billion and is still constrained by how fast it can pour concrete and rack GPUs is not a company whose supply is outrunning demand. Rather than a supply glut, the reality, for now, is a fundamental supply constraint.

EXO

ARC-AGI-2 falls to Gemini Deep Think

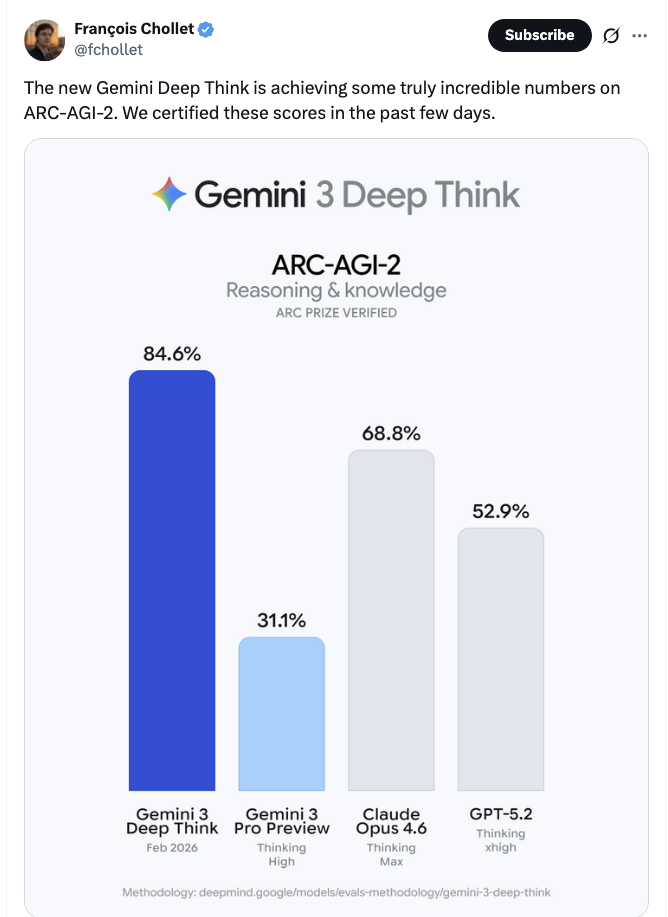

With minimal fanfare, Google released the February 2026 edition of its Deep Think variant of Gemini 3, and as this chart shows, it casually crushes what has been one of the hardest benchmarks for AI over the last year. ARC-AGI-2 was designed to test novel reasoning that’s “easy for humans, hard for AI,” and humans average around 60% on it. Deep Think now scores 84.6%, with Claude Opus 4.6 at 68.8%, GPT-5.2 at 52.9%, and Gemini’s own base model trailing at 31.1%. François Chollet’s ARC Prize team, who verified these scores, also flagged that the model appeared to know ARC’s colour mappings without being told, suggesting ARC data may be well represented in Google’s training set. Whether this reflects genuine reasoning or brute-force compute remains an open question, and we won’t have to wait long for a tougher test: ARC-AGI-3 launches next month with interactive, game-like environments where models must explore, set their own goals and adapt on the fly, capabilities that no amount of extra thinking tokens can fake.

Weekly news roundup

This week’s headlines reveal AI’s deepening impact on the workplace (from longer workdays to developers who’ve stopped writing code), alongside growing tensions over AI governance, a rich crop of agentic research, and continued hardware diversification beyond Nvidia.

AI business news

- AI makes the workday longer and more intense: HBR study (A Harvard Business Review study challenges the productivity promise, finding AI tools are increasing work hours and cognitive load rather than reducing them.)

- Elon Musk suggests spate of xAI exits have been push, not pull (Signals internal turbulence at one of the major frontier AI labs, with Musk framing recent departures as deliberate rather than talent poaching.)

- Databricks CEO says SaaS isn’t dead, but AI will soon make it irrelevant (A bold prediction that AI agents will replace traditional software-as-a-service, with major implications for enterprise technology strategy.)

- China’s Zhipu AI launches GLM-5 with 30% price increase — as stock jumps 34% (Shows Chinese AI companies gaining pricing power and investor confidence as the global frontier model race intensifies.)

- Spotify says its best developers haven’t written a line of code since December, thanks to AI (A striking real-world example of how AI coding tools are fundamentally reshaping the role of software engineers at major companies.)

AI governance news

- ‘It’s over for us’: release of new AI video generator Seedance 2.0 spooks Hollywood (The latest leap in AI video generation raises urgent questions about deepfakes, intellectual property, and the future of creative industries.)

- OpenAI accuses China’s DeepSeek of distilling US AI models to gain an edge (Escalates the US-China AI rivalry with allegations of model distillation, highlighting the difficulty of protecting AI intellectual property.)

- UK Supreme Court ruling on patents and AI is boost for innovation, lawyers say (A landmark legal decision that could shape how AI-generated inventions are treated in intellectual property law across jurisdictions.)

- The backlash over OpenAI’s decision to retire GPT-4o shows how dangerous AI companions can be (Highlights the growing emotional dependency users develop on AI systems and the ethical responsibilities of model deprecation.)

- A wave of unexplained bot traffic is sweeping the web (Raises concerns about large-scale data harvesting operations, potentially linked to AI training pipelines, disrupting web infrastructure.)

AI research news

- Think longer to explore deeper: learn to explore in-context via length-incentivised reinforcement learning (Proposes a novel RL approach that rewards longer reasoning chains, improving exploration depth in large language models.)

- Weak-driven learning: how weak agents make strong agents stronger (An intriguing finding that weaker AI models can systematically improve stronger ones, with implications for efficient training pipelines.)

- AgentSkiller: scaling generalist agent intelligence through semantically integrated cross-domain data synthesis (Advances the goal of building generalist AI agents by synthesising training data across multiple domains.)

- LLaDA2.1: speeding up text diffusion via token editing (Introduces token editing to accelerate diffusion-based language models, offering a promising alternative to autoregressive generation.)

- Agyn: a multi-agent system for team-based autonomous software engineering (Presents a collaborative multi-agent architecture for end-to-end software development, relevant to the growing AI coding agent space.)

AI hardware news

- OpenAI debuts first model using chips from Nvidia rival Cerebras (A significant step in diversifying AI compute away from Nvidia’s dominance, signalling a maturing hardware ecosystem.)

- Taiwanese whiskey importer looks to pivot to data centres (An amusing but telling sign of the gold-rush economics around AI infrastructure, as unlikely companies chase data centre revenues.)

- ByteDance in talks with Samsung for production of 350,000 AI inferencing chips (Highlights Chinese tech giants’ push to secure custom silicon supply chains amid ongoing US export restrictions.)

- Microsoft to launch Saudi Arabia East cloud region in Q4 2026 (Reflects the growing geographic expansion of AI infrastructure into the Middle East, driven by sovereign AI ambitions and investment.)

- Why the economics of orbital AI are so brutal (A sobering analysis of space-based AI computing, examining why the physics and costs make satellite-hosted inference extremely challenging.)