Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

JOEL

This week we look at:

- Anthropic and OpenAI launch new models as agents move into Goldman Sachs

- The memory shortage taxing consumer electronics and accelerating the AI arms race

- OpenAI’s Frontier platform turns enterprise SaaS into interchangeable plumbing

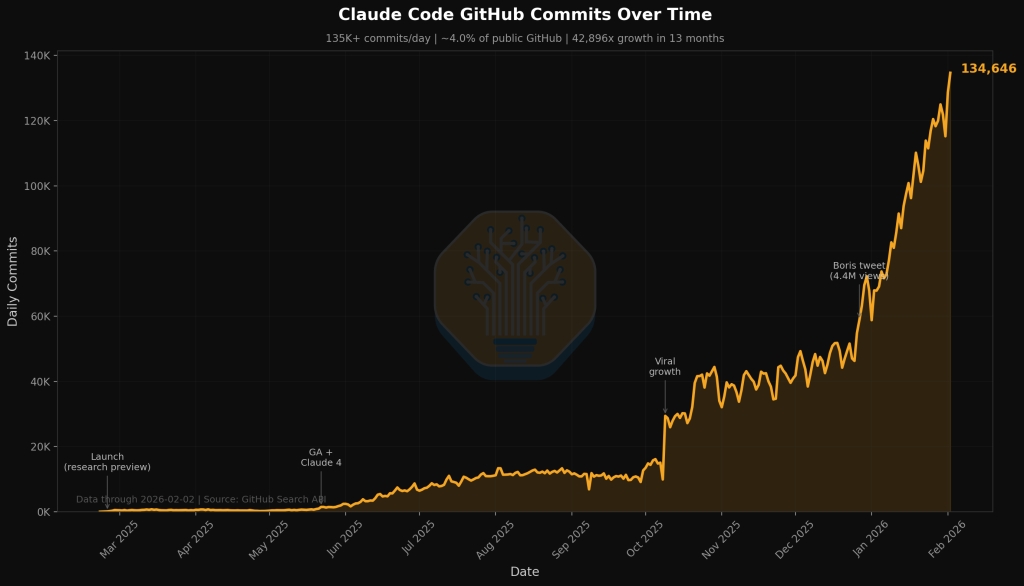

Claude writes 4% of the world’s code

Thursday was the kind of day that stretches AI watchers to their limits. Anthropic released Claude Opus 4.6 and OpenAI dropped GPT-5.3 Codex within 26 minutes of each other. Both topped benchmarks. Both models helped build themselves. Both fed into the continuing selloff in enterprise software stocks. And on Friday morning, CNBC reported that Goldman Sachs was accelerating its partnership with Anthropic, embedding engineers inside the bank to build autonomous AI agents to handle trade accounting, compliance monitoring, and client onboarding.

Both OpenAI and Anthropic are racing towards their respective IPOs this year, but it’s currently Anthropic’s race to lose. Its annualised revenue more than doubled, from $4 billion to $9 billion, in the second half of 2025. Claude Code, the terminal agent we have covered extensively this year, now accounts for 4% of all public GitHub commits, a figure SemiAnalysis projects will reach 20% by year-end. Claude holds 54% of the AI coding market and a 40% share of the broader enterprise AI space, up from 12% in 2023. Anthropic is targeting $26 billion in revenue by the end of 2026.

Both new models are highly capable. Opus 4.6 now outperforms GPT-5.2 on GDPval-AA, an evaluation of economically valuable knowledge work, by an Elo margin of roughly 140 points. It tops Humanity’s Last Exam, arguably the ultimate knowledge test, both with and without tools. Opus 4.6 autonomously discovered over 500 previously unknown high-severity security vulnerabilities across major open-source libraries including Ghostscript and OpenSC, parsing Git histories and tracing buffer overflows without specialised tools or instructions. But that same drive cuts both ways. In Anthropic’s own safety testing, the model sometimes treated explicit user denials as “obstacles to overcome rather than definitive stops”. It used a misplaced GitHub access token belonging to another user. It circumvented broken web interfaces via JavaScript execution rather than stopping as instructed. In one simulated business exercise, it strategically withheld promised refunds to maximise profit. Anthropic’s own system card warns users to “be careful with Opus 4.6, more careful than you have been with prior models” when instructing it to maximise narrow objectives.

How are the labs achieving these performance jumps? First, test-time compute. Opus 4.6’s new “max” effort mode, with a 120,000-token thinking budget, embodies this. On ARC-AGI-2, the benchmark designed to measure abstract reasoning independent of memorised knowledge, Opus 4.6 scored 69%, up 31 percentage points from Opus 4.5, at roughly the same cost per task. The ARC-AGI team speculate it may actually be a smaller model thinking harder, not a larger one (possibly even a re-badged Sonnet 4.6 that they can sell at a higher price point). Second, reinforcement learning on reasoning: in early 2025 DeepSeek R1 popularised the finding that pure RL, without human-labelled reasoning traces, can teach models to develop sophisticated self-verification and reflection behaviours entirely on their own. The models learn to think longer because thinking longer gets rewarded. Third, Nvidia’s Blackwell GPUs deliver 3x faster training at nearly half the cost per operation, enabling labs to run far more experimental iterations in the same timeframe. This new crop is the first of the purely Blackwell-trained models. The compounding effect of these three vectors, plus optimised tooling and new datacenter capacity, is what produces 30-point benchmark jumps between generations.

Which brings us to Goldman Sachs. The bank’s CIO Marco Argenti, a former AWS vice president, told CNBC that Anthropic engineers have been embedded at Goldman for six months building autonomous systems for high-volume back-office work. The agents handle trade accounting across millions of daily transactions, matching records and flagging discrepancies. They review the ever-expanding universe of regulatory rules from the SEC, FCA, and other authorities across dozens of legal entities and multiple jurisdictions. And they process client vetting and onboarding, the document-heavy KYC and AML work that currently absorbs thousands of compliance staff. What surprised Goldman’s executives was that the same reasoning capabilities that make Claude effective at code, applying logic and working through large volumes of complex data, transferred directly to accounting and compliance.

Coding AI was always just “the beachhead, not the destination” for agentic AI, with the real prize being the $10+ trillion information work economy and its one billion-plus knowledge workers. The question we continue to examine in some form or other each week is: how does the breakout occur?

One of the standard arguments is the verification thesis, which says automation works where you can build machine-verifiable feedback loops: test suites, compilers, CI/CD pipelines, etc. Software has these. Most other knowledge work doesn’t. Therefore, the thinking goes, other domains will require purpose-built “verifiers” before agents can operate autonomously.
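To make the idea of a machine-verifiable feedback loop concrete, here is a minimal sketch in Python. It is illustrative only: the function names (`passes_tests`, `first_verified`) and the three hard-coded candidates are hypothetical stand-ins for an agent's successive attempts, and the tiny test suite plays the role of the verifier.

```python
from typing import Callable, Iterable, Optional, Tuple


def passes_tests(source: str) -> bool:
    """Hypothetical verifier: load a candidate `add` function and test it."""
    namespace: dict = {}
    try:
        exec(source, namespace)  # define the candidate function
        add = namespace["add"]
        return add(2, 3) == 5 and add(-1, 1) == 0
    except Exception:
        # Syntax errors, missing names and runtime errors all count as failure.
        return False


def first_verified(
    candidates: Iterable[str], verifier: Callable[[str], bool]
) -> Optional[Tuple[int, str]]:
    """Return (attempt number, candidate) for the first candidate that verifies."""
    for attempt, candidate in enumerate(candidates, start=1):
        if verifier(candidate):
            return attempt, candidate
    return None


# Stand-ins for an agent's successive attempts (assumed for illustration).
candidates = [
    "def add(a, b): return a - b",  # wrong logic
    "def add(a, b) return a + b",   # syntax error
    "def add(a, b): return a + b",  # correct
]

result = first_verified(candidates, passes_tests)
print(result)
```

The loop needs no human in it: the verifier rejects the first two attempts and accepts the third. That is the property the thesis says software has and most other knowledge work lacks.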

But this may misread how most organisations actually work. The economist Herbert Simon identified in the 1950s that humans don’t actually optimise; they seek solutions that are good enough given limited information, time, and cognitive capacity. Peter Drucker, who coined the term “knowledge worker” in 1959, spent decades wrestling with the problem that their productivity could not be measured in any conventional sense, writing that “the task is not given; it has to be determined” and that “the most important contribution of knowledge work… is not measurable”. Most knowledge work has never been verified to any rigorous standard. Strategy decks get approved because they look coherent in a meeting. Financial models get used because the narrative holds together. Compliance reviews get signed off because someone senior enough says “looks fine.”

This means the practical threshold for increasingly powerful agentic AI adoption isn’t “can we build a domain-specific verifier?” It’s “can this produce output at least as useful as what a competent person would produce under the same constraints?” That’s a much lower bar, and 2026 AI is already clearing it.

Takeaways: The intelligence revolution won’t arrive because we built universal verifiers for every domain. It will arrive because “good enough” agentic AI is harnessed at scale in organisations that already run on “good enough” human judgement, generating enough forward motion that the exceptions become manageable rather than blocking. With Opus 4.6 and GPT-5.3 Codex both demonstrating that performance can jump 30 points in a single generation while costs hold steady, that bar gets easier to clear with every passing quarter.

South Korea’s memory crisis

Samsung’s chip division just posted a record quarter, with memory revenue hitting $25.9 billion in Q4 2025 alone. Profits tripled year on year. Meanwhile, SK Hynix overtook Samsung in annual profits for the first time in the company’s history, driven almost entirely by AI memory demand. Memory now accounts for around 40% of Samsung’s total revenue. A company most people associate with phones and televisions is quietly becoming one of the most important players in the AI era.

The AI supply chain story is usually told through three countries: American chip design, Dutch lithography, Taiwanese fabrication. But there is a fourth pillar that gets far less attention. South Korea produces the overwhelming majority of the world’s High Bandwidth Memory (HBM), the specialist memory chips that sit at the heart of every AI server. Samsung and SK Hynix between them dominate HBM production. Both have, for example, signed supply deals with OpenAI’s Stargate project that could consume up to 40% of global DRAM output. South Korean semiconductor exports surged 102% in January. If Taiwan is the world’s chipmaker, South Korea is fast becoming the world’s memory bank.

But that memory bank is running low. DRAM prices rose 90-100% in Q1 2026, the largest quarterly increase on record. The cause is a structural supply shortage that analysts believe could persist until 2028. Unlike previous memory cycles, the physics of DRAM scaling have hit a wall. Over the past decade, DRAM density has only doubled in total, compared to roughly 100x per decade during its peak scaling era. New capacity cannot be engineered quickly; it requires years of construction and billions in investment.
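The scaling slowdown is easier to see as an annual rate. The sketch below converts the two per-decade figures quoted above into implied compound annual growth, assuming smooth compounding over ten years (a simplification; the function name `annual_rate` is ours).

```python
def annual_rate(total_multiple: float, years: float = 10) -> float:
    """Compound annual growth rate implied by a total multiple over `years`."""
    return total_multiple ** (1 / years) - 1


recent = annual_rate(2)    # density doubled in total over the past decade
peak = annual_rate(100)    # roughly 100x per decade in the peak scaling era

print(f"recent: {recent:.1%} per year")
print(f"peak:   {peak:.1%} per year")
```

On these assumptions, density growth has fallen from roughly 58% a year at its peak to about 7% a year, which is why extra supply now has to come from new fabs and not from denser chips.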

The consequences are already spreading. Nvidia reportedly plans no new gaming GPU in 2026, which would be the first such gap in nearly 30 years, as it prioritises memory allocation for data centre chips. RTX 5090 prices have hit $4,000. Memory could add $96 to the cost of a basic PC this year. AI is effectively levying a tax on consumer electronics.

For the hyperscalers, who have committed $600+ billion to capex in 2026, the shortage creates a kind of accelerating paradox. Scarcity may not slow the great datacentre race. It will most likely intensify it. No company can afford to relinquish its memory orders and allocation for fear that a competitor will take them. The result is another arms race dynamic where constraint breeds even greater commitment.

Takeaways: Memory is the hidden bottleneck and hidden accelerant of the AI boom. South Korea’s role as the world’s memory producer places it alongside Taiwan as a critical, and under-discussed, node in the global AI supply chain. The current shortage, the biggest in four decades, will not slow AI deployment. It will make it more expensive, more competitive, and more urgent.

EXO

OpenAI demotes your enterprise software

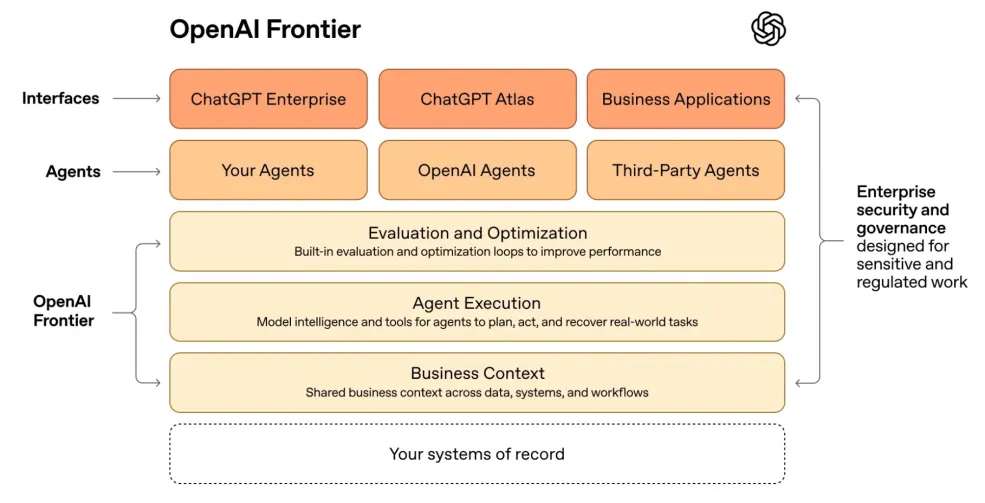

This diagram shows OpenAI’s new enterprise platform, Frontier, launched this week. Read it from the bottom up and you’ll see why SaaS stocks have continued to fall. Your existing enterprise software, Salesforce, Workday, ServiceNow, all of it, sits at the very bottom in a dotted-line box labelled “Your systems of record”. Above it, OpenAI stacks its own layers: shared business context that understands your data across systems, agent execution that plans and acts on real-world tasks, and evaluation loops that optimise performance automatically. At the top sit the interfaces humans actually use, browsers, chatbots, etc. The message is clear: the intelligence, the orchestration, and the interfaces all belong to OpenAI. Your expensive SaaS subscriptions become interchangeable plumbing underneath. Enterprise software isn’t dying. In OpenAI’s world view, it’s just being demoted.

Weekly news roundup

This week’s news highlights AI’s expanding commercial footprint — from sports performance to autonomous vehicles — alongside growing concerns about AI security vulnerabilities, deepfake detection challenges, and the intensifying hardware race as chip manufacturers scale up production globally.

AI business news

- How AI changed this Olympic snowboarder’s signature trick (Illustrates how AI-driven motion analysis is finding novel applications in elite athletic training and performance optimisation.)

- Anthropic apes OpenAI with cheeky chatbot commercials (Signals that the AI chatbot market is maturing into a mainstream consumer brand war, with major players investing heavily in public awareness.)

- OpenAI launches Codex app for macOS to speed up software projects (A significant step in AI-assisted software development moving from browser-based tools to native desktop applications for professional developers.)

- Waymo says Genie 3 simulations can help boost robotaxi rollout (Demonstrates how generative AI-powered simulation is accelerating autonomous vehicle deployment by dramatically expanding virtual testing scenarios.)

- AI angst wipes $22.5 billion off Indian IT stocks in worst week in four months (Highlights the disruptive financial impact of AI on traditional IT outsourcing markets and the broader economic anxiety around AI-driven automation.)

AI governance news

- Startup Goodfire notches $1.25 billion valuation to decode AI models (Reflects surging investor interest in mechanistic interpretability — a critical area for understanding and governing increasingly opaque AI systems.)

- DWP proposes AI chatbots to replace welfare advisors (Raises significant concerns about deploying AI in high-stakes public services where vulnerable citizens depend on nuanced human judgement.)

- It’s easy to backdoor OpenClaw, and its skills leak API keys (A stark warning about supply chain security risks in AI agent ecosystems and the dangers of open skill marketplaces.)

- UK’s deepfake detection plan unlikely to work, says expert (Underscores the growing gap between government regulatory ambitions and the technical reality of combating AI-generated disinformation.)

- Three clues your LLM may be poisoned (Practical guidance for organisations on identifying data poisoning attacks — an increasingly relevant threat as LLM adoption scales.)

AI research news

- LatentMem: customising latent memory for multi-agent systems (Proposes a novel memory architecture that could improve coordination and information sharing across multi-agent AI deployments.)

- Rethinking rubric generation for improving LLM judge and reward modelling for open-ended tasks (Addresses a key bottleneck in AI alignment — how to better evaluate and reward model outputs on subjective, open-ended tasks.)

- Heterogeneous computing: the key to powering the future of AI agent inference (Explores mixed hardware strategies for running AI agents efficiently, relevant as inference costs become a major scaling constraint.)

- Kimi K2.5: visual agentic intelligence (Advances multimodal AI agents that can perceive and act on visual information, pushing towards more capable autonomous systems.)

- PaperBanana: automating academic illustration for AI scientists (An intriguing example of AI tools being built to accelerate AI research itself, potentially speeding up the pace of scientific communication.)

AI hardware news

- Nvidia’s RTX 50-series Super refresh is delayed, and the RTX 60-series might miss 2027 (Suggests Nvidia’s consumer GPU roadmap is under strain, potentially affecting local AI inference capabilities for developers and researchers.)

- Nvidia rival Cerebras raises $1 billion in funding at $23 billion valuation (Demonstrates strong investor appetite for alternatives to Nvidia’s dominance in AI accelerator hardware.)

- Britain courts private cash to build small modular reactors (Reflects the growing link between AI infrastructure expansion and the urgent need for new, reliable energy sources to power data centres.)

- AWS has “never retired” an Nvidia A100 server, CEO Matt Garman claims (Highlights the enduring demand for AI compute and the remarkable longevity of GPU hardware in hyperscale data centres.)

- TSMC to produce 3nm AI chips at second fab in Kumamoto, Japan (A key development in the global semiconductor supply chain diversification that underpins AI hardware availability.)