Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

JOEL

This week we look at:

- Cowork, Cursor’s agent experiments, and the battle for agentic orchestration

- AI solving Erdős problems and Terence Tao’s vision for large-scale mathematics

- Which frontier models make the best traitors and faithfuls

The OS for Intelligence

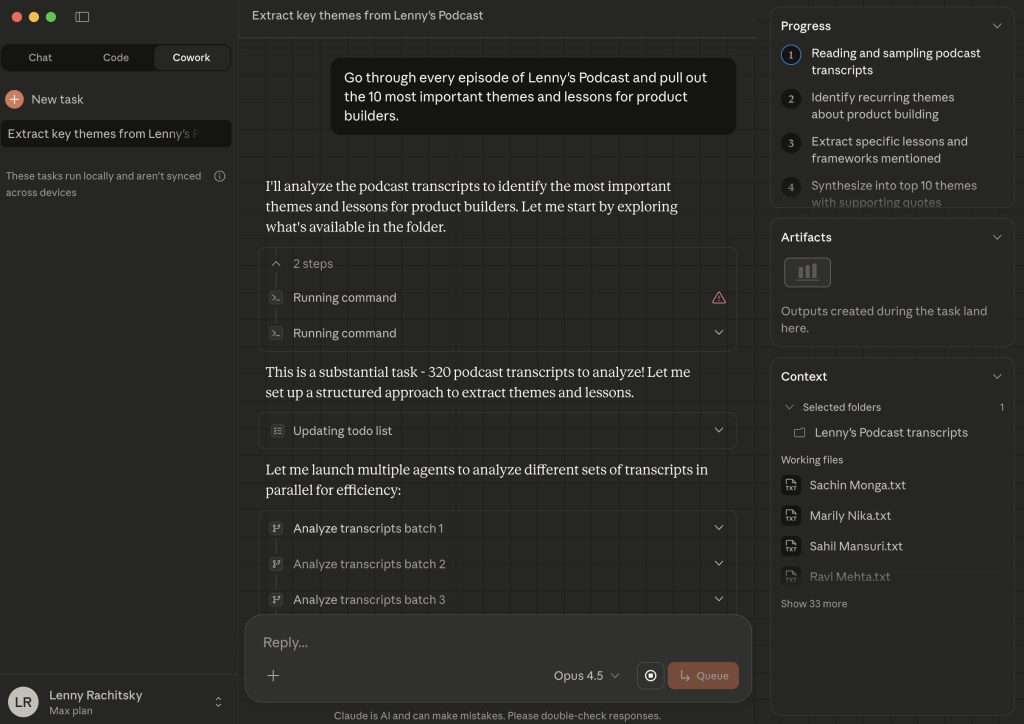

For years, we’ve organised digital work around applications. Open this app for documents, that one for spreadsheets, another for email. But as the Claude Code breakout continues, we’re seeing an inversion of this old model, where you describe outcomes and AI constructs its own solutions. Earlier in the week, Anthropic released Cowork, effectively “Claude Code without the code”. It allows users to give the model access to a specific folder, where it can organise files, create reports, edit documents, and handle multi-step workflows with minimal supervision. Anthropic frames it as moving from “chatty back-and-forth to agentic execution that feels more like a helpful colleague working in the background”. The folder becomes context. Claude becomes the brains of the operating system.

Incredibly, Boris Cherny, head of Claude Code at Anthropic, confirmed that Cowork was built in roughly ten days, and that all of its code was written by Claude Code itself. An AI coding tool built an AI productivity tool in less than two weeks. This is the recursive improvement loop, often discussed in abstract terms, actually happening and landing on your desktop (if you have a Mac and a $200 Max subscription, that is).

Cursor, meanwhile, has been running experiments that push the boundaries of extended autonomy. They pointed GPT-5.2 at an ambitious goal: building a web browser from scratch. The agents ran for close to a week, writing over a million lines of code across 1,000 files. Other experiments included a Windows 7 emulator (1.2 million lines), an Excel clone (1.6 million lines), and a Java language server (550,000 lines, 7,400 commits). Coordination, not raw intelligence, proved to be the bottleneck. Cursor’s initial approach gave agents equal status and let them self-coordinate through a shared file. This failed. Agents became risk-averse, avoiding difficult tasks and making small, safe changes. “No agent took responsibility for hard problems or end-to-end implementation.” The solution was hierarchy: planners that explore the codebase and create tasks, workers that grind on assigned tasks until done, and a judge that decides whether to continue or start fresh. The prompts, Cursor found, mattered more than the harness or even the model.
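To make the planner/worker/judge split concrete, here is a minimal Python sketch of the control flow. It is not Cursor’s code: the `planner`, `worker` and `judge` functions, the `MODULES` goal decomposition and the two-tasks-per-cycle planning limit are all hypothetical stand-ins, with plain functions where the real system would call a model.

```python
from dataclasses import dataclass

# Hypothetical decomposition of the goal: module name -> attempts a worker needs to finish it.
MODULES = {"parser": 1, "layout": 3, "render": 2}


@dataclass
class Task:
    name: str
    attempts_needed: int
    attempts: int = 0
    done: bool = False


def planner(codebase: dict[str, str], max_tasks: int = 2) -> list[Task]:
    """Explore the codebase and create tasks for missing modules (at most max_tasks per cycle)."""
    missing = [name for name in MODULES if name not in codebase]
    return [Task(name, MODULES[name]) for name in missing[:max_tasks]]


def worker(task: Task, codebase: dict[str, str]) -> None:
    """Grind on one assigned task until it is done; workers never coordinate with each other."""
    while not task.done:
        task.attempts += 1
        if task.attempts >= task.attempts_needed:
            codebase[task.name] = f"# code for {task.name}"
            task.done = True


def judge(codebase: dict[str, str]) -> bool:
    """Decide whether another cycle is needed (True) or the goal is met (False)."""
    return any(name not in codebase for name in MODULES)


def run(max_cycles: int = 5) -> tuple[dict[str, str], int]:
    codebase: dict[str, str] = {}
    for cycle in range(1, max_cycles + 1):
        # Each cycle starts with a fresh plan built from the current state of the code.
        for task in planner(codebase):
            worker(task, codebase)
        if not judge(codebase):
            return codebase, cycle
    return codebase, max_cycles


if __name__ == "__main__":
    code, cycles = run()
    print(sorted(code), cycles)
```

In this toy run the judge asks for a second cycle, because the planner hands out only two tasks at a time. The design choice being illustrated is that only the planner decides what gets done, and each worker owns a single task end to end, which is the property the flat, self-coordinating version lacked.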

This points to where the new moat lies. Models are increasingly commoditised: GPT-5.2, Claude Opus 4.5, and Gemini 3 Pro are roughly comparable for most tasks. Harnesses add little differentiation, and skills are formed and improved on demand. What can’t be downloaded is your accumulated domain knowledge: the specifications, conventions, and institutional context that tell agents what to build and how. These spec files become the new source code. They inform each fresh loop, ensuring agents start with the right constraints rather than drifting into generic solutions.

This is where patterns like Geoff Huntley’s “Ralph Wiggum Loop”, which we covered last week, and his new Loom concept become foundational. The key insight is combating “context rot”: as agents work longer, they accumulate stale context and start drifting. Starting each cycle fresh, with deterministic context allocation and state externalised to files rather than held in the conversation, avoids compaction and keeps agents productive. Steve Yegge’s Gas Town orchestrator builds on this foundation, managing 20-30 parallel AI coding agents through what he calls “Molecular Expression of Work” (MEOW): defining tasks in such granular steps that they can be picked up, executed, and handed off by ephemeral workers.
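The fresh-context idea can be sketched in a few lines of Python. This is an illustration under our own assumptions rather than Huntley’s implementation: the `SPEC.md` and `PROGRESS.md` file names are hypothetical, and `next_item` stands in for a model call. The point is that nothing survives between iterations except what is written to disk, and each iteration rebuilds its context from the same files in the same order.

```python
import tempfile
from pathlib import Path
from typing import Optional


def build_context(workdir: Path) -> str:
    """Deterministic context allocation: the same files, in the same order, every cycle."""
    spec = (workdir / "SPEC.md").read_text()
    progress = (workdir / "PROGRESS.md").read_text()
    return f"## Spec\n{spec}\n## Progress\n{progress}"


def next_item(context: str) -> Optional[str]:
    """Hypothetical stand-in for an agent: pick the first spec item not yet recorded as done."""
    spec_part, progress_part = context.split("## Progress\n", 1)
    done = set(progress_part.splitlines())
    for line in spec_part.splitlines():
        if line.startswith("- ") and line[2:] not in done:
            return line[2:]
    return None


def fresh_context_loop(workdir: Path, max_iterations: int = 10) -> int:
    """Run until the spec is complete; return the number of iterations that did work."""
    for i in range(max_iterations):
        context = build_context(workdir)  # rebuilt from disk, so no stale history accumulates
        item = next_item(context)
        if item is None:
            return i
        with (workdir / "PROGRESS.md").open("a") as f:
            f.write(item + "\n")  # externalise state for the next fresh iteration
    return max_iterations


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        root = Path(d)
        (root / "SPEC.md").write_text("- parse html\n- build layout tree\n- paint pixels\n")
        (root / "PROGRESS.md").write_text("")
        print(fresh_context_loop(root), (root / "PROGRESS.md").read_text().splitlines())
```

With a three-item spec, the loop performs three working iterations, each seeing only the spec and the progress file. A real agent would also edit code and would need some verification step before an item is recorded as done.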

But tokens still aren’t free. Heavy Claude Code users can easily consume $1,000+ worth of API tokens in a month, and the $200/month subscription enables this through what is effectively a subsidy. Third-party tools like OpenCode exploited this gap, reverse-engineering Anthropic’s OAuth endpoints to run overnight agent loops at consumer prices rather than enterprise rates. Anthropic shut this down abruptly: tools like OpenCode stopped working with no warning and no migration path. The backlash was fierce. DHH called it “very customer hostile”. Users who’d invested in OpenCode workflows found themselves locked out mid-project.
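Taking the figures above at face value, the size of the implied subsidy is simple arithmetic: a user paying $200 for usage worth at least $1,000 at API prices is paying at most a fifth of list price, and the heavier the usage, the smaller that fraction.

```latex
\text{fraction of API list price paid} \;\le\; \frac{200}{1000} = 0.20,
\qquad
\text{implied monthly subsidy} \;\ge\; 1000 - 200 = 800 \ \text{(USD)}
```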

What we’re witnessing is the early territorial battle over who controls the agentic orchestration layer. Anthropic is building its own exoskeleton around Claude, in the form of Claude Code and Cowork; its approach is vertical integration. The open-source ecosystem, with tools like OpenCode, Gas Town, and the Ralph Loop pattern, represents an alternative philosophy: interoperable shells you can assemble yourself, with any model.

Takeaways: 2026 LLMs plus well-organised files and folders are turning out to be a powerful combination. Agents don’t need apps; they need a flexible substrate they can navigate fluidly. Models are commoditised; harnesses add little. The moat is your accumulated domain knowledge, held in structured specification files that drive each fresh loop, plus the learning you do along the way, captured in diverse skills. Coordination, not raw intelligence, is the bottleneck, and hierarchy beats self-organisation. Fresh context beats long context. There’s a glimmer here of something extraordinarily powerful: when this scales, it will replicate most of what we call knowledge work.

The age of large-scale mathematics

As we covered above, software development and personal productivity are being transformed by agentic AI tools that can run for hours. But the same pattern is now arriving in a field that underpins all of science: mathematics.

In the space of days, AI systems have solved multiple Erdős problems (long-standing puzzles posed by the legendary mathematician Paul Erdős) and proved a new theorem in algebraic geometry alongside Stanford and DeepMind researchers, while OpenAI’s GPT-5.2 has set a new record on Moser’s worm problem, a geometry optimisation challenge from 1966. Fifteen Erdős problems have moved from “open” to “solved” since Christmas, with eleven crediting AI involvement.

The president of the American Mathematical Society, Professor Ravi Vakil, described one AI contribution as “the kind of insight I would have been proud to produce myself.” AI models can now work with formal verification systems like Lean, which check proofs for correctness. This means that even if we don’t fully understand how the AI reached an answer, we can confirm the answer is right, provided the statement itself has been formalised correctly. The trust problem that plagued earlier attempts has a workaround.
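For readers who have not seen Lean, here is what “the checker confirms the answer” looks like in practice. These are deliberately trivial statements, nothing like an Erdős problem, and the theorem names are our own: Lean accepts the file only if each proof genuinely establishes the stated theorem, so a wrong proof fails to compile rather than slipping past a tired referee.

```lean
-- Checked by computation: both sides reduce to the same natural number.
theorem demo_two_add_two : 2 + 2 = 4 := rfl

-- Here the proof reuses a lemma from Lean's core library.
theorem demo_add_comm (a b : Nat) : a + b = b + a := Nat.add_comm a b

-- Changing `4` to `5` in the first theorem makes Lean reject the file.
```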

Kevin Buzzard at Imperial College London notes that most problems solved so far are either relatively straightforward or had received little attention. “That is progress, but mathematicians aren’t going to be looking over their shoulders just yet,” says Buzzard. “It’s green shoots.”

But even if model capabilities stay static, the implications could be profound. Thomas Bloom points out that mathematicians are typically limited to tools from their own discipline because learning adjacent fields takes months. AI changes that equation. “The fact that you can just get an answer instantly, without having to bother another human, without having to waste months learning potentially useless knowledge, opens up so many connections,” says Bloom. “That’s going to be a huge change: increasing the breadth of research that’s done.”

Terence Tao, who has helped validate some of the AI-assisted Erdős solutions, sees an even bigger possibility: a new way of doing mathematics entirely. Mathematicians typically focus on a small number of difficult problems because expert attention is scarce. Less difficult but still important problems go unstudied. If AI tools can be applied to hundreds of problems at once, it could lead to a more empirical, scientific approach. “We don’t do things like survey hundreds of problems, trying to find one or two really interesting ones, or do statistical studies like, we have two different methods, which one is better?” says Tao. “This is a type of mathematics that just isn’t done. We don’t do large-scale mathematics because we don’t have the intellectual resources, but AI is showing that you can.”
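Tao’s “we have two different methods, which one is better?” is, at its simplest, a standard statistical comparison. As a sketch, assuming each method is run on its own independent batch of problems and each problem is simply solved or not, a two-proportion z-test compares the solve rates. The function name and the counts in the usage example are hypothetical; if both methods attack the same problems, a paired test such as McNemar’s would be the better choice, and neither test accounts for problems of varying difficulty.

```python
import math


def compare_solve_rates(solved_a: int, tried_a: int, solved_b: int, tried_b: int) -> tuple[float, float]:
    """Two-proportion z-test: return (z, two-sided p-value) for method A versus method B."""
    rate_a = solved_a / tried_a
    rate_b = solved_b / tried_b
    # Pooled solve rate under the null hypothesis that the two methods are equally good.
    pooled = (solved_a + solved_b) / (tried_a + tried_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / tried_a + 1 / tried_b))
    if se == 0:  # every problem solved, or none: no evidence either way
        return 0.0, 1.0
    z = (rate_a - rate_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: method A solves 30 of 200 problems, method B solves 15 of 200.
    z, p = compare_solve_rates(30, 200, 15, 200)
    print(f"z = {z:.2f}, p = {p:.3f}")
```

With those made-up counts, z comes out at roughly 2.4 and p falls below 0.05, the kind of result that would justify preferring method A on that problem set.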

Takeaways: Mathematics is seeing meaningful progress, but not yet a revolution. Leading mathematicians are starting to appreciate frontier AI systems. The problems solved are real but modest. What’s hard to predict is what happens next: AI as a bridge between disciplines, enabling mathematicians to draw on tools they never had time to learn, or, further out, Tao’s vision of “large-scale mathematics”, surveying problem spaces empirically rather than picking limited targets based on intuition and prestige. If that arrives, the implications ripple outward into every field that depends on mathematical foundations, which is to say, essentially all of science.

EXO

LLM traitor or faithful?

Series 4 of BBC’s The Traitors is gripping the nation, with millions watching humans lie, scheme and betray at Claudia Winkleman’s Scottish castle. The format is based on Mafia, the classic party game where hidden “killers” must deceive the group while innocents try to identify them. It turns out AI researchers have been using the same game to test how models handle deception, trust and social reasoning.

A recent paper ran hundreds of AI-vs-AI Mafia games under controlled conditions. GPT-4o survived as a traitor 93% of the time, yet when playing as a faithful, it correctly identified traitors only 10% of the time. DeepSeek-V3 showed the opposite pattern: better at detecting traitors (56% accuracy) but far worse at being one (33% survival). This suggests that LLM deception skills may scale faster than detection abilities. We’re building systems that are increasingly persuasive but not correspondingly sceptical.

This YouTube stream, depicted in the screenshot, brings this experiment to life. One human plays Mafia against ten frontier models. In the first round, after Claude Sonnet is eliminated, the human says sadly that it “was my best friend”. The AIs immediately turn on him. “The best friend bit reads like they want actual info,” says GPT-5.2. He is voted out for having emotions. The pattern repeats throughout: models explicitly follow consensus rather than reason independently. “I believe sticking with the consensus helps clarify things for the town,” says one. Once a narrative forms, the AIs reinforce it rather than probe it. They coordinate voting blocs instantly but lack the individual scepticism to question whether their coalitions are built on solid ground.

The Traitors format tests some of the capabilities that matter for agentic AI: reading social signals, maintaining consistency under pressure, coordinating with allies, and detecting manipulation. Perhaps AI evaluation should take more cues from popular TV.

Weekly news roundup

This week’s news highlights major partnerships reshaping the AI landscape, regulatory pressures mounting globally, and significant infrastructure investments as companies race to secure computing resources.

AI business news

- Google’s Gemini to power Apple’s AI features like Siri (A landmark partnership that could reshape the consumer AI landscape and signals Apple’s strategic approach to competing in the AI race.)

- OpenAI to test ads in ChatGPT in bid to boost revenue (Marks a significant shift in OpenAI’s business model with implications for how AI assistants may be monetised across the industry.)

- Investors sue over Oracle’s borrowing spree (Highlights the financial risks companies face when aggressively pursuing AI infrastructure investments.)

- Two Thinking Machines Lab cofounders are leaving to rejoin OpenAI (Illustrates the ongoing talent wars in AI and the gravitational pull of well-resourced labs.)

- 2026 may be the year of the mega I.P.O. (Signals potential market maturation for leading AI companies and opportunities for broader investment access.)

AI governance news

- California AG sends letter demanding xAI stop producing deepfake content (Demonstrates increasing regulatory enforcement around AI-generated synthetic media.)

- Sadiq Khan says AI could destroy London jobs if not controlled (Reflects growing political concern about AI’s workforce impact and the push for proactive governance.)

- Song banned from Swedish charts for being AI creation (Sets precedent for how creative industries may distinguish between human and AI-generated content.)

- Elon Musk’s fraud claims against OpenAI set to go to trial (Could have significant implications for AI company governance structures and non-profit to for-profit transitions.)

- Anthropic funds Python Foundation to help improve security (Shows AI labs investing in foundational infrastructure security that underpins much of the AI ecosystem.)

AI research news

- Scaling laws for economic productivity: experimental evidence in LLM-assisted consulting, data analyst, and management tasks (Provides empirical data on how AI capabilities translate to real-world productivity gains across knowledge work.)

- DroPE: extending the context of pretrained LLMs by dropping their positional embedding (Offers a practical technique for extending context windows without retraining, relevant for long-document applications.)

- What work is AI actually doing? Uncovering the drivers of generative AI adoption (Helps organisations understand where AI delivers genuine value versus hype in workplace settings.)

- When single-agent with skills replace multi-agent systems and when they fail (Provides guidance for architects choosing between single and multi-agent approaches for AI systems.)

- Agentic memory: learning unified long-term and short-term memory management for large language model agents (Advances AI agent capabilities with improved memory systems crucial for complex, ongoing tasks.)

AI hardware news

- TSMC sees no signs of the AI boom slowing (Indicates sustained demand for AI chips and validates continued infrastructure investment strategies.)

- From tokens to burgers – a water footprint face-off (Provides important context on AI’s environmental impact for sustainability-conscious organisations.)

- Chinese AI developers say they can’t beat America without better chips (Highlights how chip restrictions are shaping the global AI competitive landscape.)

- Trump to direct key US grid operator to hold emergency auction (Signals government recognition that power infrastructure is becoming a critical bottleneck for AI growth.)

- OpenAI signs $10 billion computing deal with Nvidia challenger Cerebras (Suggests diversification away from Nvidia dominance and validates alternative AI chip architectures.)