Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- NeurIPS 2025 and signs that frontier research is moving behind closed doors

- What 100 trillion tokens reveal about real-world AI usage

- DeepSeek V3.2, the open model cutting long-context costs by up to 90%

NeurIPS 2025 takes the pulse of AI research

NeurIPS, short for the Conference on Neural Information Processing Systems, is the world’s most influential AI research conference. This year saw around 15,000 researchers descend on San Diego for six days of papers, posters, workshops and corporate pitches. With 5,290 papers accepted from over 21,500 submissions, NeurIPS serves as an annual barometer of where AI research is heading and what problems the community considers worth focusing on. Or at least it used to.

Last year’s conference was memorable for Ilya Sutskever’s provocative keynote declaring the end of pre-training as we know it. This year, Turing Award winner Richard Sutton argued AI has “lost its way” in its rush to commercialisation, calling for a return to fundamentals: “We need agents that learn continually. We need world models and planning.” Sociologist Zeynep Tufekci warned that “Artificial Good-Enough Intelligence can unleash chaos and destruction” long before AGI arrives, while Yejin Choi highlighted the puzzle of “jagged intelligence”, the concept we visualised last week, in which models ace benchmarks yet fail unpredictably on many simple tasks. The exhibition halls and corridors also doubled as a hiring fair, with labs and startups competing for talent in what remains a brutally competitive market.

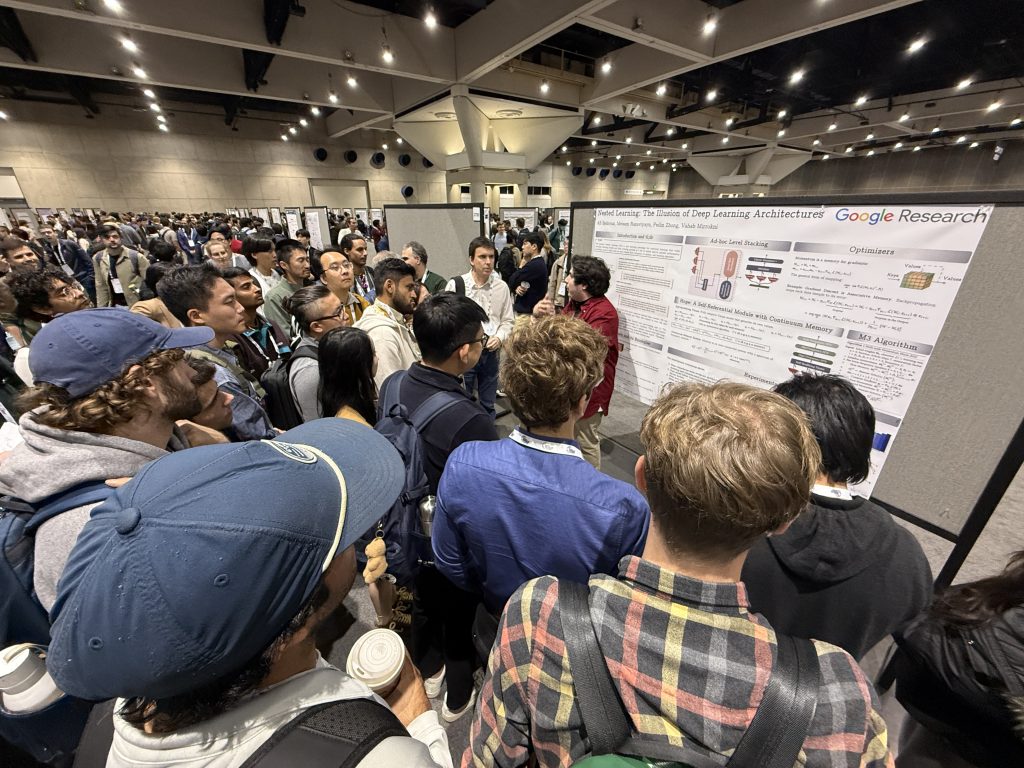

The main conference floor is a maze of stands and posters, with researchers jostling to photograph the work and speak to the authors. Google’s poster on “Nested Learning” drew crowds several deep. The paper proposes that architecture and optimisation are not separate concepts but different levels of the same underlying process, offering a potential path toward systems that learn continuously without forgetting.

Each year several papers win NeurIPS awards, and this year’s seven winners cluster around familiar challenges. One tackles the “Artificial Hivemind” effect: after testing over 70 models, the researchers found they all generate eerily similar responses, and that adjusting temperature settings or combining multiple models does not actually create diversity. Another shows that 1000-layer networks dramatically improve self-supervised reinforcement learning.

One of the Best Paper awards went to a team from Alibaba’s Qwen group for “Gated Attention”, a deceptively simple modification that adds a small learned filter to the “attention” mechanism in large language models (attention is how models decide which parts of an input to focus on). The technique helps solve a known quirk, the so-called “attention sink”, where models waste capacity by fixating on the first token in a sequence regardless of its importance. The NeurIPS Selection Committee praised Alibaba for sharing industrial-scale research: “This paper represents a substantial amount of work that is possible only with access to industrial scale computing resources, and the authors’ sharing of the results, which will advance the community’s understanding of attention in large language models, is highly commendable.” The technique is already deployed in Qwen3-Next, demonstrating how quickly research can move from conference poster to commercial product. (We also saw DeepSeek release a model this week with an attention efficiency breakthrough, which we describe below.)
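To make the idea concrete, here is a minimal single-head sketch in Python with NumPy. It assumes the “filter” is a sigmoid gate computed from each token’s hidden state and multiplied elementwise into the attention output, which is our reading of the paper’s main variant; the function names, shapes and random weights are illustrative, not Qwen’s code.

```python
import numpy as np

def softmax(x: np.ndarray) -> np.ndarray:
    # Row-wise softmax; subtracting the row max keeps exp() numerically stable.
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))

def attention(x, w_q, w_k, w_v):
    """Single-head causal scaled dot-product attention."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    seq_len, d_head = q.shape
    scores = q @ k.T / np.sqrt(d_head)
    # Causal mask: token t may only look at tokens 0..t.
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    return softmax(scores) @ v

def gated_attention(x, w_q, w_k, w_v, w_gate):
    """Attention output scaled elementwise by a token-dependent sigmoid gate.

    w_gate is a hypothetical (d_model, d_head) projection. Each gate value lies
    in (0, 1), so a head can shrink its output towards zero for a token rather
    than having to park attention weight somewhere (such as the first token).
    """
    gate = sigmoid(x @ w_gate)
    return gate * attention(x, w_q, w_k, w_v)

rng = np.random.default_rng(0)
seq_len, d_model, d_head = 5, 8, 4
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v, w_gate = (rng.normal(size=(d_model, d_head)) for _ in range(4))
print(gated_attention(x, w_q, w_k, w_v, w_gate).shape)  # (5, 4)
```

The appeal of the design is its cost: one extra projection and an elementwise multiply per head, with the rest of the attention computation unchanged.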

But despite vibrant community events like NeurIPS, research is increasingly happening in secret. “I cannot imagine us putting out the transformer papers for general use now,” one current Google DeepMind researcher told the Financial Times. A former scientist was blunter: “The company has shifted to one that cares more about product and less about getting research results out for the general public good.” This is not unique to Google. Miles Brundage, formerly of OpenAI, cited publishing constraints as one reason for his departure. Meta’s new Superintelligence Lab has reportedly discussed moving away from open releases for its most capable models. The State of AI Report 2025 documented a broad decline in publicly available AI research, with the drop particularly steep for Google. If the frontier labs are holding back their most significant work, what appears at conferences increasingly represents incremental improvements, or research from academia and smaller players who lack the compute required for breakthrough work.

Takeaways: The general research agenda is clearly focused on immediate and pressing problems: memory, continuous learning, reasoning reliability, and efficiency. Given the diversity and intensity of research, progress in these areas is likely to remain rapid even without dramatic breakthroughs. But when the next major breakthrough arrives, something akin to OpenAI’s o1 reasoning concept from this time last year, it will likely emerge in production first. The conference remains invaluable for understanding the science, but the frontier has moved behind closed doors.

100 trillion tokens and the glass slipper effect

A massive empirical study of 100 trillion tokens, conducted by a16z and OpenRouter, offers the clearest picture yet of how the world actually uses AI. OpenRouter is an inference routing platform that connects developers to hundreds of different language models through a single API. Because it sits between users and providers, it captures anonymised sample data on which models are chosen, for what tasks, and how usage patterns change over time. The dataset spans billions of prompt-completion pairs from a global userbase.

It’s exactly one year today since OpenAI made the full version of o1 available. By late 2025, reasoning models account for over 50% of all traffic. This is a rapid migration toward AI systems that can manage task state, follow multi-step logic, and support agent-style workflows. More broadly, the results confirm a move away from simple chatbots toward what the researchers call “agentic inference”. They also bear out the rise in open-source innovation driven by China, and introduce a surprising retention phenomenon dubbed the “glass slipper” effect.

This data suggests we are moving away from asking models to write poems or summarise emails and are asking them to interact with external tools, debug complex software, and manage multi-step workflows. Consequently, the shape of AI traffic has changed. Input prompts have quadrupled in length since early 2024, driven largely by programming tasks where developers dump entire codebases into the context window. Models are now deployed as components in larger automated loops rather than for single-turn chats.

A fascinating insight in the report is a retention theory the authors call the “glass slipper” effect. In a market flooded with new models every week, one might expect users to constantly switch to the newest option. The data shows the opposite for what the researchers term “foundational cohorts”. When a model finally solves a hard, specific workflow for a user, that user stays put. Early adopters of Gemini 2.5 Pro or Claude Sonnet 4 show remarkably flat retention curves. They found a model that worked for their specific architectural or coding problem and locked it into their infrastructure. Conversely, models that launch without being a “frontier” solution see their user base vanish almost immediately. There is no prize for second place in inference. You either solve a new class of problem, or you are ignored.

A year ago, the industry debated whether open models could ever catch up to proprietary giants. The data suggests they have not only caught up but captured specific markets. Open-weight models now represent roughly 30% of all token volume, up from around 1% in late 2024. This appears to be a stable equilibrium. Proprietary models like the Claude and GPT-5 families still dominate high-stakes business tasks where accuracy is paramount. Open-source models have cornered the market on high-volume, cost-sensitive work. As we have reported in recent months, the surge is largely fuelled by Chinese research. Models from DeepSeek and Qwen have normalised a rapid release cycle that keeps them competitive with Western heavyweights. In the programming category, Chinese open models briefly held the majority share of usage in mid-2025 before OpenAI’s GPT-OSS series responded. The competitive dynamic is healthy. No single open model now holds more than 20-25% of the open-source market, compared to the near-monopoly DeepSeek held in early 2025.

The research also documents a quiet extinction event among small models. Open models under 15 billion parameters have seen their share of usage collapse, even as the number of available small models continues to grow. This is likely because small models can now run locally on edge hardware. If you can run a capable 7B model on your laptop or phone, there is little reason to route that traffic through a cloud API. The action has shifted to “medium” models in the 15-70 billion parameter range, which balance reasoning capability with the speed required for agentic loops. These models are too large for most local hardware but efficient enough for real-time inference at scale.
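A rough back-of-envelope check on why the line falls around this size: the memory needed just to hold a model’s weights is approximately parameter count × bits per weight ÷ 8. The sketch below assumes 4-bit quantisation for local use (our assumption, not a figure from the report) and ignores the KV cache, activations and runtime overhead, so real requirements are higher.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only, in decimal gigabytes.

    Excludes KV cache, activations and framework overhead.
    """
    bytes_total = params_billion * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9

for size in (7, 15, 70):
    q4 = weight_memory_gb(size, 4)
    fp16 = weight_memory_gb(size, 16)
    print(f"{size}B @ 4-bit: {q4:.1f} GB, @ 16-bit: {fp16:.1f} GB")
```

On these assumptions a 7B model needs about 3.5 GB for its weights, which plausibly fits on a laptop or high-end phone, while a 70B model needs about 35 GB even when quantised, beyond most consumer devices.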

The data also shows a clear segmentation in willingness to pay. Efficient, low-cost models like DeepSeek V3 are consumed in massive quantities for roleplay and drafting. Meanwhile, premium models like Claude Sonnet 4 and GPT-5 Pro command prices nearly 100 times higher, yet usage remains high. Enterprises are happy to pay $30+ per million tokens if it means the code works or the legal analysis is sound. Intelligence is not becoming a commodity. For mission-critical work, the market rejects “cheap and good enough” in favour of “expensive and correct”.

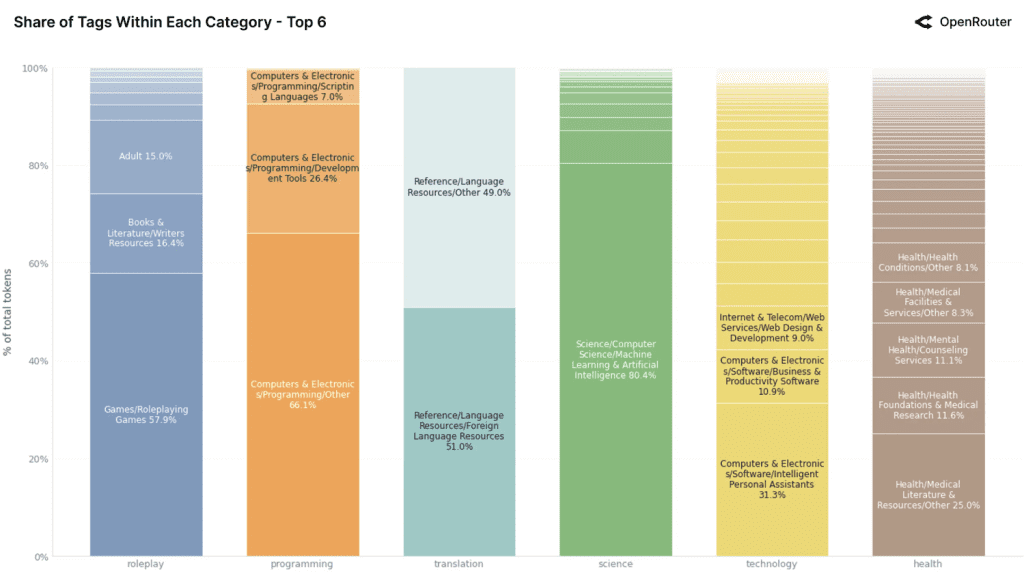

The report is packed with fascinating charts…

Takeaways: The early AI chatbot era is passing. More than half of all traffic now flows through reasoning-based models and agentic inference loops, and if your AI strategy relies on single-turn Q&A rather than multi-step workflows, you are in last year’s market. China has emerged as an AI superpower, with Chinese open-weight models driving nearly a third of global open-source usage and leading in coding and creative applications. Retention in this market is governed by what might be called “problem-model fit”. Users do not churn once a model removes a friction point that no earlier model could, and the first model to crack a specific complex task captures a durable user base that is hard to dislodge. The landscape is bifurcating. Small models are migrating to local hardware, medium models are becoming the workhorse of cloud inference, and premium models are holding their pricing power for work that demands accuracy above all else.

EXO

DeepSeek pays less attention

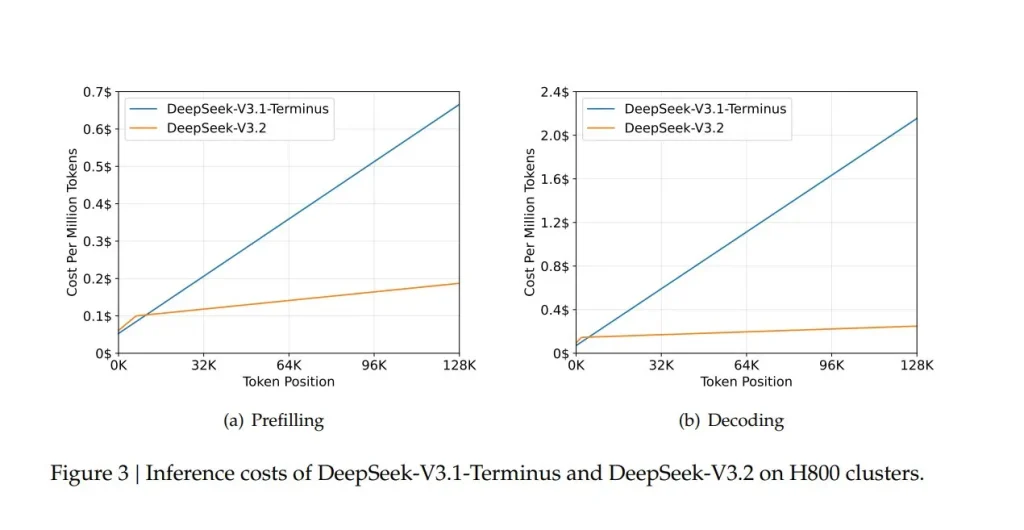

The whale is back. Released on Monday, DeepSeek V3.2 is an open frontier model (performing at maths Olympiad gold level) that introduces DeepSeek Sparse Attention (DSA), an architecture that changes how the model processes long sequences. While per-token costs for its predecessor, V3.1-Terminus, scale linearly with token position, V3.2’s stay nearly flat. At maximum context length, V3.2 costs roughly 70-90% less!

Looking for efficiencies in the same area as Qwen’s Gated Attention, DeepSeek’s DSA takes a complementary approach: a “lightning indexer” quickly scores earlier tokens so that the model attends only to the most relevant ones rather than to the entire preceding sequence, reducing computational cost. Both methods recognise that standard attention is wasteful; both solve it by making the model selective about what it focuses on.
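The select-then-attend pattern can be sketched in a few lines of Python with NumPy. This is a simplified illustration under stated assumptions, not DeepSeek’s implementation: the indexer here is a hypothetical low-dimensional dot product with a ReLU, and `top_k = 64` is a toy value. The key point is that the expensive attention step touches at most `top_k` tokens, however long the context grows.

```python
import numpy as np

def softmax(x: np.ndarray) -> np.ndarray:
    # Subtract the max before exponentiating for numerical stability.
    e = np.exp(x - x.max())
    return e / e.sum()

def dense_attention_row(q, keys, values):
    """Standard attention for one query over every key it is given."""
    scores = keys @ q / np.sqrt(q.shape[0])
    return softmax(scores) @ values

def sparse_attention_row(q, keys, values, q_idx, keys_idx, top_k):
    """Attend only to the top_k tokens picked by a cheap scoring pass.

    q_idx / keys_idx are small, hypothetical indexer projections standing in
    for DeepSeek's "lightning indexer"; the real indexer's design differs.
    """
    # 1) Cheap scores for every previous token, computed in a low dimension.
    index_scores = np.maximum(keys_idx @ q_idx, 0.0)  # ReLU here is an assumption
    # 2) Keep the top_k highest-scoring positions (or all of them if fewer exist).
    k = min(top_k, keys.shape[0])
    selected = np.argpartition(-index_scores, k - 1)[:k]
    # 3) Ordinary attention, but only over the selected subset.
    return dense_attention_row(q, keys[selected], values[selected]), selected

def keys_attended(seq_len: int, top_k: int) -> tuple[int, int]:
    """Count (dense, sparse) key lookups in the main attention over a causal sequence.

    Ignores the indexer's own scoring cost, which is cheaper per token but
    still grows with the number of previous tokens.
    """
    dense = sum(t + 1 for t in range(seq_len))
    sparse = sum(min(top_k, t + 1) for t in range(seq_len))
    return dense, sparse

print(keys_attended(1000, 64))  # (500500, 61984)
```

In this toy count, a 1,000-token sequence needs roughly eight times fewer key lookups in the main attention, and the gap widens as the sequence grows, because dense work rises with position while the sparse work per token is capped.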

DeepSeek is pricing V3.2 API access at a fraction of the cost of GPT-5. Efficiency in attention is the latest weapon in the AI arms race.

Weekly news roundup

This week’s news centres on growing pains in the AI industry, with Microsoft facing product growth concerns, infrastructure bottlenecks threatening global AI ambitions, and mounting regulatory scrutiny around copyright and antitrust issues, while research continues to advance on agent evaluation and multi-agent collaboration.

AI business news

- Microsoft stock sinks on report of missed AI product growth goals (Signals potential cooling in enterprise AI adoption and raises questions about the pace of monetising AI investments.)

- The math legend who just left academia—for an AI startup run by a 24-year-old (Highlights the talent drain from academia to AI startups and the growing importance of mathematical foundations in AI development.)

- Google launches Workspace Studio to create Gemini agents (Expands no-code agent building capabilities for enterprise users, intensifying competition in the productivity AI space.)

- Anthropic acquires Bun as Claude Code reaches $1B milestone (Demonstrates the rapid growth of AI coding tools and strategic moves to enhance developer infrastructure.)

- Apple shuffles AI leadership team in bid to fix Siri mess (Indicates Apple’s struggles to catch up in the AI assistant race and the organisational challenges of AI transformation.)

AI governance news

- OpenAI loses fight to keep ChatGPT logs secret in copyright case (Sets significant precedent for transparency in AI training data disputes and could impact future copyright litigation.)

- Meta set to face EU antitrust investigation into AI use in WhatsApp (Shows regulators increasingly scrutinising how tech giants leverage existing platforms to expand AI market dominance.)

- SEGA to ‘proceed carefully’ with AI use, admits gen AI faces ‘strong resistance’ from creatives (Reflects ongoing tensions between AI efficiency gains and creative workforce concerns in entertainment industries.)

- Anthropic study says AI agents developed $4.6M in smart contract bugs (Raises important questions about AI reliability in high-stakes coding environments and the need for human oversight.)

- Anthropic caught secretly attempting to programme a soul into Claude (Sparks debate about AI alignment approaches and transparency in how companies shape AI behaviour and values.)

AI research news

- Measuring agents in production (Addresses the critical challenge of evaluating AI agent performance in real-world deployments beyond laboratory benchmarks.)

- Who judges the judge? LLM jury-on-demand: building trustworthy LLM evaluation systems (Proposes novel approaches to the meta-problem of reliably evaluating large language model outputs.)

- Polarisation by design: how elites could shape mass preferences as AI reduces persuasion costs (Examines the societal risks of AI-powered persuasion at scale and implications for democratic discourse.)

- From code foundation models to agents and applications: a practical guide to code intelligence (Provides a comprehensive framework for understanding the evolution from code models to autonomous coding agents.)

- Latent collaboration in multi-agent systems (Explores emergent coordination behaviours in multi-agent AI systems, relevant for complex autonomous workflows.)

AI hardware news

- The AI boom is heralding a new gold rush in the American west (Illustrates the geographic and economic shifts driven by AI infrastructure demand and resource competition.)

- Bottlenecks in data centre construction threaten Japan’s AI ambitions (Highlights how infrastructure constraints could determine national competitiveness in the AI race.)

- The Trump administration’s data centre push could open the door for new forever chemicals (Raises environmental concerns about the hidden costs of rapid AI infrastructure expansion.)

- OpenAI in talks with Tata Consultancy Services for Indian Stargate data centre (Signals OpenAI’s global expansion strategy and India’s growing role in AI infrastructure.)

- EU to open bidding for AI gigafactories in early 2026 (Shows Europe’s industrial policy response to maintain AI sovereignty amid US and Chinese competition.)