Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- OpenAI’s multi-billion-dollar restructuring and $1.4 trillion compute plans

- Ultra-fast coding AI models racing beyond human supervision speeds

- Mid-sized firms outperforming enterprises on ROI

OpenAI’s trillion-dollar pivot

In a relatively quiet week for major launches, we learnt a good deal about OpenAI’s near future, and the ongoing expansion of AI compute. In a wide-ranging livestream, OpenAI CEO Sam Altman addressed the new company restructuring. OpenAI’s for-profit arm, now called OpenAI Group PBC, will be controlled by the nonprofit OpenAI Foundation. The nonprofit receives a 26% stake worth $130 billion, potentially increasing through performance warrants. Microsoft retains 27% valued at $135 billion, nearly ten times its $13.8 billion investment. The arrangement removes prior fundraising constraints and positions OpenAI for a $1 trillion-plus IPO.

This wasn’t how OpenAI was supposed to work. Founded in 2015, the organisation promised to develop AI openly and safely, free from profit motives. The nonprofit structure was meant to ensure that artificial general intelligence would benefit everyone, not just shareholders. By 2019, facing the astronomical costs of AI development, they created a capped-profit subsidiary. Now the pretence has been dropped: Microsoft’s quarterly filing this week exposed an OpenAI loss last quarter of some $11.5 billion, and they need more capital.

Critics see this as perhaps Silicon Valley’s biggest heist to date. A nonprofit funded by public goodwill and academic partnerships has been converted into a vehicle for private profit. Altman frames this as essential for OpenAI’s mission. During the livestream, he emphasised that building AGI requires infrastructure investments that only private capital can provide. The $1.4 trillion commitment to data centres and computing power he announced wouldn’t be possible under nonprofit constraints. The public benefit corporation structure, he argues, balances commercial success with societal interests better than pure nonprofit or for-profit models.

Much of the capital is earmarked for compute. Altman has set out plans to build out 30GW of compute (six Londons’ worth) at an eventual cost of $1.4 trillion over 5 years. To understand the scale, consider that no country outside the US or China has more than a single GW in total today. Europe as a whole has perhaps 2GW, Asia Pacific ex-China <1GW. France recently announced plans for a 1GW facility costing €30-50 billion. Saudi Arabia’s Neom Oxagon targets 1.5GW. South Korea’s Haenam cluster aims for 3GW by 2028. The UK’s Teesside AI Growth Zone hopes for 6GW by 2030. OpenAI wants to build 1GW every week and bring the cost down from $40 billion/GW to $20 billion/GW.

The growth becomes clearer when compared to the world’s most power-hungry industrial facilities. xAI’s Colossus in Memphis currently consumes only 0.35GW, whilst OpenAI’s Abilene Stargate sits at around 0.24GW. At full capacity, these single data centres will match the 1.2GW Maaden aluminium smelter in Saudi Arabia or approach the 1.6GW Bahrain aluminium facility, traditionally the most energy-intensive operations on Earth. We’re now entering a phase where these planned “cathedrals of compute” are becoming fully operational following the surge in spending… Amazon this week booted up their 0.5GW Rainier facility less than one year after it was first announced (to power Claude with 500,000 custom Trainium chips). They plan to have 1,000,000 chips in place by year end.
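As a rough sanity check on the build-out figures quoted above ($1.4 trillion, 30GW, 1GW per week, $40 billion and $20 billion per GW), the arithmetic can be sketched in a few lines of Python. The function and variable names are our own, and this is back-of-envelope only: we don't know exactly what the headline commitment includes.

```python
# Back-of-envelope check using only figures quoted in this piece.
TOTAL_COMMITMENT_USD = 1.4e12   # $1.4 trillion commitment
PLANNED_CAPACITY_GW = 30        # 30GW build-out
CURRENT_COST_PER_GW_USD = 40e9  # quoted current cost per GW
TARGET_COST_PER_GW_USD = 20e9   # quoted target cost per GW


def implied_cost_per_gw(total_usd, capacity_gw):
    """Average cost per GW implied by a total budget and a capacity target."""
    return total_usd / capacity_gw


def weeks_to_build(capacity_gw, gw_per_week=1.0):
    """Weeks needed to build a given capacity at a steady weekly rate."""
    return capacity_gw / gw_per_week


implied = implied_cost_per_gw(TOTAL_COMMITMENT_USD, PLANNED_CAPACITY_GW)
at_current = PLANNED_CAPACITY_GW * CURRENT_COST_PER_GW_USD
at_target = PLANNED_CAPACITY_GW * TARGET_COST_PER_GW_USD

print(f"Implied average cost: ${implied / 1e9:.1f} billion per GW")
print(f"30GW at $40 billion/GW: ${at_current / 1e12:.1f} trillion")
print(f"30GW at $20 billion/GW: ${at_target / 1e12:.1f} trillion")
print(f"Weeks for 30GW at 1GW/week: {weeks_to_build(PLANNED_CAPACITY_GW):.0f}")
```

On these numbers the headline commitment works out a little above the quoted $40 billion per GW, and hitting the $20 billion target would roughly halve the bill for the same capacity.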

And yet the power delivery to these single sites is still the biggest limiting factor, especially for >1GW facilities. The solution might be decentralisation: rather than single massive sites, companies could distribute training across dozens of smaller data centres near existing power plants with spare capacity, connected by high-speed fibre networks. Epoch AI calculates that a 10GW training run could be orchestrated across 23 sites spanning 4,800km, using spare capacity from gas plants between Illinois and Georgia. The network would cost $410 million. At $41 million per GW, that seems extremely attractive.
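To put the network figure in context, here is a minimal sketch that sets it against the $40 billion per GW build cost quoted earlier in this piece. The comparison itself is our own framing rather than part of the Epoch AI calculation, and the variable names are illustrative.

```python
# Figures quoted in this piece; the comparison is our own illustration.
NETWORK_COST_USD = 410e6        # fibre network linking the sites
TRAINING_RUN_GW = 10            # size of the distributed training run
BUILD_COST_PER_GW_USD = 40e9    # data centre build cost per GW quoted earlier

network_cost_per_gw = NETWORK_COST_USD / TRAINING_RUN_GW
share_of_build_cost = network_cost_per_gw / BUILD_COST_PER_GW_USD

print(f"Network cost per GW: ${network_cost_per_gw / 1e6:.0f} million")
print(f"Share of per-GW build cost: {share_of_build_cost:.2%}")
```

On these inputs the interconnect is a rounding error next to the cost of the facilities themselves, which is why the distributed option looks attractive.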

Meanwhile, CEO Jensen Huang kicked off Nvidia’s October tech conference with another vision for compute expansion… reaching out to what is termed the “edge”. A new partnership with Nokia for 6G networks will transform every mobile tower into a mini-data centre. The new Arc Aerial RAN Computer combines wireless communication with Grace CPUs and Blackwell GPUs, creating what Huang calls “AI on RAN,” where AI can run locally rather than phoning home to distant servers.

So, what does OpenAI envision all of this compute will power? Firstly, there was a clear indication from the livestream that despite a flurry of product releases in recent weeks such as the Atlas browser, OpenAI will focus more on building a platform for third-party developers. Altman and Jakub Pachocki, OpenAI’s Chief Scientist, also set out some pretty specific timelines: an AI research intern by September 2026, a legitimate autonomous AI researcher by March 2028, and systems capable of recursive self-improvement shortly after. These are their “planning assumptions”. OpenAI expects small scientific discoveries by 2026, medium to large discoveries by 2028. By 2030-2032, the implications become unpredictable. They’re not talking about better chatbots but about systems that accelerate human knowledge faster than humans can comprehend. Two hundred years of scientific progress in twenty years, or possibly two. None of the firms on the frontier of the AI race are indicating that they are about to slow down.

Takeaways: OpenAI’s transformation from nonprofit to trillion-dollar corporation represents a victory for capital requirements over founding ideals. The infrastructure battle has become existential, with nations and companies racing to control the means of intelligence production. Whether through centralised cathedrals, distributed edges, or mobile swarms, whoever controls the compute infrastructure of 2030 will largely determine humanity’s trajectory through the age of AGI.

Code speeds past human oversight

One field that isn’t slowing down is AI-powered software engineering. This week saw Cursor’s Composer, Cognition’s (Devin and Windsurf) SWE-1.5, and China’s MiniMax M2 all launching within days of each other. Each promises blazing speeds: Composer at 250 tokens per second, SWE-1.5 at 950 tokens per second via Cerebras, and MiniMax costing just 8% of Claude Sonnet’s price.

These mixture-of-experts architectures activate only a fraction of their parameters when running. MiniMax activates just 10 billion of its 200 billion parameters per request. Using RL (reinforcement learning) on the code AI-powered developers are generating, rather than historic datasets, these models achieve near-frontier intelligence at very high efficiency.
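For readers unfamiliar with the idea, here is a toy sketch of top-k expert routing, the mechanism that lets a mixture-of-experts model run only a fraction of its weights per request. Everything in it is hypothetical: the expert count, the random gate scores, and the trivial "experts" are stand-ins, not any vendor's actual architecture. Real models also carry shared parameters (attention layers, embeddings), so the active ratio is not simply k divided by the number of experts.

```python
import math
import random


def softmax(scores):
    """Convert raw gate scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_scores, k):
    """Run only the selected experts and mix their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))


# Toy setup (assumption): 20 experts, each a simple scaling function.
NUM_EXPERTS, TOP_K = 20, 1
experts = [lambda x, scale=i + 1: scale * x for i in range(NUM_EXPERTS)]

# In a real model a learned router produces these scores from the input token.
rng = random.Random(0)
gate_scores = [rng.gauss(0, 1) for _ in range(NUM_EXPERTS)]

selected = route_top_k(gate_scores, TOP_K)
output = moe_forward(2.0, experts, gate_scores, TOP_K)
active_fraction = TOP_K / NUM_EXPERTS

print(f"Experts run: {[i for i, _ in selected]} of {NUM_EXPERTS}")
print(f"Active fraction in this toy: {active_fraction:.0%}")
print(f"Ratio quoted for MiniMax: {10e9 / 200e9:.0%}")
```

The toy is sized so that one expert in twenty runs, mirroring the roughly one-in-twenty ratio of active to total parameters quoted above; the other nineteen experts cost nothing for that request.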

This mini-explosion of small, fast models from vendors outside the major labs could reshape the specialised model business. If companies can train competitive models for specific domains, the dominance of GPT-5, Claude and Gemini becomes less certain.

But speed creates new challenges. Testing Cursor’s Composer at ExoBrain we found the model works so quickly it’s hard to follow its reasoning. With Claude Opus or GPT-5 Codex High, developers can oversee the process step-by-step, spot issues, and intervene. Composer can restructure entire codebases in seconds, for better or worse, before you realise what’s happening.
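A rough way to see the gap is to compare the generation speeds quoted earlier with how fast a person can review output. In the sketch below, the reviewer's reading rate and the size of the change are both assumptions we picked for illustration, not measurements.

```python
# Generation speeds quoted earlier in this piece (tokens per second).
MODEL_TOKENS_PER_SEC = {"Composer": 250, "SWE-1.5": 950}

# ASSUMPTION: a careful reviewer gets through about 5 tokens per second.
ASSUMED_REVIEWER_TOKENS_PER_SEC = 5

# ASSUMPTION: a hypothetical change of 5,000 tokens of generated code.
CHANGE_SIZE_TOKENS = 5_000


def seconds_to_process(tokens, tokens_per_sec):
    """Time to generate or read a number of tokens at a steady rate."""
    return tokens / tokens_per_sec


review_seconds = seconds_to_process(
    CHANGE_SIZE_TOKENS, ASSUMED_REVIEWER_TOKENS_PER_SEC
)

for name, speed in MODEL_TOKENS_PER_SEC.items():
    generate_seconds = seconds_to_process(CHANGE_SIZE_TOKENS, speed)
    ratio = review_seconds / generate_seconds
    print(
        f"{name}: writes the change in {generate_seconds:.1f}s; "
        f"review takes about {review_seconds / 60:.0f} min ({ratio:.0f}x longer)"
    )
```

Whatever reading rate you assume, the ratio is simply model speed divided by reviewer speed, so every increase in tokens per second widens the gap unless review itself is automated or restructured.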

As these models get faster, they move well beyond human supervision speed. The philosophical divide between Cursor’s and Windsurf’s hybrid approach and Devin’s async model becomes critical: do we want AI that augments our real-time engineering, or autonomous agents we dispatch and hope return with working solutions?

The new Cursor 2.0 tackles this with a redesign that abandons the traditional file-explorer-first layout for an agent-centric workspace, where developers manage parallel AI processes rather than individual files. You can now run up to eight agents simultaneously, each in isolated git “worktrees”, comparing different approaches to the same problem before selecting the best solution. Is this the future UI for the hybrid human-agent manager?

Takeaways: The simultaneous arrival of multiple fast coding models suggests we may be reaching a point where training competitive AI has become accessible to specialised vendors, not just tech giants. But as speed increases, so does the requirement for trust, and that trust must be earned through reliability that will take some time to demonstrate.

EXO

Mid-sized firms winning the ROI race

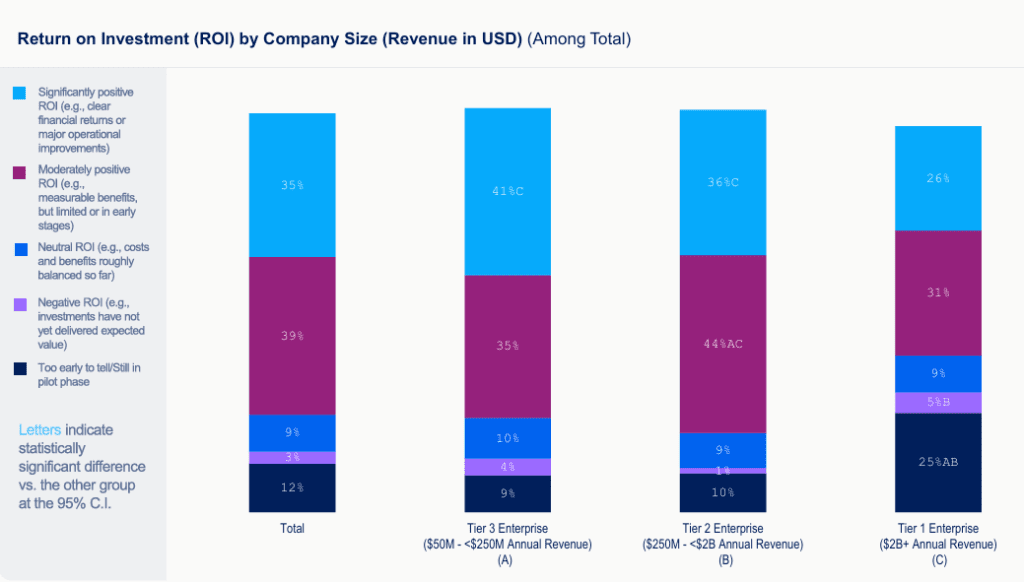

This chart comes from the 2025 Wharton-GBK AI Adoption Report, in which 74% of companies report positive AI returns. However, the largest enterprises with revenues exceeding $2 billion show the most caution, with 25% saying it’s “too early to tell”. This hesitancy amongst the biggest spenders contrasts sharply with smaller firms who report quicker wins, suggesting that scale creates complexity that delays value realisation. The data shows AI’s impact isn’t uniform across business size, and nimbler organisations are proving that sometimes less infrastructure means faster returns.

This new report is the third annual comprehensive study tracking enterprise AI adoption across US businesses. Conducted by Wharton’s Human-AI Research initiative and GBK Collective, it surveyed 800 senior decision-makers from companies with 1,000+ employees and $50 million+ revenue.

Several fascinating findings emerge from the report beyond the ROI patterns. Most notably, data analytics has overtaken meeting summarisation as the top use case, with 73% of organisations now using AI for data analysis compared to 70% for document and meeting summarisation.

60% of enterprises now have Chief AI Officers, with executive ownership rising 16 percentage points year-on-year. However, over half of these CAIO roles are additions to existing responsibilities rather than dedicated positions. Companies are also investing heavily in internal R&D, allocating 30% of AI technology budgets to custom solutions, suggesting a shift from off-the-shelf tools to bespoke capabilities.

Weekly news roundup

This week’s developments showcase AI’s accelerating integration into everyday business tools, growing safety concerns prompting governance responses, and massive infrastructure investments signalling long-term industry commitment.

AI business news

- Canva launches its own design model, adds new AI features to the platform (Shows how creative tools are building proprietary AI models to differentiate and better serve specific user needs.)

- Octoverse: A new developer joins GitHub every second as AI leads TypeScript to #1 (Highlights the explosive growth in AI-driven development and changing programming language preferences.)

- Anthropic’s Claude is learning Excel so you don’t have to (Demonstrates how LLMs are tackling mundane but essential business tasks, potentially transforming workplace productivity.)

- Meet 1X Neo: Your AI humanoid robot housekeeper for modern homes (Signals the approaching reality of AI-powered robotics entering consumer markets and daily life.)

- Uber to deploy Lucid-powered robotaxi fleet in San Francisco (Marks a significant step toward autonomous transport becoming mainstream in major cities.)

AI governance news

- Emergent introspective awareness in large language models (Reveals concerning new capabilities where AI models can analyse their own internal processes, raising questions about consciousness and control.)

- Anthropic’s pilot sabotage risk report (Provides crucial insights into potential AI misuse and deception risks that organisations must consider.)

- The Gen Z job crisis is real: 1.2 million recent grads in the U.K. competed for just 17,000 open roles (Illustrates the urgent need to address AI’s impact on employment and workforce planning.)

- Character.ai to ban teens from talking to its AI chatbots (Reflects growing concerns about AI safety for vulnerable users and potential regulatory pressures.)

- Introducing ControlArena: A library for running AI control experiments (Offers practical tools for organisations to test AI safety and control mechanisms.)

AI research news

- ReCode: Unify plan and action for universal granularity control (Advances AI’s ability to seamlessly integrate planning and execution across different levels of detail.)

- DeepAgent: A general reasoning agent with scalable toolsets (Pushes toward more versatile AI agents that can adapt to diverse tasks and environments.)

- Video-Thinker: Sparking “thinking with videos” via reinforcement learning (Opens new possibilities for AI to understand and reason about visual information in dynamic contexts.)

- Deploying interpretability to production with Rakuten: SAE probes for PII detection (Demonstrates practical applications of AI interpretability for critical data privacy compliance.)

- In-the-flow agentic system optimisation for effective planning and tool use (Improves how AI agents dynamically adapt their strategies and tool usage in real-time.)

AI hardware news

- Nvidia is officially a $5 trillion company (Underscores the massive economic value being created by AI infrastructure and compute providers.)

- Trump says he and Xi discussed Nvidia, but not the Blackwell chips (Highlights the geopolitical tensions surrounding AI chip technology and international competition.)

- Intel in talks to acquire AI chip startup SambaNova (Shows traditional semiconductor giants racing to catch up in specialised AI hardware markets.)

- Extropic aims to disrupt the data centre bonanza (Introduces potentially game-changing approaches to AI computing infrastructure efficiency.)

- Amazon opens $11 billion AI data centre Project Rainier in Indiana (Demonstrates the massive infrastructure investments required to support growing AI compute demands.)