Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- Sora 2, OpenAI’s video generator that doubles as a social app

- Microsoft’s unified Agent Framework and new Copilot capabilities for businesses

- CraftGPT, a working language model built from 439 million Minecraft blocks

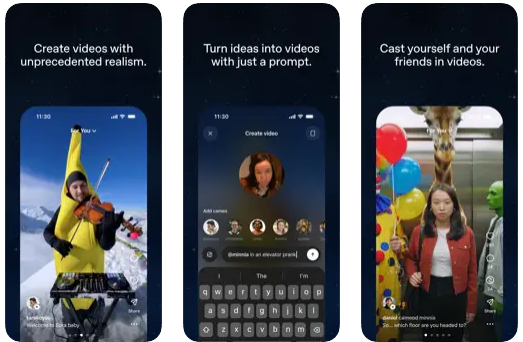

Infinite video generation meets social media

OpenAI released Sora 2 this week as both an upgraded video model and an iOS social app, complete with a TikTok-style feed. Despite being invite-only, the Sora app has already hit number one on the US App Store. It ships with watermarks and C2PA metadata on every video, plus a Cameo feature for including personal likenesses, which requires users to record verification phrases before anyone can insert them into content. Early access is limited to the US and Canada, and OpenAI has said it will have to reduce generation limits as adoption grows. A higher-quality Sora 2 Pro is coming for premium users, and most of the examples we’ve seen filling our feeds are likely showcasing Pro. The synthetic media landscape is evolving quickly. Meta recently launched Vibes, an AI-only synthetic video feed inside its Meta AI app. Google’s Veo 3 now supports vertical formats at roughly $0.40 per second, down from $0.75, and xAI’s Grok Imagine offers a “spicy” mode for subscribers.
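
To put the Veo 3 price cut in concrete terms, here is the arithmetic for a single clip. The 8-second clip length is an illustrative assumption rather than a quoted figure, and the prices are the approximate per-second rates mentioned above.

```python
# Approximate per-second prices quoted above for Google's Veo 3 (USD).
OLD_PRICE_PER_SECOND = 0.75
NEW_PRICE_PER_SECOND = 0.40

def clip_cost(seconds: float, price_per_second: float) -> float:
    """Cost of one generated clip, rounded to cents."""
    return round(seconds * price_per_second, 2)

# Hypothetical 8-second vertical clip, chosen only for illustration.
clip_seconds = 8
old_cost = clip_cost(clip_seconds, OLD_PRICE_PER_SECOND)
new_cost = clip_cost(clip_seconds, NEW_PRICE_PER_SECOND)
reduction_pct = round(100 * (1 - NEW_PRICE_PER_SECOND / OLD_PRICE_PER_SECOND), 1)

print(f"{clip_seconds}s clip: ${old_cost:.2f} -> ${new_cost:.2f} ({reduction_pct}% cheaper)")
# 8s clip: $6.00 -> $3.20 (46.7% cheaper)
```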

From a technical perspective, Sora 2 uses a video diffusion transformer architecture. The model starts with random noise (visual static), then gradually refines it into coherent video frames by predicting what should appear at each step. A transformer processes the video as chunks of spacetime information, similar to how language models handle words, but operating across space and time simultaneously. It generates clips at 1080p with synchronised audio, including dialogue and sound effects. Users can specify camera angles and shot types, and the model maintains object permanence across frames. Claims about physics understanding, however, appear overstated. Early tests show motion that’s “more video game than reality”: impressive, but not physically accurate in the way OpenAI’s marketing suggests.
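
The two ideas in the paragraph above, chopping a video into spacetime patches and refining noise step by step, can be sketched in a few lines of NumPy. This is a minimal illustration under our own assumptions, not OpenAI’s implementation: the patch sizes, the hypothetical `spacetime_patches` and `toy_denoise` helpers and the linear noise path are ours, and a stand-in function plays the role of the trained transformer. In a real model the transformer would operate on the patch tokens; here the two pieces are kept separate for clarity.

```python
import numpy as np

def spacetime_patches(video: np.ndarray, pt: int, ph: int, pw: int) -> np.ndarray:
    """Split a (T, H, W, C) video into flattened spacetime patches ("tokens").

    Each token covers pt frames x ph rows x pw columns, so a single token
    carries information across both space and time.
    """
    T, H, W, C = video.shape
    assert T % pt == 0 and H % ph == 0 and W % pw == 0, "patch sizes must divide the video"
    x = video.reshape(T // pt, pt, H // ph, ph, W // pw, pw, C)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)  # patch-grid axes first, patch contents last
    return x.reshape(-1, pt * ph * pw * C)

def toy_denoise(predict_clean, shape, steps: int = 10, seed: int = 0) -> np.ndarray:
    """Deterministic sampler for a linear noise path x_t = (1 - t) * x0 + t * noise.

    Starts from pure noise at t = 1 and, at each step, uses the model's guess of
    the clean video to move to a slightly less noisy t, reaching t = 0 at the end.
    """
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(shape)  # pure noise: the "random static"
    for step in range(steps, 0, -1):
        t, t_next = step / steps, (step - 1) / steps
        x0_hat = predict_clean(x, t)  # stand-in for the trained transformer
        x = x0_hat + (t_next / t) * (x - x0_hat)
    return x

# Usage with a tiny random "video": 16 frames of 64 x 64 RGB.
video = np.random.default_rng(1).random((16, 64, 64, 3))
tokens = spacetime_patches(video, pt=4, ph=16, pw=16)
print(tokens.shape)  # (64, 3072)

# A perfect stand-in predictor always returns the target, so sampling recovers it.
restored = toy_denoise(lambda x, t: video, video.shape)
print(np.allclose(restored, video))  # True
```

The sampler’s update follows directly from the assumed linear path: given a guess of the clean video, the current noise estimate is implied by `x`, so moving to the next noise level is a simple interpolation toward that guess.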

But what exactly is Sora 2 for? The answer depends on who you ask. OpenAI frames it as progress toward world models, general intelligence and even scientific simulation. Fans find it hugely entertaining. Sceptics see it as a social media “slop” platform with even more attention lock-in, and worry that the Cameo system will push people to disconnect from reality to an ever-greater extent. Studios view it as an automation tool in a year when many entertainment executives are cutting jobs. Copyright holders will be speaking to their lawyers. Will this new flood of synthetic content break social media? Probably not; social feeds already contain infinite scrollable material… you can’t make infinity more infinite. But we may see new dynamics in the creator economy, driven both by the use of this technology and by changing consumer perceptions. AI-generated videos will excel in paid advertising, where the goal is click-through rate rather than sustained engagement. Marketers may find that synthetic content generates effective hooks for Meta and TikTok ads, whilst the same clips fail in organic feeds that reward watch time over immediate clicks. The economics favour agencies optimising for attention capture rather than retention.

What we’ve seen less of, in both the products and the discourse, is exploration of how AI video can become an effective tool for communicating knowledge. Quickly visualising complex ideas in three dimensions, with narration, could expand how we explain many concepts. The proposed Sora 2 API could enable all manner of new visualisation tools.

OpenAI’s control mechanisms show some degree of thought: age restrictions on infinite scroll, wellbeing prompts, classification filters and mandatory watermarks. Yet open-source models with similar capabilities will soon emerge. The controls create short-term friction but won’t prevent proliferation. Synthetic content is only set to increase: in quality, in length (although length remains the real technical barrier), in creative and malicious use, and in its unpredictable disruption of our information landscape.
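
A toy model of file-level provenance helps show why these labels add friction without being intrinsic to the medium. The sketch below binds a signed claim to a file’s hash. It is not the C2PA specification: the shared-key HMAC, field names and helper functions are hypothetical simplifications, and it models only attached metadata, not watermarks embedded in the pixels themselves. The telling case is the last one: once the metadata is stripped, the file looks like any other unlabeled video.

```python
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical shared key for this toy only. Real provenance standards such as
# C2PA use certificate-based signatures and a much richer manifest format.
SIGNING_KEY = b"demo-key"

def _sign(claim: dict) -> str:
    payload = json.dumps(claim, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()

def make_manifest(content: bytes, generator: str) -> dict:
    """Build a signed claim bound to the exact bytes of the file."""
    claim = {"generator": generator, "sha256": hashlib.sha256(content).hexdigest()}
    return {"claim": claim, "signature": _sign(claim)}

def verify(content: bytes, manifest: Optional[dict]) -> str:
    if manifest is None:
        return "no provenance"  # stripped metadata looks like any unlabeled file
    if not hmac.compare_digest(_sign(manifest["claim"]), manifest["signature"]):
        return "tampered manifest"
    if hashlib.sha256(content).hexdigest() != manifest["claim"]["sha256"]:
        return "content changed"  # any re-encode, crop or trim breaks the binding
    return "verified"

clip = b"stand-in for encoded video bytes"
manifest = make_manifest(clip, generator="example-video-model")
print(verify(clip, manifest))            # verified
print(verify(clip + b"\x00", manifest))  # content changed
print(verify(clip, None))                # no provenance
```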

Takeaways: Sora 2 represents mature, consumer-friendly short-form video generation; it is no longer just a research demo or even a pro tool. There are some mechanisms to enable a degree of controlled use, but these are not intrinsic to the medium. Today’s nascent infrastructure, built around discrete, watermarked files, will ultimately be overtaken, especially as models move toward streaming, on-device or open generation. The age of zero-trust digital content is well and truly here; let’s hope we can use it to communicate without limits, not to manipulate.

Microsoft introduces agentic “vibe-working”

Microsoft shipped a new Agent Framework this week, merging Semantic Kernel and AutoGen into one open-source SDK for .NET and Python. The framework supports MCP (Model Context Protocol) for tool discovery, A2A (Agent2Agent) for cross-runtime messaging, and OpenAPI integration, with a path to Azure AI Foundry for managed hosting. Meanwhile, in a press release entitled “vibe working”, Copilot Agent Mode arrived in Excel and Word, bringing multi-step workflows to Microsoft 365, though for now only via the web apps and gated through the Frontier early-access programme. Excel Agent Mode scored 57.2% on SpreadsheetBench, compared with 71.3% for humans, a gap of about 14 percentage points. The feature essentially takes the proactive, agent-style mode seen in ChatGPT and pairs it with the files and tools of the 365 platform.
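
For readers wondering what “MCP for tool discovery” means in practice, here is a sketch of the message shapes involved. MCP runs over JSON-RPC 2.0: a client sends `tools/list` to learn what a server offers, then `tools/call` with a tool name and arguments. This is a protocol-level illustration only and does not use the Agent Framework’s own API; the `summarise_sheet` tool, its schema and the `build_call` helper are hypothetical, and transport and session initialisation are omitted.

```python
import json

# Client asks an MCP server which tools it exposes.
list_request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}

# Illustrative server reply; the tool name and input schema are hypothetical.
list_response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {
                "name": "summarise_sheet",
                "description": "Summarise a named worksheet",
                "inputSchema": {
                    "type": "object",
                    "properties": {"sheet": {"type": "string"}},
                    "required": ["sheet"],
                },
            }
        ]
    },
}

def build_call(request_id: int, tool: dict, arguments: dict) -> dict:
    """Build a tools/call request after checking required arguments are present."""
    missing = [k for k in tool["inputSchema"].get("required", []) if k not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    }

# An agent discovers the tool, then calls it with arguments matching its schema.
tool = list_response["result"]["tools"][0]
call = build_call(2, tool, {"sheet": "Q3 revenue"})
print(json.dumps(call))
```

Because discovery returns a JSON Schema for each tool, an agent runtime can validate arguments before calling, which is part of what makes a shared protocol attractive across vendors.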

Somewhat frustratingly, the launch pattern here is familiar. Microsoft announces, the business tech press covers it, but availability is not immediate. Agent Mode requires special access, and desktop app roll-out and parity are unclear. We’ve seen this before with Copilot features that took months to reach general availability, by which time competitors had moved on. But these releases really do matter; Microsoft’s platforms constrain how millions of businesses adopt AI. Copilot is embedded across Office, Teams and Windows. Semantic Kernel, and now the unified Agent Framework, shape what developers can build. If Microsoft gets this agentic package right, they unlock agentic AI for 300 million paying seats and countless more users of Azure-hosted products. If they continue to fumble, they will remain a major bottleneck.

The agent framework itself looks like solid engineering: a unified SDK, open protocols, good observability and a slick builder UI. But Copilot still lacks the capabilities that make Claude and other agent platforms compelling, such as fully enabled long-form reasoning, sophisticated tool use and long-running, multi-turn task completion. The Office agents still feel fragmented, and most of their millions of users wouldn’t have a clue what they can or can’t do. Solid document sourcing, security, approvals and other guardrails are in place, but the impact still feels underwhelming.

Microsoft needs to go faster: ship software with desktop parity, stick to timelines and make their products’ capabilities much clearer. They need to listen to real usage and adapt more quickly. The global economy won’t be “unleashed” by frameworks alone, or by compute and data centres, but by truly usable tools, and those need to come soon (or new AI-native entrants such as Gamma could start to make inroads into Microsoft’s work-platform dominance).

Takeaways: Microsoft occupies a unique position of constraint and opportunity. With nearly half a billion users, they could accelerate business AI adoption at an unprecedented rate if they got the formula right. That requires a mix of development tools and products, as we have seen this week. It also requires actually shipping software to all users, maintaining open protocols and taking a leap beyond Copilot catch-up. When will a Microsoft AI product be copied by somebody else?

EXO

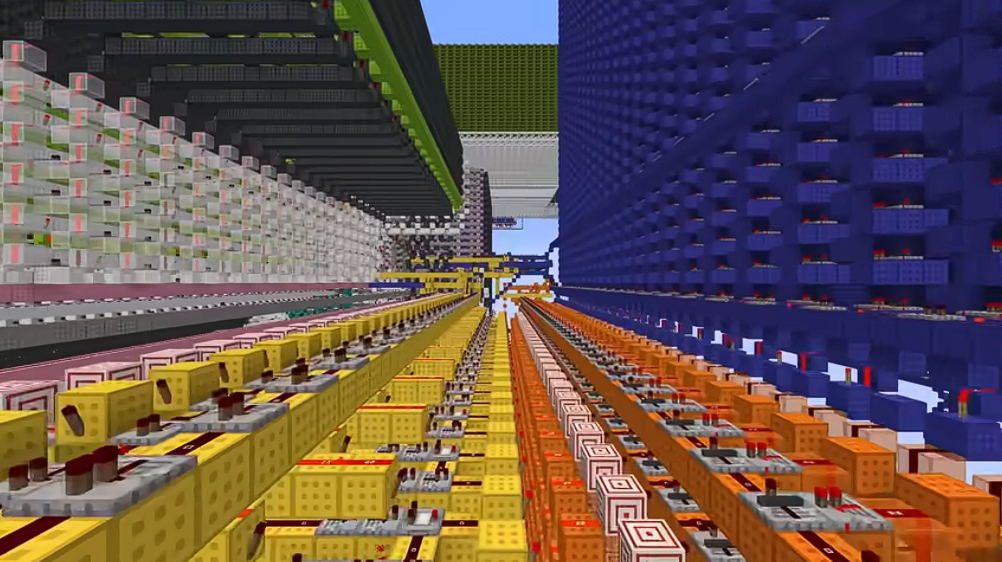

An LLM built in Minecraft

This image shows CraftGPT, a 5-million-parameter language model built entirely within Minecraft using redstone circuits! Created by sammyuri, the structure spans 439 million blocks and takes two hours to generate a single response. The construction is so large that the Distant Horizons mod was needed to capture footage of the entire structure, as standard Minecraft render distances cannot display it all at once. The model can hold basic conversations, shows awareness that it’s an AI, can offer suggestions, and varies its responses based on its seed value. LLMs are fundamentally mechanical systems: matrix multiplications, tokenisers and attention mechanisms, all reducible to ones and zeros. Whilst we debate whether AI “thinks” or “understands”, sammyuri has constructed the answer in blocks.
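
To make the “mechanical” point concrete, here is scaled dot-product attention, one of the operations named above, written with nothing but multiplication, addition, exponentials and division in plain Python. It is a generic single-head sketch under our own assumptions, not sammyuri’s design; how CraftGPT lays these operations out in redstone is not covered here.

```python
import math

def matmul(a, b):
    """Nested-loop matrix multiply: nothing but multiplications and additions."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(q[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, v)

# Two tokens with 2-dimensional queries, keys and values.
q = k = v = [[1.0, 0.0], [0.0, 1.0]]
out = attention(q, k, v)
print([[round(x, 3) for x in row] for row in out])  # [[0.67, 0.33], [0.33, 0.67]]
```

Each token ends up as a weighted blend of the values, leaning toward the token its query matches best; a full model repeats this arithmetic across many heads and layers.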

Weekly news roundup

This week’s developments showcase unprecedented levels of investment in AI companies, the emergence of specialised AI agents across industries, and growing regulatory attention alongside concerns about AI’s potential risks in biology and the creative industries.

AI business news

- Anthropic launches Claude Sonnet 4.5, capable of 30-hour continuous coding (Demonstrates the rapidly growing endurance of LLMs on long, complex technical tasks.)

- OpenAI hits $500 billion valuation after share sale to SoftBank, others (Shows the extraordinary scale of investment in AI and the financial expectations for the technology’s impact.)

- Agentforce Vibes is a new AI-assisted IDE for building Salesforce apps and agents (Illustrates how enterprise software companies are integrating AI to accelerate development and lower technical barriers.)

- Opera launches Neon AI browser to join agentic web browsing race (Signals the shift towards AI agents that can autonomously navigate and interact with the web on users’ behalf.)

- ServiceNow’s new AI Experience puts agentic automation at the fingertips of every office worker (Highlights the democratisation of AI automation tools for knowledge workers across organisations.)

AI governance news

- Gavin Newsom signs first-in-nation AI safety law (Marks a significant milestone in AI regulation and could set precedents for other states and countries.)

- Microsoft says AI can create “zero day” threats in biology (Raises critical biosecurity concerns about AI’s dual-use potential and the need for careful governance.)

- Hollywood celebrities outraged over new ‘AI actor’ Tilly Norwood (Illustrates the growing tensions between AI capabilities and creative industry workers’ livelihoods.)

- Meta Will Begin Using AI Chatbot Conversations to Target Ads (Highlights privacy concerns and the monetisation strategies for conversational AI platforms.)

- Google’s Latest AI Ransomware Defense Only Goes So Far (Shows both the potential and limitations of AI in cybersecurity applications.)

AI research news

- Meta Agents Research Environments (Provides tools for advancing multi-agent AI systems research, crucial for understanding complex AI interactions.)

- LoRA Without Regret – Thinking Machines Lab (Advances efficient fine-tuning methods that could make AI model customisation more accessible and cost-effective.)

- Unifying machine learning and interpolation theory via interpolating neural networks (Bridges theoretical gaps in understanding neural networks, potentially improving model design and reliability.)

- Document Pre-Trained Transformers (Parsing Models) (Demonstrates specialised models for document understanding, critical for enterprise AI applications.)

- ReasoningBank: Scaling Agent Self-Evolving with Reasoning Memory (Explores how AI systems can improve themselves through accumulated reasoning experience.)
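
LoRA, the subject of the Thinking Machines Lab post, is compact enough to sketch: freeze a pretrained weight matrix and train only a low-rank update B·A alongside it. The layer sizes, rank `r = 8`, scaling `alpha = 16` and initialisation below are illustrative assumptions and say nothing about the settings studied in LoRA Without Regret.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, r, alpha = 512, 512, 8, 16  # illustrative sizes, rank and scaling

W = rng.standard_normal((d_out, d_in))     # frozen pretrained weight
A = rng.standard_normal((r, d_in)) * 0.01  # trainable down-projection, small random init
B = np.zeros((d_out, r))                   # trainable up-projection, zero init

def lora_forward(x: np.ndarray) -> np.ndarray:
    """y = W x + (alpha / r) * B (A x); the d_out x d_in update B A is never materialised."""
    return W @ x + (alpha / r) * (B @ (A @ x))

x = rng.standard_normal(d_in)
full_params = W.size           # parameters updated by full fine-tuning of this layer
lora_params = A.size + B.size  # parameters LoRA trains instead
print(full_params, lora_params)  # 262144 8192
# With B initialised to zero, the adapted layer starts out identical to the original.
print(np.allclose(lora_forward(x), W @ x))  # True
```

For this layer the adapter trains about 3% of the parameters that full fine-tuning would, which is where the cost savings come from.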

AI hardware news

- Samsung and SK Hynix to feed OpenAI’s megaproject (Shows the massive hardware requirements for next-generation AI systems and supply chain implications.)

- Taiwan will not agree to 50-50 chip production deal with US, negotiator says (Highlights geopolitical complexities in securing AI hardware supply chains.)

- AI chip firm Cerebras raises $1.1 billion, adds Trump-linked 1789 Capital as investor (Demonstrates continued investment in AI chip alternatives to Nvidia and political connections in tech funding.)

- Huawei’s AI Chip Secret: Stockpiles of Foreign Tech Fuel Its Nvidia Challenge (Reveals strategies for circumventing technology restrictions and maintaining AI hardware development.)

- AI Unicorn Groq Charts Data-Center Expansion Plan (Shows the infrastructure scaling needed to support growing AI compute demands.)