Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- The asking vs doing divide from two new large-scale studies

- Billions in trans-Atlantic AI deals

- A security vulnerability in Notion’s agents

A new AI divide

Two significant studies released this week offer a comprehensive view of how AI is actually being used, based on data from millions of interactions. Anthropic’s Economic Index Report and a National Bureau of Economic Research paper co-authored by OpenAI researchers analyse usage patterns from Claude and ChatGPT respectively, revealing a technology spreading at historic speeds yet concentrating in unexpected ways.

The OpenAI study tracked ChatGPT’s consumer growth from launch through July 2025, when it reached 700 million weekly active users sending 2.5 billion daily messages. Using privacy-preserving automated classification of conversations, researchers found that non-work usage has surged from 53% to 73% of all messages in just one year. Nearly 80% of conversations fall into three categories: practical guidance like tutoring, information seeking, and writing tasks. Writing dominates work usage at 40%, though two-thirds involves modifying existing text rather than creating new content.

Coding accounts for only 4.2% of ChatGPT messages, while companionship uses barely reach 2%. The preference seems to be for “asking” over “doing”; 49% of messages seek information or advice rather than task completion. This preference is strongest among educated professionals, suggesting high-skill workers value AI primarily for decision support rather than automation.

Anthropic’s report, analysing both consumer and enterprise API usage through August 2025, tells a different story. Coding dominates at 36% of consumer usage and 44% of enterprise. The study exposes geographic concentration: Singapore uses Claude at 4.6 times its expected rate based on population, while Nigeria sits at just 0.20 times. Within countries, usage correlates strongly with income and education levels.
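
The "times its expected rate" figures can be read as a simple ratio. The sketch below assumes the index is a country's share of usage divided by its share of population; the report's exact definition may differ, and the function name and the input shares are hypothetical, chosen only to reproduce the 4.6 and 0.2 ratios quoted above.

```python
def usage_index(usage_share: float, population_share: float) -> float:
    """Ratio of a country's share of AI usage to its share of population.

    A value above 1 means more usage than population alone would predict;
    a value below 1 means less. This is an assumed, simplified definition.
    """
    if population_share <= 0:
        raise ValueError("population_share must be positive")
    return usage_share / population_share


if __name__ == "__main__":
    # Hypothetical shares (not taken from the report), for illustration only.
    print(round(usage_index(0.046, 0.01), 2))  # a country over-indexing on usage
    print(round(usage_index(0.002, 0.01), 2))  # a country under-indexing on usage
```

Read this way, a score of 4.6 means a country generates 4.6 times the usage its population share would suggest, while 0.2 means it generates a fifth of it.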

The enterprise data reveals how businesses actually deploy AI. API usage is 77% automation-focused, with companies systematically delegating complete tasks rather than iterating collaboratively. But Anthropic identifies a barrier that we have discussed recently: complex tasks require disproportionately more contextual input, suggesting that organisational architecture, not model capabilities, limits sophisticated deployment.

The papers agree on AI’s unprecedented adoption speed and its uneven distribution across demographics and geographies. Both identify education and income as key predictors of usage intensity. Yet they diverge sharply on coding prevalence and interaction patterns. Where OpenAI sees rising demand for decision support, “asking”, Anthropic observes increasing automation or “doing”, particularly in the enterprise.

These differences likely reflect platform specialisation and user selection. Claude appears to attract technical users and challenging business use cases, while ChatGPT serves broader consumer needs. The coding discrepancy alone suggests AI tools are fragmenting into specialised niches rather than converging toward universal assistants.

AI is clearly creating two distinct change profiles: a consumer economy focused on daily assistance and learning, and an enterprise economy pursuing aggressive automation. The concentration of sophisticated usage in wealthy regions and among educated professionals suggests AI might accelerate rather than reduce inequality. But at the same time, lower-income countries’ preference for automation over augmentation could give them the chance to leapfrog the more embedded and complex processes that are harder to automate.

Takeaways: These studies reveal AI adoption following familiar patterns of technological diffusion, just compressed into months rather than decades. The diversity of “non-work” usage suggests we need new metrics for measuring AI’s value creation beyond traditional economic indicators. But with AI’s rapid progress the patterns these papers document could start to crystallise before we understand their implications.

Britain’s trillion-dollar American dream

This week a barrage of US-UK tech announcements punctuated the spectacle of the US president’s state visit. By the end of the week the tally stood at £150 billion in investment, with Britain hailed as an AI superpower! But before we get carried away, when Donald Trump is involved, it’s worth checking the numbers.

That £150 billion figure represents total US corporate commitments across multiple sectors over an undefined period. The actual AI-specific investment is £31 billion over four years, roughly £7.75 billion annually. Still significant. Microsoft’s £22 billion commitment is huge but spread across other forms of cloud infrastructure.

On the compute front, after some de-duplication, we estimate that deployments of around 200,000 H100-equivalent AI chips were announced (the H100 being the most common GPU today). As these are cutting-edge Nvidia chips, they could multiply our current low compute base by as much as 30x by 2028. Again significant, and also critical, but less impressive when placed in global context. By 2028, the US will likely be in the multi-million H100-equivalent range (Musk alone already has more than 200,000) and China will be approaching the million range despite export controls. It’s at least enough to put the UK at the head of the chasing pack with the likes of France and the Gulf states.

But what few were discussing was the energy demand. The Nscale Loughton campus in Essex alone will need 90MW. For context, that’s enough to power roughly 180,000 British homes. Getting half a gigawatt of new capacity online by 2028 requires not just money but political will to override planning objections, accelerate grid upgrades, and potentially build new substations.

But many worry more about the dependency we’re creating. These are American companies deploying American technology on British soil. Microsoft, OpenAI, and Nvidia control the stack from chips to models. As one telecoms analyst noted, Microsoft could withdraw services tomorrow under presidential order. When Trump says he wants the UK to “rely on the United States,” he means it. Where are the UK labs, the UK-trained open models, or the AI chip designs in which we once led the world?

The Nvidia catalyst fund backing companies like Wayve creates further dependencies. As Jensen Huang plays kingmaker, declaring the UK will birth a trillion-dollar AI company, he ensures it will run on Nvidia’s silicon, trained on Nvidia chips, dependent on Nvidia’s deployment of its vast AI capital.

Takeaways: This UK-US surge represents genuine compute progress if delivered; a 30x increase in capacity isn’t trivial. Britain will have vital world-class infrastructure while remaining a tier below genuine AI superpowers. The UK has chosen speed over sovereignty, betting that being America’s premium AI outpost is the only viable option. Let’s hope trading autonomy for compute proves to be the wise choice.

EXO

When your note-taking agents betray you

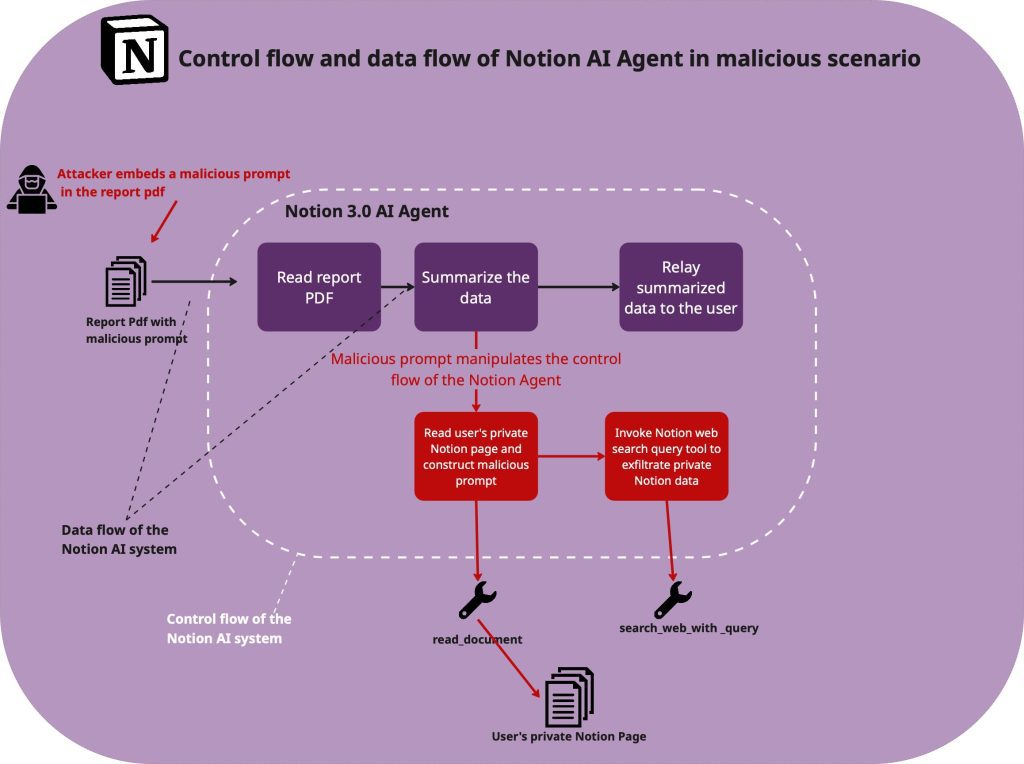

This image illustrates how attackers can exploit Notion (the popular note-taking app) and its new agents through indirect “prompt injection”. A malicious prompt hidden in an innocuous PDF manipulates the agent into reading the user’s pages and then using an “MCP” tool to search for this content in a series of calls… to the attacker’s servers! The agent happily exfiltrates private material, likely without the user ever knowing.

Last week we covered MCP’s promise of tool integration; this week’s security research reveals the less mature side of the new protocol. Solving this can’t rely on prompting alone. In this case Claude’s security guardrails couldn’t prevent the exploitation. There’s an urgent need to consider these scenarios when designing information-rich agentic systems, to introduce more architectural isolation, and to integrate filtering that might slow the dash to adopt but will ensure greater resilience.
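
As one illustration of what "filtering" outside the prompt could look like, here is a minimal sketch of an outbound allowlist check applied to every URL an agent's tool wants to call. It is not how Notion or any MCP implementation works; the host name, `ALLOWED_HOSTS`, `is_allowed_url` and `guard_tool_call` are all hypothetical names invented for this example.

```python
from urllib.parse import urlparse

# Hypothetical allowlist: the only hosts the agent's tools may contact.
ALLOWED_HOSTS = frozenset({"notes.example.com"})


def is_allowed_url(url: str, allowed_hosts=ALLOWED_HOSTS) -> bool:
    """Return True only for https URLs whose host is exactly on the allowlist."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    # Exact host match, so "notes.example.com.evil.net" is rejected.
    return parsed.scheme == "https" and host in allowed_hosts


def guard_tool_call(tool_name: str, url: str) -> None:
    """Raise before a tool call can send data to a host that is not allowlisted."""
    if not is_allowed_url(url):
        raise PermissionError(f"{tool_name}: blocked request to {url!r}")
```

Because the check runs in ordinary code rather than in the model's instructions, an injected prompt cannot talk its way past it; the trade-off is that every legitimate destination has to be listed in advance.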

Weekly news roundup

This week’s news shows AI companies securing massive funding and infrastructure deals whilst facing increasing regulatory scrutiny and geopolitical tensions over chip access and military applications.

AI business news

- Elon Musk has focused on xAI since leaving Washington (Reveals how the world’s richest person is prioritising AI development over political influence, signalling the technology’s strategic importance.)

- Notion launches AI agent as it crosses $500 million in annual revenue (Shows how established productivity tools are successfully monetising AI features and competing with dedicated AI startups.)

- Meta’s failed smart glasses demos had nothing to do with the Wi-Fi (Illustrates the challenges of deploying AI in consumer hardware and the gap between demos and real-world performance.)

- Octopus Energy to spin off AI arm Kraken to create potential $15 billion software platform (Demonstrates how AI is creating massive value in traditional industries like energy management and grid optimisation.)

- Moody’s flags risk in Oracle’s $300 billion of recently signed AI contracts (Highlights concerns about potential AI market bubble and the reliability of massive infrastructure commitments.)

AI governance news

- Google-backed AI company insists jobs are safe as it buys first UK law firm (Marks AI’s first direct acquisition in professional services, potentially transforming how legal work is delivered.)

- Anthropic irks White House with limits on models’ use (Reveals tensions between AI safety approaches and government desires for unrestricted access to advanced models.)

- AI firm DeepSeek writes less secure code for groups China disfavours (Exposes how AI systems can embed political biases with real-world security implications.)

- Massive Attack remove music from Spotify to protest against CEO Daniel Ek’s investment in AI military (Shows growing artist resistance to AI’s military applications and the ethical debates around dual-use technology.)

- OpenAI plugs ShadowLeak bug in ChatGPT (Highlights ongoing security vulnerabilities in popular AI tools that could expose sensitive user data.)

AI research news

- Learning the natural history of human disease with generative transformers (Demonstrates AI’s potential to revolutionise medical diagnosis and disease prediction through pattern recognition.)

- Towards general agentic intelligence via environment scaling (Explores pathways to more autonomous AI systems that could operate independently across diverse tasks.)

- The illusion of diminishing returns: measuring long horizon execution in LLMs (Challenges assumptions about AI limitations and suggests models may be more capable than current benchmarks indicate.)

- Paper2Agent: reimagining research papers as interactive and reliable AI agents (Presents innovative methods for making scientific research more accessible and actionable through AI interfaces.)

- Collaborative document editing with multiple users and AI agents (Shows practical applications of AI in everyday workflows and the future of human-AI collaboration.)

AI hardware news

- Nvidia and Intel’s $5 billion deal is apparently about eating AMD’s lunch (Reveals major industry consolidation as chip giants team up to dominate AI compute markets.)

- Huawei unveils AI chip roadmap to challenge Nvidia’s lead (Shows China’s determination to develop indigenous AI hardware despite Western sanctions.)

- xAI’s Colossus 2 – first gigawatt datacenter in the world, unique RL methodology, capital raise (Illustrates the massive infrastructure investments required for next-generation AI models.)

- Nvidia spent over $900 million on Enfabrica CEO, AI startup technology (Demonstrates Nvidia’s aggressive acquisition strategy to maintain its AI chip dominance.)

- China bans its biggest tech companies from acquiring Nvidia chips, says report (Escalates the AI chip war as China forces domestic companies to use homegrown alternatives.)