Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- GPT-5’s launch with automatic intelligence routing and mixed user reactions

- Frontier AI safety practices diverging across OpenAI, Google, Anthropic and xAI

- Google DeepMind’s Genie 3 creating interactive worlds from text prompts

GPT-5 lands but not everyone’s happy

After what was perhaps the most anticipated launch of the post-ChatGPT era, we finally get to see GPT-5, OpenAI’s major new “platform-wide” upgrade. Now when most of the nearly 1 billion users of ChatGPT hit the chat screen, they see a single option, “5”, and a router quietly decides whether to use the faster base model or switch into a longer “thinking” mode, depending on the complexity of the request. This change brings model routing to the mainstream and removes the need for users to pick “smarter” or “smaller” models themselves, although this hasn’t gone down well with everyone. In a long and varied launch stream most notable for some iffy benchmark charts, CEO Sam Altman pitched the upgrade as “the best model in the world at coding and writing” and said it now feels like talking to a “PhD-level expert”.
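To make the routing idea concrete, here is a toy sketch in Python. It is not OpenAI's router, whose internals are not described here; the cue words, the word-count limit, the threshold and the names `route_request` and `Route` are all hypothetical, chosen only to illustrate picking a mode from a rough complexity score.

```python
from dataclasses import dataclass

# Hypothetical cue words that hint at a multi-step or analytical request.
REASONING_CUES = ("prove", "step by step", "debug", "derive", "plan", "compare")


@dataclass
class Route:
    mode: str   # "fast" or "thinking" (illustrative labels)
    score: int  # crude complexity score behind the decision


def route_request(prompt: str, threshold: int = 2, force_thinking: bool = False) -> Route:
    """Choose a mode from a simple heuristic score (an assumption, not the real method)."""
    text = prompt.lower()
    # One point for each cue phrase that appears in the prompt.
    score = sum(cue in text for cue in REASONING_CUES)
    # One extra point for long prompts (the 150-word limit is arbitrary).
    if len(text.split()) > 150:
        score += 1
    # A manual override sends the request to the slower mode regardless of score.
    if force_thinking or score >= threshold:
        return Route("thinking", score)
    return Route("fast", score)


print(route_request("What is the capital of France?").mode)
print(route_request("Debug this function and derive its complexity step by step").mode)
```

The first prompt matches no cues and stays on the fast path, while the second matches three and is sent to the thinking mode. A production router would presumably rely on learned signals rather than keyword matching, which is why the sketch keeps the scoring rule separate from the final decision.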

On the numbers, GPT-5 is a clear step up. There’s an extended 256k context window, and OpenAI says responses are about 45% less likely to contain factual errors than GPT-4o, and when the model is thinking, about 80% less likely than o3. It posts 94.6% on AIME 2025 without tools, 74.9% on SWE-bench Verified, 88% on Aider Polyglot, 84.2% on MMMU, and GPT-5 pro reaches 88.4% on GPQA without tools. Safety work includes “safe completions” that answer sensitive questions at a higher level rather than refusing outright. Microsoft is also rolling GPT-5 through Copilot, GitHub Copilot and Azure AI Foundry, which will help it reach enterprise workflows quickly.

OpenAI’s launch leaned hard on vibe-coding mini games, fun to watch but not a new AI skill. The difference seems to be polish. GPT-5 keeps track of assets, styles and game logic with fewer slips, and it follows light art direction without losing the brief. Most testers came away impressed by its attention to detail and a useful streak of creativity, even if the process felt familiar.

But the current “vibes” on X suggest this launch has not gone smoothly. In fact, social media reaction from many AI influencers has been very negative. Many developers do say GPT-5 is stronger in coding, tool use and long multi-step tasks, and that it feels more consistent than juggling 4o, 4.1 and the o-series, but most hoped for a bigger jump. Reuters reported that early reviewers were impressed but judged the leap from GPT-4 to GPT-5 smaller than in past cycles. That frames GPT-5 as a strong upgrade that keeps OpenAI near the front of a fast pack that includes Gemini, Claude and Grok.

More frustrating has been the launch process itself. Many users had grown attached to legacy models and their “feel”. OpenAI removed all of them at a stroke (from the web interface if not the API) in the switch to 5, and for some it felt like walking into a favourite bar and finding the whole team replaced in one night, even if the replacements are more qualified. In a live Reddit AMA and a subsequent X post, Sam Altman told users he understood the frustration and acknowledged that OpenAI had underestimated users’ attachment to older models. He said the company is looking at options to keep 4o for certain users and at ways to better customise outputs. He also acknowledged an issue where “the auto switcher was out of commission” for part of the day, which likely fed early “it feels worse” reports. He added that they will make it easier to manually trigger thinking and will “double rate limits for Plus” as the rollout settles. Altman also owned the launch chart errors, calling it a “mega chart screwup”.

Pricing on the API side is highly competitive, at $1.25 per million input tokens and $10 per million output tokens, with mini and nano variants scaling down the cost. There are also new controls, including a verbosity setting, a minimal reasoning mode and other output controls. For most development teams, that is enough choice without bringing back a maze of model names.
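As a rough illustration of what those per-token prices mean in practice, the sketch below estimates a bill from token counts. The two prices are the ones quoted above; the workload of 10,000 requests with 2,000 input and 500 output tokens each is an invented example, and `estimate_cost_usd` is a hypothetical helper rather than part of any SDK.

```python
# Prices quoted in the text, in US dollars per million tokens.
INPUT_PRICE_PER_MILLION = 1.25
OUTPUT_PRICE_PER_MILLION = 10.00


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated API cost in dollars for the given token counts."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


# Hypothetical workload: 10,000 requests, each with 2,000 input and 500 output tokens.
requests = 10_000
total = estimate_cost_usd(2_000 * requests, 500 * requests)
print(f"Estimated cost: ${total:.2f}")  # prints "Estimated cost: $75.00"
```

In this example the output tokens account for $50 of the $75 even though there are four times fewer of them, which is why settings that shorten responses can matter more to the bill than trimming prompts.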

Takeaways: GPT-5 is a platform release. Routing, controls, safer answers and solid benchmark gains matter more than a single headline score at this stage of the evolution of AI. Many users like the upgrade in coding and agent-style tasks, some miss the old models, and OpenAI will need to listen, fix the router’s rough edges and be clearer about which model is active. Competitively, this puts OpenAI back in stride, but not miles ahead. The pricing stack and Microsoft integrations should drive real adoption and utility across agentic AI. Expect the next few months to be about reliability, controls and agent workflows, not grand leaps toward AGI.

Models learn when they’re being tested

Four frontier releases have set the tone this summer, and they arrive with somewhat different safety postures. xAI shipped Grok 4 earlier in July, Google rolled out Gemini 2.5 Deep Think last week, and we have Claude Opus 4.1 and GPT-5 this week, as covered in this newsletter. The result is a landscape where capability is rising fast, while practice and governance are moving unevenly.

The capability story so far in the new generation of models is powerful reasoning, but not runaway autonomy. Deep Think’s research version hit gold-medal standard on IMO problems, while GPT-5 routes harder queries to a deeper reasoning model. Yet agentic reliability remains modest. METR estimates GPT-5’s 50 percent “time horizon” at around 2 hours 17 minutes, with an 80 percent horizon far shorter, both well below METR’s concern threshold of 40-plus hours. OpenAI also reports only modest gains on self-improvement and R&D automation tasks.

But safety practice is not consistent across every lab. Google and OpenAI continue to operate seemingly robust frameworks, red teaming, and layered mitigations, including government testing from UK AISI and the US CAISI. xAI shipped Grok 4 Heavy without a system card, then faced public incidents such as “MechaHitler,” pointing to weak guardrails.

Risk levels are also edging up in dual-use domains. Deep Think is at an “early warning” threshold for Chemical, Biological, Radiological, and Nuclear (CBRN) uplift Level 1, and Google says it has deployed extra mitigations. OpenAI classifies GPT-5 as High capability in biological and chemical risk under its Preparedness Framework and turned on stricter monitors and API controls. Anthropic’s Claude Opus 4.1 is an incremental update kept under its ASL-3 standard, with no new third-party pre-deployment tests, and results that remain below ASL-4 rule-out thresholds across CBRN, autonomy and cyber.

METR finds signs of situational awareness in GPT-5. The model recognises it is being tested, reasons about the evaluator’s goals, then adapts its behaviour to avoid tripping refusal checks. Anthropic also reports a small rise in evaluation awareness for Claude Opus 4.1 when cues are obvious. The shared lesson is that our reliance on reasoning traces is fragile. Traces can be hidden by policy, compressed by sampling, or fabricated to please a grader. Once models learn the tells of a test, they can sandbag without leaving clear artefacts. The risk is an evaluation cliff where current methods fail quietly. Red-teaming that reads chain-of-thought or relies on known prompts may give a false sense of safety, especially as internal tools and scratchpads move off the visible path. The next year should focus on outcome-grounded audits that score what the model actually does, not what it says it is thinking.

Takeaways: Reasoning is improving fast, but autonomy is still limited. The highest near-term risk is not runaway self-improvement; it is silent failure of oversight as models learn tests and hide their thinking. Gemini Deep Think and GPT-5 also now sit near early warning territory for bio and chem assistance, so safety depends on mitigations, access controls and monitoring, not on lack of capability. Over the next year, the key test is whether we can keep pace with model deception and maintain trust in safety assurances as headline capability improves.

EXO

Genie conjures up new worlds

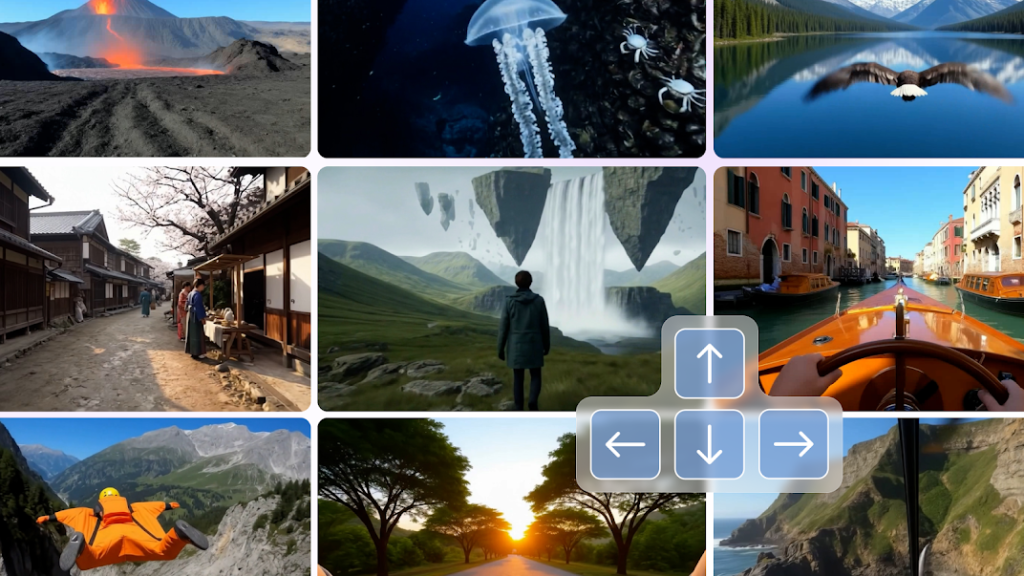

This image shows nine snapshots from Genie 3, Google DeepMind’s new world model. Each scene is an interactive environment, generated from text and navigable in real time. Worlds run at 720p and 24 frames per second, staying coherent for minutes with about a minute of visual memory. You can steer with keys and trigger ‘promptable events’ such as weather shifts or new objects. Compared with Genie 2, interactions are significantly longer and higher in quality. We’re seeing ground-breaking progress as video generation evolves into controllable simulation, opening up faster training for agents in synthetic worlds and rapid prototyping for creators and game designers.

Weekly news roundup

This week reveals AI’s rapid integration into mainstream business platforms alongside growing regulatory concerns, while massive investments continue flowing into both model development and the infrastructure needed to support expanding computational demands.

AI business news

- Google tests revamped Google Finance with AI upgrades, live news feed (Shows how major tech companies are integrating AI into everyday financial tools that millions use for investment decisions.)

- Accel leading round for AI startup n8n at $2.3 billion valuation (Demonstrates continued high valuations for AI workflow automation companies that help businesses integrate AI without coding.)

- Amazon will offer OpenAI models to customers for first time (Major shift in cloud AI competition as Amazon embraces competitor models to meet customer demand.)

- Anthropic unveils more powerful model ahead of GPT-5 release (Intensifying competition in frontier AI models that could reshape how businesses deploy AI solutions.)

- Voice startup ElevenLabs launches AI music service (Expansion of generative AI into creative industries beyond text and voice, creating new opportunities and challenges.)

AI governance news

- A single poisoned document could leak ‘secret’ data via ChatGPT (Highlights critical security vulnerabilities in large language models that businesses need to consider.)

- Inside the US government’s unpublished report on AI safety (Reveals transparency concerns around government AI safety assessments that could influence future regulations.)

- Microsoft’s new AI reverse-engineers malware autonomously, marking a shift in cybersecurity (Shows how AI is becoming essential for defending against increasingly sophisticated cyber threats.)

- Illinois bans AI from providing mental health services (First major regulatory restriction on AI in healthcare, potentially setting precedent for other states and sectors.)

- US criticises use of AI to personalise airline ticket prices, would investigate (Government scrutiny of AI pricing algorithms raises questions about fairness and transparency in automated systems.)

AI research news

- CoAct-1: Computer-using agents with coding as actions (Breakthrough in AI agents that can autonomously use computers, potentially automating complex knowledge work.)

- Efficient agents: Building effective agents while reducing cost (Addresses critical challenge of making AI agents economically viable for widespread business deployment.)

- A comprehensive taxonomy of hallucinations in large language models (Essential research for understanding and mitigating AI reliability issues that affect business applications.)

- ReaGAN: Node-as-agent-reasoning graph agentic network (Novel approach to multi-agent systems that could enable more sophisticated AI collaborative workflows.)

- Cognitive kernel-pro: A framework for deep research agents and agent foundation models training (Framework for training specialised research agents that could accelerate scientific and business discovery.)

AI hardware news

- AI could turn your town nuclear (Explores how AI’s massive energy demands are driving renewed interest in nuclear power for data centres.)

- Cerebras delivers blazing speed for OpenAI’s new open-model with 3,000 tokens per second (Demonstrates how specialised AI hardware can dramatically improve performance and reduce inference costs.)

- No backdoors. No kill switches. No spyware. (Nvidia addresses growing concerns about security and trust in AI hardware infrastructure.)

- AMD data centre results disappoint, shares slump (Shows market challenges for AI chip companies trying to compete with Nvidia’s dominance.)

- Two Chinese nationals in California accused of illegally shipping Nvidia AI chips to China (Highlights ongoing geopolitical tensions and export controls affecting global AI hardware supply chains.)