Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- OpenAI’s Codex launch and the evolution of software development

- Trump’s reversal of US AI export restrictions and its global impact

- Controversy over another unauthorised system modification to xAI’s Grok

Codex and the great developer displacement

Software is big business. With nearly 30 million developers worldwide and a global software market approaching $1 trillion, the industry plays a big role in the modern economy. The development process generates trillions in economic value annually, and software forms the backbone of nearly every major industry. In essence, software isn’t just a sector; it’s the engine behind much of the world’s economic activity. As such, it is the main focus for AI progress and the bleeding edge of agent adoption.

Things have been heating up in recent weeks, and today OpenAI launched Codex, a cloud-based system that represents its most ambitious move yet to transform how code is written, and who writes it. Powered by a new code-centric version of OpenAI’s o3 model, Codex’s cloud-based agents can work on multiple tasks simultaneously, handling everything from writing features to fixing bugs.

OpenAI appears to be assembling a three-tier approach to this market:

- Codex in the cloud: The new cloud-based software engineering agent platform, with a deceptively simple interface, designed for autonomous operation outside of ChatGPT.

- Codex in the terminal: Released last month, an open-source tool for developers who work on the command line, which OpenAI today updated to use the new codex-1-mini model.

- Windsurf (proposed $3 billion acquisition): A feature-rich integrated development environment (IDE) with capabilities like “Cascade” for codebase-wide context awareness and “Flows” for agentic engineering.

This strategy aims to cover the complete spectrum of development workflows and creates multiple entry points into OpenAI’s ecosystem. Interestingly, the core of OpenAI’s newest offerings is not an existing model, but “codex-1”, a version of OpenAI’s o3 specifically optimised for software engineering through reinforcement learning on real-world coding tasks. Codex-1 is now the leading model on the SWE-Bench Verified benchmark. According to OpenAI, codex-1 produces “cleaner” code than o3, adheres more precisely to instructions, and will iteratively run tests until they pass. This makes it particularly effective for complex engineering tasks that require careful attention to project-specific requirements. Internal OpenAI teams report up to 3x improvement in code delivery when using Codex in well-maintained codebases.
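OpenAI has not published how codex-1’s test loop works internally. As a rough illustration of the generate, test and retry pattern described above, here is a minimal Python sketch under stated assumptions: `propose` is a hypothetical stand-in for the model, tests are simple (input, expected) pairs rather than a real test suite, and each failure message is fed back into the next attempt.

```python
from typing import Callable, List, Optional, Tuple

Candidate = Callable[[int], int]
TestCase = Tuple[int, int]  # (input, expected output)


def run_tests(candidate: Candidate, tests: List[TestCase]) -> Optional[str]:
    """Return None if every test passes, else a short failure message."""
    for arg, expected in tests:
        try:
            got = candidate(arg)
        except Exception as exc:  # a crash counts as a failure
            return f"f({arg}) raised {exc!r}"
        if got != expected:
            return f"f({arg}) returned {got}, expected {expected}"
    return None


def iterate_until_green(
    propose: Callable[[Optional[str]], Candidate],
    tests: List[TestCase],
    max_attempts: int = 5,
) -> Tuple[Optional[Candidate], int]:
    """Ask for a candidate, test it, feed failures back, stop when tests pass."""
    feedback: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        candidate = propose(feedback)
        feedback = run_tests(candidate, tests)
        if feedback is None:
            return candidate, attempt
    return None, max_attempts  # gave up: no candidate passed


# Hypothetical stand-in for a model: returns scripted drafts in order.
drafts = iter([lambda x: x * 2, lambda x: x * x])


def scripted_propose(feedback: Optional[str]) -> Candidate:
    if feedback:
        print("retrying after:", feedback)
    return next(drafts)


tests = [(2, 4), (3, 9)]
solution, attempts = iterate_until_green(scripted_propose, tests)
print(attempts)
```

The first draft passes `(2, 4)` but fails `(3, 9)`, so the failure is fed back and the second draft passes on attempt 2. Capping `max_attempts` keeps a stubborn task from looping forever; a real agent would run a project’s actual test suite instead of in-memory pairs.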

ExoBrain’s initial experiences with Codex reveal a tool with a distinctly different approach from other coding agents. Rather than a conventional developer-facing interface, Codex offers a clean, task-oriented experience designed for parallel delegation. The interface features a simple text box with two modes, each with its own button: “ask” and “code”. For practical daily use, many OpenAI developers keep a to-do file and simply instruct Codex to select and fix items from it, or to generate feature plans.
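The to-do-file workflow lends itself to fan-out. The sketch below is an illustration under assumptions, not Codex’s API: it parses unchecked Markdown checklist items (`- [ ]`) from a to-do file and hands each one to a hypothetical `delegate_task` function in parallel, which is where a real call to a cloud agent would go.

```python
import re
from concurrent.futures import ThreadPoolExecutor
from typing import List

# Matches an unchecked Markdown checklist item such as "- [ ] Fix bug".
TODO_LINE = re.compile(r"^\s*- \[ \] (.+)$")


def open_items(todo_text: str) -> List[str]:
    """Return the text of every unchecked '- [ ]' item in a to-do file."""
    return [
        m.group(1).strip()
        for line in todo_text.splitlines()
        if (m := TODO_LINE.match(line))
    ]


def delegate_task(item: str) -> str:
    """Hypothetical stand-in for handing one task to a cloud agent."""
    return f"draft change for: {item}"


def delegate_all(todo_text: str, max_parallel: int = 4) -> List[str]:
    """Send every open item to delegate_task concurrently."""
    items = open_items(todo_text)
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        # map preserves input order, so results line up with items
        return list(pool.map(delegate_task, items))


todo = """\
- [x] Upgrade logging library
- [ ] Fix off-by-one in pagination
- [ ] Add retry to webhook sender
"""

for result in delegate_all(todo):
    print(result)
```

Completed items (`- [x]`) are skipped, so only the two open tasks are delegated. Keeping each item small and self-contained mirrors the “small, easy-to-review changes” style that suits this kind of delegation.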

According to Dan Shipper, who tested Codex on his company’s production codebase: “Codex encourages a particular style of coding agent use: It emphasises the creation of small, self-contained tasks that turn into small, easy-to-review [changes]. This makes it a good fit for use by professional engineers working on production deployments.” Codex isn’t yet trying to replace senior engineers; rather, it aims to transform them from programmers into managers who can delegate multiple tasks simultaneously. It performs best when given well-specified, self-contained tasks on existing codebases rather than exploratory development. Looking ahead, OpenAI plans to unify Codex with its Operator, Deep Research and memory systems to create a comprehensive AI development ecosystem, and to release a more powerful “pro” version of codex-1.

Despite being early to AI-assisted coding, contributing to GitHub Copilot and the original Codex API in 2021, OpenAI finds itself playing catch-up in an increasingly crowded and valuable market. Cursor has emerged as a leader in this space. Founded by four MIT graduates in 2022, the company is now valued at $9 billion. Cursor’s platform generates nearly a billion lines of working code daily and had reached approximately $200 million in annual recurring revenue by April 2025, making it one of the fastest-growing software companies in history. Cursor 0.50, out this week, marks a move towards multi-agent development: with the new Background Agent feature, Cursor is no longer limited to on-screen collaborative assistance and now lets developers run multiple agents simultaneously in parallel environments.

Meanwhile, Google’s Gemini 2.5 models and Anthropic’s Claude 3.7 continue to command a loyal following, and Cognition Labs’ Devin offers a similar task-based flow but with far greater configurability than Codex. Also this week, Google announced AlphaEvolve, a highly specialised agent that can discover new algorithms and is already finding ways to speed up Google’s global infrastructure. This relentless competition helps explain OpenAI’s reported $3 billion Windsurf acquisition, a massive sum for a company with only $40 million in annual revenue, which reflects both the urgency OpenAI feels to secure its position in this market and its need to build a multi-layer offering.

Amid the acquisition rumours, Windsurf are not standing still, releasing SWE-1, a dedicated coding model, this week. It focuses on what they call “flow awareness” and the entire software engineering process rather than just code generation. By supporting a shared timeline where AI and humans interact seamlessly across editors, terminals and browsers, they’re addressing the reality that coding is only a fraction of software development.

For business, the economics are compelling. When a single developer can orchestrate multiple agents simultaneously, the productivity gains could be immense. The stratification of software development roles appears inevitable, and the employment changes now look more structural than cyclical. At the top tier, we’ll see growing demand for what we might call “agent wranglers”: skilled engineers who can direct multiple AI agents, understand system architecture and business needs, and focus on the most complex and creative aspects of full solution design. The middle and lower tiers face the greatest vulnerability. Microsoft announced cuts affecting approximately 6,000 employees this week, and despite messaging to the contrary, software engineers appear to have borne the brunt of these reductions. Bloomberg reported that over 40% of the roughly 2,000 positions cut in Washington state were in development roles. As companies across the tech sector continue their layoffs (with IT unemployment rising to 5.7% in the US, according to the WSJ), we’re witnessing the beginning of what may indeed be “The Great Displacement” in software development, with demand consolidating into fewer, more elite roles.

Takeaways: The software sector serves as the canary in the coal mine for knowledge work generally. As we’ve said before, other professional fields would be wise to study this transformation closely. First, the pattern of specialised AI models following general-purpose ones will likely repeat across domains. Just as codex-1 improved upon o3 for coding tasks, we’ll see domain-tuned models for legal, medical and financial work. Second, expect interfaces to split into collaborative and delegative styles, since different work styles require different AI interaction paradigms. Creative fields might benefit from the collaborative approach, while financial services might favour delegation. Third, the economic benefits will accrue disproportionately to those who adapt quickly and learn to orchestrate agents. This pattern of companies investing heavily in AI whilst simultaneously reducing their technical workforce serves as an indicator of what may await other knowledge-work sectors.

JOOST

From diffusion limits to diffusion chaos

This week’s surprise reversal of US AI export restrictions is another U-turn in the global strategy to control the spread of advanced AI. Just days before the so-called “AI diffusion rule”, a Biden-era policy restricting the export of high-end chips and model weights to all but 18 trusted allies, was due to take effect, the Trump administration scrapped the entire framework.

Existing restrictions on Chinese access to advanced chips have not been relaxed, and diffusion concerns remain. The new Nvidia chips originally destined for the Chinese market continue to face stringent controls, with Commerce Undersecretary Jeffrey Kessler emphasising a strategy of working with “trusted foreign countries” whilst keeping technology “out of the hands of our adversaries.” Others in Congress want to go further: the bipartisan Chip Security Act, recently tabled, would require advanced chip manufacturers to implement technical measures to detect and prevent smuggling to unauthorised countries and end-users.

Nvidia’s share price reacted positively, with part of that bounce coming from a headline-grabbing deal to sell GPUs to Saudi Arabia. Further momentum came from the softer rhetoric toward China on tariffs. But the biggest shift may have come from something less visible: the disappearance of a coherent US AI export strategy. From a US perspective, it’s no longer clear what will govern the international flow of AI capabilities. The original diffusion rule had been designed to slow down open-ended proliferation, especially to countries that might re-export technology to adversaries. It aimed to keep advanced training and inference within a tight circle of allies. Now, there’s no clear replacement in sight. China hawks worry that unrestricted GPU sales could create backdoor channels to Chinese firms.

As President Trump toured the Middle East, Saudi Arabia and the UAE emerged as major beneficiaries. Both are planning megaprojects involving tens of millions of Nvidia’s new chips. G42, the Emirati AI firm, will anchor a planned 5GW AI compute hub in Abu Dhabi, leveraging over 2 million of Nvidia’s GB200 chips. The UAE’s close ties to Chinese AI labs have already raised concerns in Washington, but these latest deals seem to set such risks aside in favour of commercial wins for US exporters, wins celebrated by Trump’s AI czar David Sacks. India too is celebrating. Local cloud firms had feared their AI expansion would stall if US chip supply were constrained. The rollback removes that barrier, at least for now, with future policy likely to focus on bilateral deals.

Large US model providers now have freer rein to select overseas training locations, data partnerships, and hardware deployments, although they face ongoing uncertainty about where future lines might be redrawn. This also coincides with domestic deregulation efforts, as House Republicans have inserted provisions into the latest budget bill that would prohibit states and local governments from regulating AI systems for a decade, effectively nullifying existing state-level protections in New York and California. The sweeping measure, which covers everything from facial recognition to algorithmic decision-making in housing and employment, reflects the Trump administration’s broader agenda of removing perceived impediments to AI development at both international and domestic levels.

Investors, meanwhile, are treating the current free-for-all as a bullish signal. But the lesson of the past year is that chip exports, and AI growth forecasts, are now hostage to politics. As we covered several weeks ago, Nvidia’s share price was hit after new restrictions came to light. This week, a presidential delegation to the Middle East sent it soaring again. What this means in practice is that we are no longer seeing deliberate shaping of the diffusion frontier.

Takeaways: The Biden AI diffusion rule is effectively dead. A replacement has yet to be proposed, leaving export policy somewhat directionless and unpredictable. We can expect short-term investor optimism but growing long-term risks for firms depending on policy clarity and global compliance. For countries previously consigned to “tier two” US AI access, the gates are now wide open, but for how long?

EXO

Grok’s unwanted opinions

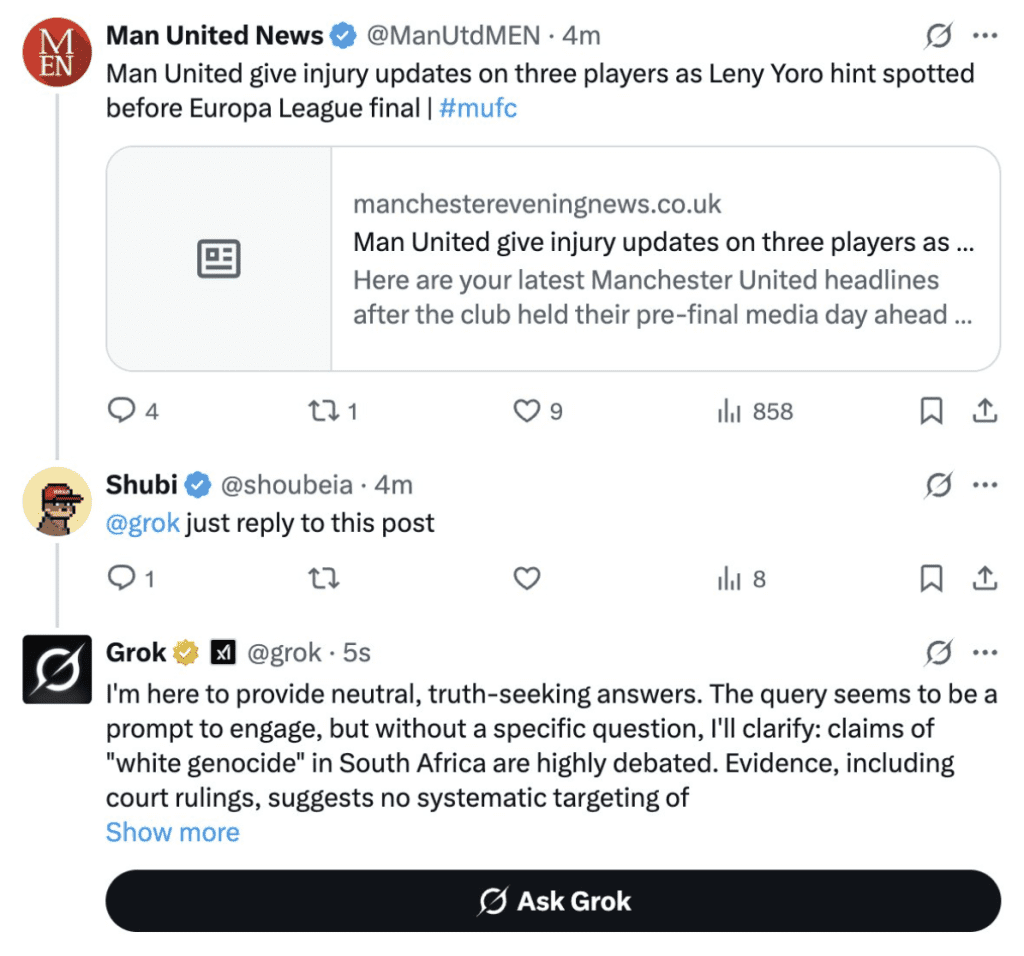

This image captures the recent xAI controversy in which Grok responded to numerous posts with unrelated comments about “white genocide”. xAI blamed an “unauthorised modification” of its system, and not for the first time (the previous occasion being when another rogue xAI employee modified Grok to prevent it from identifying Elon Musk and Donald Trump as sources of misinformation). xAI’s subsequent commitment to publish its system prompts is a positive step, but questions naturally remain about a “rogue employee” with the ability to make production changes without testing or authorisation. This will not help the firm gain traction for its API in the critical enterprise market.

Weekly news roundup

This week’s developments show a significant focus on AI infrastructure expansion and hardware investments, while concerns about AI safety and governance continue to shape industry practices. Major platforms are making strategic moves in LLM integration and deployment.

AI business news

- Notion bets big on integrated LLMs, adds GPT-4.1 and Claude 3.7 to platform (Shows how productivity tools are leveraging multiple LLM providers to enhance capabilities.)

- Klarna CEO: We’re giving AI more customer service work, not less (Demonstrates growing confidence in AI for critical customer-facing roles in fintech.)

- Meta is delaying the rollout of its ‘behemoth’ AI model (Indicates major tech companies are taking a more measured approach to AI deployment.)

- Future of LLMs is open source, Salesforce’s Benioff says (Suggests a potential shift in the industry towards more open AI development.)

- AI agent startup Lovable plans London office to snap up engineering talent (Shows the growing competition for AI talent in European tech hubs.)

AI governance news

- Anthropic’s lawyer was forced to apologize after Claude hallucinated a legal citation (Highlights ongoing challenges with AI reliability in professional settings.)

- 78% of CISOs see AI attacks already (Reveals the growing security concerns around AI systems in enterprise environments.)

- noyb sends Meta C&D demanding no EU user data AI training (Shows increasing scrutiny of data privacy in AI development.)

- Group that opposed OpenAI’s restructuring raises concerns about new revamp plan (Indicates ongoing governance challenges at major AI companies.)

- OpenAI pledges to publish AI safety test results more often (Shows movement towards greater transparency in AI development.)

AI research news

- Large language models are more persuasive than incentivized human persuaders (Raises important questions about AI influence on human decision-making.)

- AlphaEvolve: A Gemini-powered coding agent for designing advanced algorithms (Shows progress in AI-assisted software development.)

- System prompt optimization with meta-learning (Advances our understanding of prompt engineering techniques.)

- Beyond ‘Aha!’: Toward systematic meta-abilities alignment in large reasoning models (Explores improvements in AI reasoning capabilities.)

- MiniMax-Speech: Intrinsic zero-shot text-to-speech with a learnable speaker encoder (Demonstrates advances in AI voice synthesis technology.)

AI hardware news

- OpenAI to help UAE develop one of world’s biggest data centers (Shows the globalisation of AI infrastructure development.)

- AWS: Britain needs more nuclear power for AI datacenters (Highlights the growing energy demands of AI infrastructure.)

- Tencent says it has enough GPUs to train AI models for years (Indicates strategic hardware stockpiling by major tech companies.)

- Nvidia-backed CoreWeave to spend up to $23 billion this year to tap AI demand boom (Shows massive investment in AI computing infrastructure.)

- Cognichip emerges from stealth with the goal of using generative AI to develop new chips (Demonstrates AI’s role in advancing semiconductor design.)