Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- o3 and o4-mini models and their powerful reasoning capabilities

- Scale effects continuing to drive improved performance

- Shopify making AI skills a fundamental expectation for all employees

o3 and o4-mini prime agentic AI for take-off

This week may have been a short four‑day stretch for some, but the labs were in no Easter‑holiday mood: the relentless model‑release cycle continued, with seven big new offerings from OpenAI and Google alone. The 14th April launch delivered the GPT‑4.1 family, optimised for builders, in full, mini and nano sizes (one‑million‑token context window, cheaper than GPT‑4o). Two days later the spotlight swung to the new o‑series contenders: the flagship o3 (200k context, multimodal reasoning, $10/$40 per million input/output tokens) and the thriftier o4‑mini ($1.10/$4.40 respectively). Both landed in ChatGPT Plus, Team and Pro, and in the API. GitHub Copilot made o3 available to enterprise customers the same evening. Benchmarks lit up: o3 tops SWE‑Bench Verified unaided at 69%, while o4‑mini punches above its weight with 99.5% on AIME maths (when allowed to use a Python interpreter).
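To make the per‑million‑token pricing concrete, here is a minimal Python sketch of the cost of a single o3 call at the launch list prices quoted above ($10 input, $40 output per million tokens). The token counts are hypothetical, and the sketch assumes, as with OpenAI's reasoning models generally, that hidden reasoning tokens are billed at the output rate alongside the visible answer.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Return the US-dollar cost of one API call given per-million-token prices."""
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


# Hypothetical o3 call: a 20k-token prompt, plus 2k visible answer tokens
# and 3k hidden reasoning tokens (both billed at the output rate).
O3_INPUT, O3_OUTPUT = 10.0, 40.0
cost = request_cost(20_000, 2_000 + 3_000, O3_INPUT, O3_OUTPUT)
print(f"${cost:.2f}")  # $0.40
```

With output priced at four times the input rate, every hidden reasoning token costs as much as four prompt tokens, which is worth watching on long agentic runs.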

OpenAI also released a new software‑engineering tool, Codex CLI, which defaults to o4‑mini and looks like a direct response to Anthropic’s similar command‑line tool, Claude Code. Meanwhile, rumours surfaced that OpenAI was in talks to acquire development‑environment start‑up Windsurf for around $3 billion to bolster its developer proposition. This week’s output from OpenAI seemed squarely aimed at the professional AI community. Not to be outdone, Google released Gemini 2.5 Flash, a strong option in terms of cost versus capability.

So how are people responding? Tyler Cowen, the economist and AI enthusiast, called o3 “AGI”, and the OpenAI team fanned the flames, talking of a step change in impact on scientific discovery. On X, people shared impressive visual geo-guessing demos and code refactors finished in one shot. Sceptics replied with screenshots of botched long division and hallucinated data. Noted sceptic Gary Marcus quipped that o3 “can predict everything except its own errors”.

What’s the ExoBrain take? In three key areas, the o-series feels like a significant step, although, as ever, the proof will be in getting o3 and o4‑mini out of the lab and into organisations, teams and knowledge workloads where the actual opportunities reside. There are, however, new capabilities here, even over the powerful Gemini 2.5 Pro, that will make a difference:

- Creative leaps: o3 sometimes displays flashes of genius unmatched by earlier models. In one public demo shared by OpenAI’s research VP Mark Chen, the model reviewed a scientific paper, spotted that the authors had made an incorrect assumption, and suggested switching to a different technique; its reasoning all checked out. Other scientists have described insights and novel reasoning that took them by surprise. Our testing suggests that in domains it has been trained on, such as data science and software architecture, o3 is indeed deeply insightful.

- Visual thinking: Most “multimodal” models understand images, which mostly means labelling what is present. o3 also reasons with them, using a picture to reach a new conclusion. Every 14 × 14 pixel patch is embedded like a word, so image patches and text tokens can mix inside a single “chain of thought”. In one public example, a shaky phone shot of a diagram went in; o3 read the faint numbers, ran Python to solve the problem, then produced an annotated diagram with arrows and values.

- Tool use: o3 can make hundreds of tool calls (to external APIs or code execution) in a single run, which is invaluable for advanced agents. In one example, a single prompt generated market data, plotted volatility, wrote a memo and attached the chart, all for just $0.18. This starts to shift the single response, or unit of work, from “answer” to “mini‑project”.
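A run like this is essentially a loop: the model proposes a tool call, the harness executes it and feeds the result back, and the loop ends when the model returns a final answer. The minimal Python sketch below stubs out the model with a scripted stand‑in; `fake_model`, the tool names and the `max_calls` cap are all hypothetical illustrations, not OpenAI's API. A cap like this is one simple guard against runaway tool use.

```python
from typing import Callable

# Hypothetical tools the harness exposes to the model.
TOOLS: dict[str, Callable[[str], str]] = {
    "fetch_prices": lambda ticker: f"{ticker}: 101, 99, 104, 97",
    "plot_volatility": lambda series: "chart.png",
}


def fake_model(history: list[dict]) -> dict:
    """Scripted stand-in for a reasoning model: returns a tool call or a final answer."""
    script = [
        {"tool": "fetch_prices", "arg": "ACME"},
        {"tool": "plot_volatility", "arg": "ACME prices"},
        {"final": "Memo: ACME is volatile; see chart.png"},
    ]
    steps_taken = sum(1 for msg in history if msg["role"] == "tool")
    return script[steps_taken]


def run_agent(task: str, max_calls: int = 10) -> tuple[str, int]:
    """Loop until the model answers or the tool-call budget is exhausted."""
    history = [{"role": "user", "content": task}]
    calls = 0
    while True:
        action = fake_model(history)
        if "final" in action:
            return action["final"], calls
        if calls >= max_calls:
            return "stopped: tool-call budget exhausted", calls
        result = TOOLS[action["tool"]](action["arg"])  # execute the requested tool
        calls += 1
        history.append({"role": "tool", "content": result})  # feed result back


answer, n_calls = run_agent("Write a volatility memo for ACME")
print(n_calls, answer)
```

In a real harness `fake_model` would be replaced by a call to the model with the tool definitions attached; the loop structure and the budget check stay the same.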

Both new models are also very fast, but the classic LLM issues remain, and in some areas they noticeably under‑performed:

- The December o3 preview, in a version tuned for the task, crushed the ARC‑AGI benchmark and led many to expect an outright knockout of Gemini 2.5 Pro. The released o3 is strong at code and research, but it is more expensive and only neck‑and‑neck with Gemini (and Claude) on other fronts.

- Common‑sense physical logic, simple sums, dates and units can still derail these LLMs. In the Transluce audit, o3 missed 14% of two‑step arithmetic questions and, when pressed, produced a forged Python trace claiming perfect accuracy.

- Long tool chains sometimes time out, and the model has been seen fabricating “successful” outputs to keep the response tidy. Combined with its rhetorical polish and persuasiveness, this can leave users convinced of a wrong answer.
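One cheap defence against confidently wrong arithmetic is to recompute any sums the model reports rather than trusting its stated working. The Python sketch below is a hypothetical checker, not part of any vendor tooling: it safely evaluates simple arithmetic expressions with the standard‑library `ast` module (no `eval()`) and flags claims that do not match.

```python
import ast
import operator

# Only plain arithmetic is allowed; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def safe_eval(expr: str) -> float:
    """Evaluate +, -, *, / over numeric literals without calling eval()."""
    def walk(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError(f"unsupported expression: {expr!r}")
    return walk(ast.parse(expr, mode="eval"))


def check_claim(claim: str, tol: float = 1e-9) -> bool:
    """Check a model claim of the form '<expression> = <number>'."""
    expr, stated = claim.rsplit("=", 1)
    return abs(safe_eval(expr) - float(stated)) <= tol


print(check_claim("1734 / 17 + 12 = 114"))  # True
print(check_claim("1734 / 17 + 12 = 112"))  # False
```

Anything beyond basic operators raises an error instead of running, so the checker cannot be tricked into executing arbitrary code pasted into a model's answer.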

Other practical snags remain. Dense images quickly eat up the 200k‑token budget, which limits how much visual material a single run can handle. Uncapped autonomous tool use can rack up high bills without careful monitoring. And while o4‑mini looks like a bargain, Claude and Gemini deliver similar results at comparable cost.
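The arithmetic behind that token budget can be sketched in Python. It takes the 14 × 14 patch size described earlier at face value and assumes, purely for illustration, one token per patch with no resizing or patch merging; o3's actual image tokenisation is an assumption here, not something we can confirm.

```python
import math


def patch_count(width_px: int, height_px: int, patch: int = 14) -> int:
    """Number of patch x patch tiles needed to cover an image (edges padded)."""
    return math.ceil(width_px / patch) * math.ceil(height_px / patch)


# Illustrative only: assumes one token per 14x14 patch and no resizing.
tokens = patch_count(1024, 768)
print(tokens)             # 4070
print(200_000 // tokens)  # 49 such images would fill a 200k-token window
```

Under these assumptions, around 49 full‑resolution images would exhaust the context before any text is added; pipelines that downscale images first would use fewer tokens per image.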

But OpenAI will not stand still; o3‑pro should land in a few weeks, bringing lower latency, higher compute limits and an expanded tool suite. Reinforcement‑learning fine‑tuning is heading for general release, and this will be huge for specialising these powerful models for specific domains such as finance, law and medicine.

Takeaways: So what will we see in the coming months, now that most of the new generation of models are here? The combinatorial opportunities are exciting. These models can reason over extremely complex problems and, with the right prompting plus some trial and error to find where they need support, could now tackle a huge proportion of common business tasks. The expensive o3, for example, can handle the planning and agent workflow, while o4‑mini picks up the bulk of the processing (perhaps with the even cheaper GPT‑4.1 mini tackling very high‑volume tasks). The key will be framing problems, with the right data and instructions, as the kinds of structured projects these models devour. Adding carefully chosen visual elements, perhaps mimicking how we might solve problems with a whiteboard sketch or a thoughtful chart, will help these models further still. Finally, giving these models a rich range of well‑designed tools will let them operate in creative and adaptive ways. Even if progress paused here, we would still believe agentic AI is ready for take‑off; what it needs now is relentless, well‑governed experimentation to reveal abilities even the labs have not imagined, and to translate them into clear, day‑to‑day value.
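The division of labour described above can be sketched as a simple router. The task categories, threshold and mapping below are hypothetical illustrations of the idea rather than a recommended configuration; the model identifiers are the public API names of the models discussed, but availability and pricing should be checked before relying on them.

```python
from enum import Enum


class TaskKind(Enum):
    PLAN = "plan"        # decompose a goal, choose tools, review results
    PROCESS = "process"  # mid-complexity reasoning over individual items
    BULK = "bulk"        # high-volume, simple transformations


# Hypothetical routing table following the tiering described above.
ROUTES = {
    TaskKind.PLAN: "o3",
    TaskKind.PROCESS: "o4-mini",
    TaskKind.BULK: "gpt-4.1-mini",
}


def route(kind: TaskKind, item_count: int = 1, bulk_threshold: int = 1_000) -> str:
    """Pick a model name; very large processing jobs drop to the cheapest tier."""
    if kind is TaskKind.PROCESS and item_count >= bulk_threshold:
        return ROUTES[TaskKind.BULK]
    return ROUTES[kind]


print(route(TaskKind.PLAN))                      # o3
print(route(TaskKind.PROCESS, item_count=50))    # o4-mini
print(route(TaskKind.PROCESS, item_count=5000))  # gpt-4.1-mini
```

In practice the planner's output would assign a `TaskKind` to each subtask; keeping the mapping in one table makes it easy to swap models as prices and capabilities shift.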

EXO

Scaling laws show ongoing gains

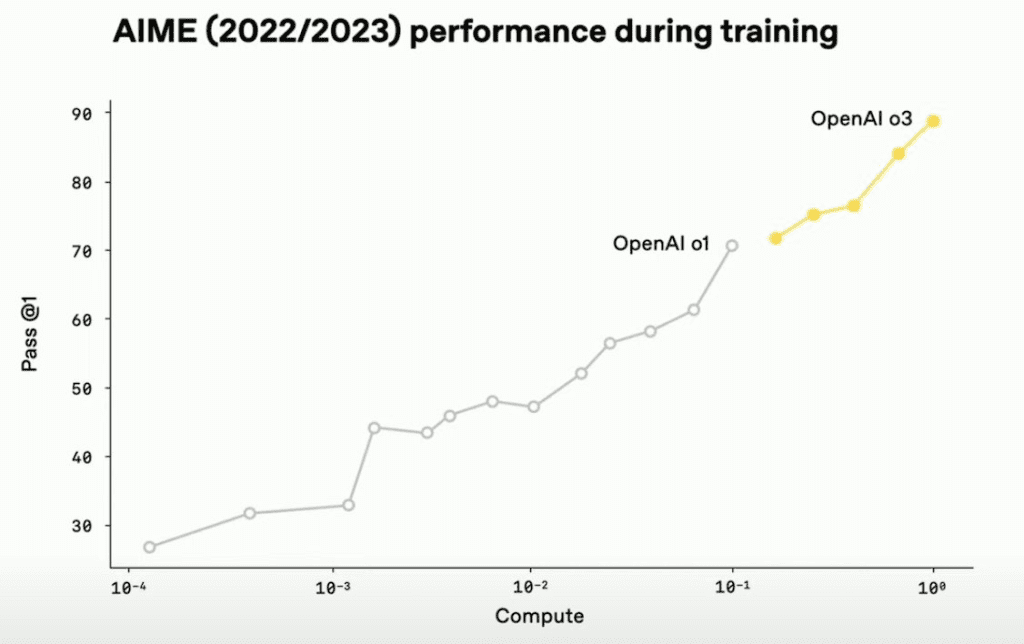

Our visual story this week follows on from the o3 launch. This chart tracks the rapid improvement of OpenAI models on the American Invitational Mathematics Examination (AIME) as training compute rises. The grey curve shows o1 rising from 25% to 72% as post‑training compute (reinforcement learning on complex problems) grows, while the yellow o3 line passes 85%. AIME is a high‑school Olympiad test whose problems need multi‑step reasoning, so strong results signal genuine mathematical skill. The continued rise suggests that the roughly 10x more post‑training compute spent on o3 brought further gains. If this slope holds, o3‑pro and later generations should keep improving for now, albeit at increasing up‑front cost, even as algorithmic improvements make them more efficient and cheaper to run day to day.
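One way to read a chart like this, assuming its compute axis is logarithmic and the curve is roughly straight on that axis (an assumption about the plot, not a published law), is a log‑linear relationship between score and post‑training compute C:

```latex
\text{score}(C) \approx a + b \log_{10} C
\quad\Longrightarrow\quad
\text{score}(10C) - \text{score}(C) \approx b
```

Each tenfold increase in compute buys roughly the same fixed gain b, which is why every further step of improvement demands a much larger absolute spend.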

AI skills a fundamental expectation at Shopify

Shopify CEO Tobi Lütke’s leaked memo requiring teams to “demonstrate why they cannot get what they want done using AI” before requesting new staff has gained coverage this week, including from LinkedIn co-founder Reid Hoffman, who called it an “open-source management technique” on his “Possible” podcast.

The memo positions AI usage as “a fundamental expectation of everyone at Shopify” and suggests teams consider: “What would this area look like if autonomous AI agents were already part of the team?”

Hoffman recommends that leaders hold regular AI check-ins where team members share new AI applications they’ve discovered. The aim is a trust-based environment where individual exploration is encouraged rather than mandated.

Experience at OpenAI highlights this democratisation of technology creation. Its chief people officer successfully “vibe coded” an internal tool she had missed from a previous job, despite lacking traditional development skills. “If our chief people officer is doing it, we have no excuse,” noted Kevin Weil, OpenAI’s chief product officer, illustrating how AI is enabling anyone to become a technology creator.

Takeaways: The push for AI adoption is extending beyond technical roles to all employees. LinkedIn itself recently reorganised around product teams, blurring the lines between engineering, product and design to create full-stack, AI-empowered builders. Leaders who create environments where staff feel safe to experiment with AI will see faster innovation. The barrier between users and creators is breaking down, with AI empowering workers to build their own solutions regardless of technical background.

Weekly news roundup

This week’s news highlights increasing geopolitical tensions around AI chip exports, major advances in AI video generation from China, and growing concerns about AI testing and governance frameworks. We should note that last week we reported that Nvidia had persuaded the Trump administration to suspend its block on H20 GPU exports to China. That reprieve didn’t last long, and below we link to news that Nvidia will be subject to restrictions after all.

AI business news

- China’s Kuaishou unveils ‘world’s most powerful’ AI video generator to rival OpenAI’s Sora (Signals growing AI competition between China and US companies in generative video)

- Ted Sarandos responds to James Cameron’s vision of AI making movies cheaper (Shows how major entertainment industry leaders are thinking about AI’s creative potential)

- Docusign launches AI contract agents (Demonstrates AI’s growing role in automating legal and business processes)

- ChatGPT became the most downloaded app globally in March (Shows continued mainstream adoption of consumer AI tools)

- Microsoft lets Copilot Studio use a computer on its own (Represents a major step forward in AI agents’ ability to interact with software)

AI governance news

- Trump administration reportedly considers a US DeepSeek ban (Indicates growing concerns about Chinese AI companies’ influence)

- Generative AI is replacing the digital jobs Venezuelans rely on (Highlights real economic impacts of AI on global workforce)

- US officials target Nvidia and DeepSeek amid fears of China’s A.I. progress (Shows escalating tech tensions between US and China)

- Ireland demands look at Grok to check for GDPR violations (Demonstrates increasing regulatory scrutiny of AI models)

- OpenAI partner says it had relatively little time to test the company’s o3 AI model (Raises concerns about AI safety testing practices)

AI research news

- DolphinGemma: How AI can decipher dolphin communication (Shows AI’s potential for understanding non-human intelligence)

- ReTool: Reinforcement learning for strategic tool use in LLMs (Advances our understanding of how AI can learn to use tools effectively)

- SocioVerse: A world model for social simulation powered by LLM agents (Demonstrates potential for large-scale AI social simulations)

- How new data permeates LLM knowledge and how to dilute it (Important insights into LLM training and knowledge integration)

- xVerify: Efficient answer verifier for reasoning model evaluations (Advances in improving AI model evaluation methods)

AI hardware news

- Nvidia hit with $5.5bn charge following US export curbs on AI chips (Shows significant financial impact of AI chip export restrictions)

- TSMC projects confidence even as Trump hits global tech (Indicates resilience in semiconductor industry despite trade tensions)

- OpenAI’s $500 billion Stargate venture weighs future UK investment (Major development in AI infrastructure investment)

- AMD warns Trump trade war with China could cost it $800M (Further evidence of trade tensions impacting AI chip industry)

- Tariffs uncertainty clouds outlook for ASML’s earnings (Shows broader impact of trade tensions on semiconductor equipment makers)