Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- Salesforce’s new Agentforce 2dx developer platform launched at their TDX conference

- Emerging consensus around possible AGI timeframes and proposed national security frameworks

- OpenAI’s projected revenue growth with SoftBank partnership driving expansion

Agents get the Salesforce treatment

Salesforce held its developer conference, TDX, in San Francisco this week, and the focus was the launch of its new agent developer experience, Agentforce 2dx. The release builds on the core platform with enhanced developer tools, a marketplace for agents, and enterprise-grade management capabilities. Analysts suggest these updates could give Salesforce an edge over rivals in the agentic enterprise market, Microsoft in particular.

In many hours of keynotes and demos, Salesforce laid out its vision for integrating AI with its established suite of business products. The highlights we spotted were as follows:

- Platform integration: Agentforce connects with Salesforce’s existing Sales and Service Clouds alongside Data Cloud. It interfaces with Slack for collaboration, Tableau for analytics, and MuleSoft for data integration. This breadth of integration is a competitive advantage and the case they make for keeping data in a single secure environment is compelling.

- Modern AI architecture: The platform is built around the ‘Atlas Reasoning Engine’, which seeks to exploit the recent evolution of LLMs into reasoners. It also draws on a range of small custom-trained models from Salesforce, including the 1B and 7B xLAM models optimised for executing actions on the Salesforce platform.

- Headless agents: The new Agentforce API enables background, data-triggered AI processes that can function without constant human guidance. These agentic workflows with LLM-powered decision-making can interface with the more traditional automation flows already in the stack.

- Prototyping: A free Developer Edition includes access to Data Cloud, data storage, and a quota of LLM generations per hour.

- Pricing shift: Salesforce is moving from the $2/conversation agent cost to a credit-based consumption model for the API. This should make costs more manageable, but they are still likely to be relatively high compared with a custom-built solution.

- Development tools: New tools include VS Code support, DX Inspector, YAML agent definitions, Apex integrations and the Testing Centre, which allows developers to simulate thousands of potential interactions and so reduces manual testing requirements.

- Integration approach: Agentforce 2dx allows for incremental AI agent integration rather than wholesale system replacement. Salesforce reports 5,000 deals with Agentforce, though the depth of implementation and specific business outcomes remain to be seen.

- Partner ecosystem: The AgentExchange marketplace includes over 200 partners, including Google Cloud, DocuSign, and Box. This ecosystem approach resembles app stores and other developer marketplaces, with the usual challenges of maintaining quality and consistency.

What’s missing?

- Cross-agent collaboration framework: While individual agents can be built and deployed, the big gap is the lack of an architecture for how multiple agents should work together on complex tasks. Enterprise workflows often require handoffs between specialised systems, but the current release lacks sophisticated orchestration between agents. Multi-agent support is, however, slated for later in the year.

- Custom model and training capabilities: The ability to train agent models on proprietary enterprise data appears limited. Competitors and custom solutions can offer private fine-tuning and a greater variety of models. Whilst there is the concept of BYOM (bring your own model), it’s not clear how such models can be used to power agents.

- Multi-modal capabilities: There was little information about how agents will handle image, voice, or video inputs. As enterprise use cases expand beyond text, these capabilities will become increasingly important.

- Edge deployment options: For latency-sensitive or offline scenarios, the ability to deploy lightweight agents at the edge isn’t addressed. This capability will be critical for manufacturing, field service, and similar applications.

- LLM cost optimisation: As token costs for large models remain significant, tools for automatically selecting the most cost-effective model for each task will be needed in the future.
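As a rough illustration of the cost optimisation point above, the sketch below picks the cheapest model that is still capable enough for a given task. Everything in it is an assumption made for illustration: the model names, prices, capability scores and the `cheapest_capable` helper are hypothetical and do not describe any Salesforce or Agentforce feature.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str                  # hypothetical model label
    cost_per_1k_tokens: float  # illustrative price in USD
    capability: int            # illustrative capability score, 1 (low) to 5 (high)


# Hypothetical catalogue: names, prices and scores are invented for illustration.
CATALOGUE = [
    ModelOption("small-action-model", 0.0002, 2),
    ModelOption("mid-general-model", 0.003, 3),
    ModelOption("large-reasoning-model", 0.03, 5),
]


def cheapest_capable(required_capability: int, models=CATALOGUE) -> ModelOption:
    """Return the lowest-cost model whose capability meets the requirement."""
    eligible = [m for m in models if m.capability >= required_capability]
    if not eligible:
        raise ValueError("no model meets the required capability")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)


def estimated_cost(model: ModelOption, tokens: int) -> float:
    """Estimate the spend in USD for a task of the given token count."""
    return model.cost_per_1k_tokens * tokens / 1000


if __name__ == "__main__":
    # A simple lookup task versus a multi-step reasoning task.
    for task, needed in [("field lookup", 1), ("multi-step planning", 4)]:
        choice = cheapest_capable(needed)
        print(f"{task}: {choice.name}, ~${estimated_cost(choice, 2000):.4f} per 2k tokens")
```

In practice the hard part is scoring what a task needs, which this sketch simply takes as an input.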

Takeaways: We’ve written before about companies such as Klarna moving away from the traditional enterprise software stacks provided by Salesforce, Microsoft, Oracle, Workday and others. (Right on cue, Klarna’s CEO issued a clarification post this week on their phasing out of Salesforce.) In reality, it’s more likely that a new layer of agentic intelligence is going to be built on those traditional digital business representations (customers, jobs, sales, tickets, invoices etc.), and Salesforce wants to retain its relevance with a broad ecosystem including developer tools, marketplace distribution, and enterprise governance. While key competitor Microsoft offers well-established productivity products, mature agent-building tools and robust cloud infrastructure, it lacks a cohesive digital business representation layer. This gives Salesforce an advantage in modelling enterprise processes, customer journeys, and business relationships. Their representation, built over years of hoovering up customer data, provides the context that many agents will need to make meaningful business decisions. The real battle isn’t about which company builds the best chatbot, but which can create the most comprehensive representation or ‘digital twin’ of an enterprise, together with the tools and integrations needed to make the agentic workforce come alive. Salesforce’s combination of business process experience and new capabilities looks promising if costs can be controlled and the roadmap quickly delivers more advanced features.

EXO

Mutually assured AI malfunction

A consensus is forming in Silicon Valley and now in Washington that Artificial General Intelligence (AGI) – AI systems capable of performing nearly any cognitive task humans can do and more – could arrive within just 2-3 years. As former Biden AI adviser Ben Buchanan told The New York Times, “AI is going to be [THE] big thing inside Donald Trump’s second term.”

This urgency has prompted notable policy proposals. Anthropic, led by CEO Dario Amodei, submitted recommendations to the White House emphasising national security testing, stronger export controls on chips, enhanced lab security protocols, and expanded energy infrastructure to maintain US technological leadership.

Similarly, a paper by former Google CEO Eric Schmidt, AI safety expert Dan Hendrycks, and Scale AI CEO Alexandr Wang introduces “Mutual Assured AI Malfunction” (MAIM). This deterrence framework suggests nations will naturally avoid pursuing unilateral AI dominance because rivals can easily sabotage advanced AI projects. Since AI datacentres are difficult to defend and training runs are vulnerable to subtle interference, any country racing toward superintelligence would likely trigger preventive cyber espionage or attack from competitors. Much like nuclear deterrence, this “balance of terror” creates a standoff where no state dares seek dominance.

Both proposals highlight compute security and AI chip supply chains as central to national security, though they differ on governance approaches. Anthropic ultimately favours structured action while the Schmidt paper emphasises deterrence dynamics.

Takeaways: As we’ve highlighted many times in this newsletter, the gap between expert warnings and policy readiness is growing as fast as AI itself advances. While many now predict AGI in 2-3 years, coherent national security frameworks are non-existent. The Trump administration faces a critical choice: pursue a unilateral AI advantage through a Manhattan Project-style initiative, or adopt the multipolar approach suggested by Schmidt’s MAIM concept. Their early moves in a chaotic first few weeks suggest a preference for dominance, despite technical realities favouring the multipolar approach. China is unlikely to accept US technological supremacy given the existential stakes, making mutual deterrence almost inevitable. The greatest near-term danger might be miscalculation during this unstable transition period rather than the technology itself.

OpenAI’s revenue projections

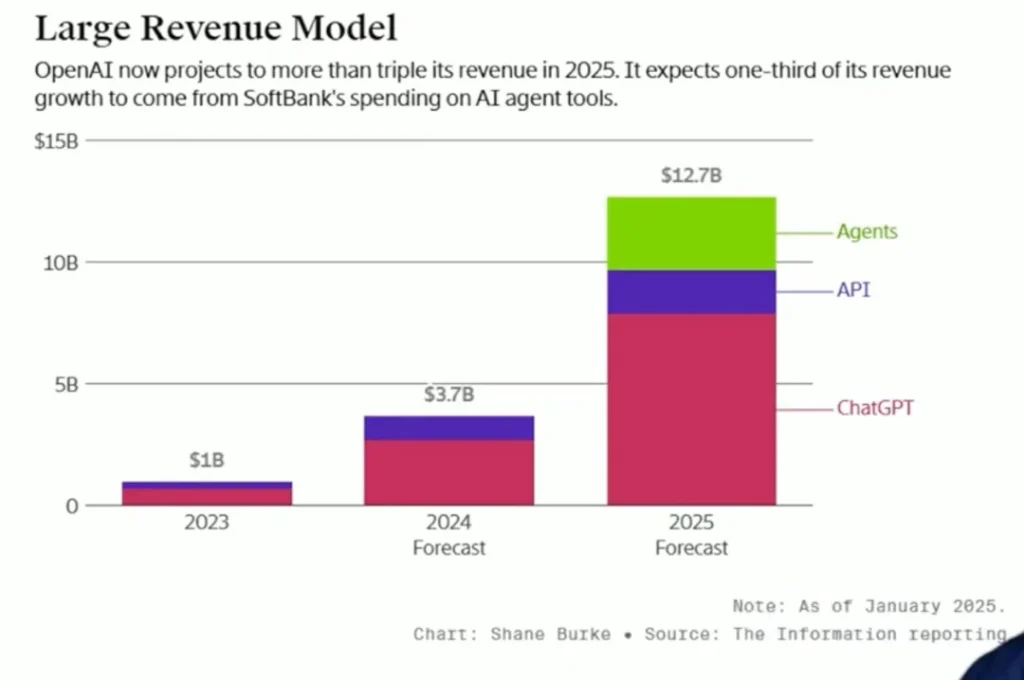

This chart from The Information projects that OpenAI’s revenue will grow from $1 billion in 2023 to $12.7 billion in 2025, with a new “Agents” category appearing this year. This growth aligns with their recent SoftBank partnership, under which SoftBank committed $3B annually to deploy “Cristal intelligence” across its companies. The chart indicates that SoftBank will drive one-third of OpenAI’s revenue growth in 2025. Their joint venture in Japan establishes a blueprint for enterprise AI adoption globally, as OpenAI looks to transition from research lab to commercial powerhouse.
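To put the headline figures in perspective, here is a quick back-of-the-envelope calculation of the implied annual growth rate. It uses only the two revenue figures quoted above; the `cagr` helper is our own illustrative function, and the result is a simple compound rate rather than anything stated in the chart.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end / start) ** (1 / years) - 1


revenue_2023 = 1.0   # USD billions, as quoted from the chart
revenue_2025 = 12.7  # USD billions, projected

multiple = revenue_2025 / revenue_2023
annual_growth = cagr(revenue_2023, revenue_2025, years=2)

print(f"{multiple:.1f}x over two years, about {annual_growth:.0%} per year")
# prints "12.7x over two years, about 256% per year"
```

In other words, the projection implies revenue multiplying by roughly 3.6 each year for two years running.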

Takeaways: OpenAI is betting on agent technology as its next major revenue stream. SoftBank’s investment signals enterprise appetite for AI automation. This partnership model may become the template for how AI companies scale globally through large corporate tie-ups. It’s also telling that ChatGPT continues to be OpenAI’s primary revenue driver, with API usage showing steadier but slower growth despite powering countless third-party products. This suggests consumer-facing leadership remains critical even as enterprise solutions emerge in a highly competitive space.

Weekly news roundup

This week shows major tech companies intensifying AI competition, growing concerns about AI safety and governance, breakthrough research in reasoning capabilities, and significant infrastructure investments to support AI development.

AI business news

- Microsoft developing AI reasoning models to compete with OpenAI (Signals growing competition between major AI players and advances in reasoning capabilities.)

- Meta’s Llama 4 AI model expected in weeks, powering voice assistants and AI agents (Shows open-source AI models continuing to advance rapidly.)

- Google’s latest experiment is an all-AI search result mode (Demonstrates how AI is reshaping core internet services.)

- OpenAI reportedly plans to charge up to $20,000 a month for specialized AI ‘agents’ (Reveals emerging business models for advanced AI capabilities.)

- A quarter of startups in YC’s current cohort have codebases that are almost entirely AI-generated (Shows the growing impact of AI on software development practices.)

AI governance news

- A well-funded Moscow-based global ‘news’ network has infected Western artificial intelligence tools worldwide with Russian propaganda (Highlights vulnerabilities in AI training data.)

- State dept. to use AI to revoke visas of foreign students who appear “pro-Hamas” (Shows controversial government applications of AI surveillance.)

- New data shows just how badly OpenAI and Perplexity are screwing over publishers (Illustrates ongoing tensions between AI companies and content creators.)

- The English schools looking to dispel ‘doom and gloom’ around AI (Demonstrates efforts to promote positive AI education.)

- Reinforcement learning pioneers harshly criticise the “unsafe” state of AI development (Highlights growing concerns about AI safety from leading experts.)

AI research news

- AI pioneers scoop Turing award for reinforcement learning work (Recognition of foundational work in AI development.)

- Visual document retrieval-augmented generation via dynamic iterative reasoning agents (Advances in combining visual and textual AI understanding.)

- LLM post-training: A deep dive into reasoning large language models (Important insights into improving LLM capabilities.)

- MPO: Boosting LLM agents with meta plan optimisation (New techniques for improving AI agent performance.)

- Chain of draft: Thinking faster by writing less (Novel approach to improving AI efficiency.)

AI hardware news

- CoreWeave acquires AI developer platform Weights & Biases (Consolidation in AI infrastructure space.)

- Mistral urges telcos to get into the hyperscaler game (Push for more diverse AI infrastructure providers.)

- TSMC pledges to spend $100B on US chip facilities (Major investment in AI chip manufacturing capacity.)

- Nordics’ efficient energy infrastructure ideal for Microsoft’s data centre expansion (Strategic location choices for sustainable AI infrastructure.)

- Broadcom shares surge as solid forecast eases demand worries for AI chips (Strong financial outlook for AI chip sector.)