Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- The recent tech stock turbulence and its implications for AI investments.

- METR’s innovative approach to benchmarking AI capabilities against human experts.

- Decoding OpenAI’s mysterious strawberry-themed teasers.

Market rollercoaster rocks tech stocks

In a week of market turmoil, tech stocks faced a significant downturn, sparking further debates about the stability of the AI boom. The catalyst? A confluence of events, from Japan’s interest rate hike to disappointing US jobs data, set against a backdrop of ongoing economic uncertainty.

Let’s summarise the sequence of events:

- Japan raised interest rates to 0.25% last week

- Rumours first emerged of a design flaw in Nvidia’s new Blackwell chip that could result in a 3-month delay in shipments

- The OECD’s leading indicator signalled a weaker US economic outlook

- Former tech giant Intel reported a $1.6 billion Q2 loss and plans a 15% workforce reduction (despite a recent injection of federal cash from the CHIPS Act)

- Disappointing US jobs data heightened economic concerns

- The US Fed failed to cut interest rates

- Off the back of the BOJ’s decision the yen surged, forcing investors who borrowed yen for tech investments to sell

- Japanese stocks suffered their biggest daily loss since 1987

- Widespread selling driven by these factors created a snowball effect

- Investors and algo trades rotated out of tech into ‘safer’ sectors

- Falling big-tech stocks, where 30% of the S&P is concentrated (some $14 trillion), dragged down indices, triggering further cross-sector selling

- As of Friday, US jobs numbers improved slightly, and stocks had rebounded somewhat with the tech sector at +14% YTD

This sequence highlights how global economic factors, monetary policy decisions, and market dynamics interacted to create a significant market event. Tech stocks now carry enormous weight in the indices and, conversely, any concerns about economic growth will downgrade outlooks for the tech platforms that power every industry. Plus, to a degree the tech correction also reflects the longer-term trend of struggling SaaS firms and the reduced VC deal flows seen in 2024 (somewhat at odds with the default narrative of excessive AI investment).

But is all of this merely a short-term economic correction or a sign of deeper issues in the AI landscape? As we explored back in July, lazy comparisons to the dot-com bubble and AI ignorance abound. Andrew Odlyzko, a professor of mathematics at the University of Minnesota and expert on economic bubbles, argues that the AI situation more closely mirrors the early days of electricity – a fundamental shift in how we harness and apply technology.

At ExoBrain we believe that what we’re witnessing with AI isn’t a market cycle, but a fundamental reorganisation of how compute power is applied and valued in the economy. Market turbulence will likely continue as a result of the new, unpredictable and recursive evolution of multiple new technologies, which will drive what we’ll look back on as ‘creative destruction’.

There will be failures. Intel has been caught out by this new wave and has made the wrong calls. Nvidia may have dropped the ball somewhat in rushing to ship its next-generation chip. The pure-play AI companies like OpenAI and Anthropic face intense competition and uncertainty on profit mechanisms, and the cost of pushing the frontier forward remains huge while the next generation of more efficient AI chips is not yet deployed (and now delayed).

Yet, amidst this uncertainty, the tech giants remain well-positioned to weather the storm. Their diversified business models, cash (and data) reserves, and essential role in global business infrastructure provide a buffer against short-term volatility. Amazon, Google, Microsoft, and Meta have all signalled their intention to continue heavy investment in infrastructure. They are scaling up their capital expenditures significantly, with a focus on servers and datacentres to support growing cloud demand across the board. While some are developing their own custom AI chips, Nvidia’s GPUs remain a critical component of their infrastructure. The reported Blackwell delay is likely to be a temporary setback in the broader context of sustained demand for high-performance compute.

Takeaways: For investors and business leaders, the critical challenge is to separate market blips and broader economic shifts (and the simplistic theories that people and traders latch onto) from the long-term transformative potential of AI. While caution is advisable, abandoning AI investments at this juncture would be short-sighted. As we consistently highlight in this newsletter, we are merely at the dawn of a compute-driven revolution. Now is not the time to blink first.

The holy grail of benchmarks

This week, METR provided an update on their novel approach to evaluating AI capabilities, pitting machine performance against human experts across a diverse range of tasks. This new methodology aims to provide an improved understanding of AI progress, moving beyond abstract benchmarks to assess real-world impact.

The AI landscape is dominated by leaderboards and benchmark scores that often fail to translate into meaningful insights about AI’s practical capabilities (as we covered in a previous newsletter). METR’s novel approach addresses this gap by directly comparing AI agent performance to that of human experts on a variety of complicated technical tasks, from cybersecurity to machine learning.

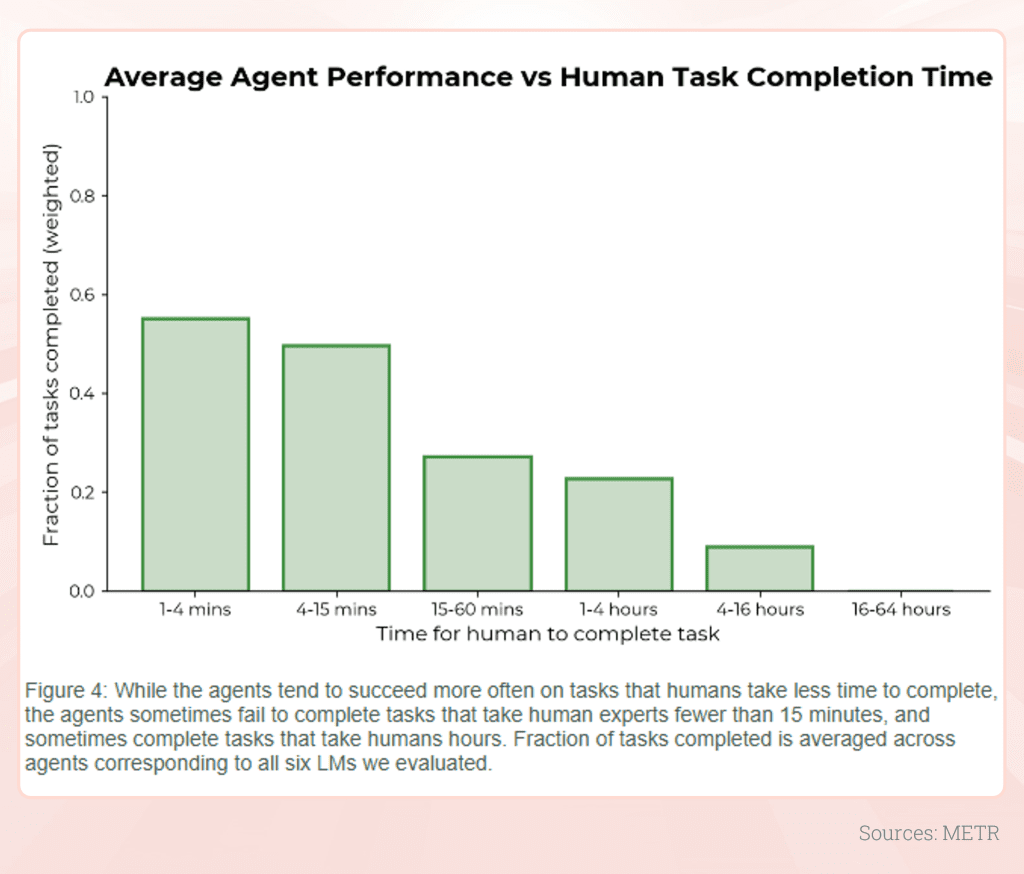

At the heart of METR’s evaluation is a focus on the correlation between human and agent performance. Their findings reveal that while AI agents generally excel at tasks that humans can complete quickly, models like Claude 3.5 and GPT-4o struggle to complete tasks that take human experts hours to solve. This view offers a clearer picture of where AI stands in relation to human capabilities and is essentially the holy grail of AI development in 2024 and the road to AGI… ‘longer horizon’ tasks with all of their dependencies, complexities and need for planning and reasoning.

This chart compares the performance of AI agents to human experts across tasks of varying difficulty. The x-axis represents how long it takes humans to complete different tasks, ranging from 1-4 minutes to 16-64 hours. The y-axis shows the fraction of tasks completed by AI agents, averaged across six different language models.

This is a great new evaluation method. For businesses, a customised version of this could offer a more reliable way to assess where different AIs can most effectively augment or potentially replace human labour. The study’s revelation that AI agents can generally complete tasks at 1/30th the cost of human experts is particularly noteworthy, suggesting significant potential for cost savings and efficiency gains in certain areas. However, the research also highlights the room for improvement in AI’s ability to tackle complex, long-form tasks. It will be fascinating and fundamental to see how the next generation of models perform here, such as GPT-5, Llama 4 and Claude 3.5 Opus. Will they push the line out into long task territory, and what will this mean for job displacement? Or will the line move up, increasing quality but not moving the quantum of automation to a new level?
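For teams wondering what a customised version of this might look like in practice, here is a minimal sketch in Python. It is not METR’s code or methodology. The bucket boundaries, the function names and the model names in the usage note are all our own illustrative assumptions. The idea is simply to group tasks by how long a human expert takes, then average the agents’ success rates across models within each group.

```python
from collections import defaultdict

# Hypothetical buckets: (label, exclusive upper bound in minutes of human expert time).
# These boundaries are illustrative assumptions, not the ones used in METR's chart.
BUCKETS = [
    ("under 15 min", 15),
    ("15-60 min", 60),
    ("1-4 hours", 240),
    ("over 4 hours", float("inf")),
]


def bucket_for(human_minutes):
    """Return the label of the first bucket whose upper bound exceeds the time."""
    for label, upper in BUCKETS:
        if human_minutes < upper:
            return label
    raise ValueError(f"no bucket for {human_minutes!r}")


def success_by_bucket(results):
    """Average agent success per time bucket.

    results: iterable of (model_name, human_minutes, succeeded) tuples.
    Each model's success rate is computed per bucket first, then the
    per-model rates are averaged, so every model carries equal weight.
    """
    outcomes = defaultdict(lambda: defaultdict(list))
    for model, human_minutes, succeeded in results:
        outcomes[bucket_for(human_minutes)][model].append(1.0 if succeeded else 0.0)

    summary = {}
    for label, _ in BUCKETS:
        per_model = outcomes.get(label)
        if not per_model:
            continue  # no tasks recorded in this bucket
        rates = [sum(runs) / len(runs) for runs in per_model.values()]
        summary[label] = sum(rates) / len(rates)
    return summary
```

Feeding in made-up records such as `("model_a", 5, True)` and `("model_b", 300, False)` returns a dictionary mapping each populated bucket to an average success fraction. Whether the line "moves out" or "moves up" with a new model then becomes a question of which buckets improve.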

Takeaways: METR’s new evaluation approach is a great way to better understand where AI capabilities currently sit; many short and medium-sized tasks are in scope and in many cases vastly cheaper to complete with AI. Longer and more complex tasks are the next frontier.

EXO

OpenAI’s forbidden fruit

Sam Altman, CEO of OpenAI, stirred up speculation this week with a social media post featuring a photo of strawberries growing in planters. The caption, “I love summer in the garden,” sent the AI community into a frenzy of interpretation, with many believing it to be a veiled reference to a rumoured new AI planning algorithm codenamed ‘Strawberry’.

In July, Reuters reported on a secret OpenAI project called ‘Strawberry’, aimed at enhancing AI reasoning and enabling autonomous internet navigation. The timing of Altman’s post, amidst growing competition in the AI space, has only fuelled the fire of speculation.

Adding to the intrigue is a mysterious X account, “I rule the world MO”, which has been posting cryptic messages seemingly related to OpenAI’s developments. The account’s posting patterns are unusually frequent and responsive, leading some to speculate whether it might be an AI-powered account testing advanced language models. When this account posted “Welcome to level 2. How do you feel?”, Altman himself responded within hours with “Amazing to be honest,” further stoking curiosity.

The LMSYS chatbot arena once again featured in the mystery, as an anonymous new model called “sus column R” demonstrated impressive reasoning abilities and sparked speculation about its origins and whether it might be related to OpenAI’s rumoured ‘Strawberry’ project.

The peculiarity of the situation isn’t lost on industry observers. Ethan Mollick, a professor at the Wharton School, noted, “OpenAI is the only company whose corporate communication strategy consists of obscure hints, Delphic pronouncements and riddles that could fit into adventure games.” This approach, while unorthodox, has proven effective in generating buzz and maintaining OpenAI’s mystique in the fast-paced world of AI development. The hype has even led to some extreme displays of enthusiasm, with one member of the AI community reportedly getting a strawberry tattoo in anticipation of the rumoured new model.

In a strange twist of fate, amidst all this speculation about advanced AI capabilities, it has come to light that most leading AI models struggle with a seemingly simple task: correctly counting the number of “r” letters in the word “strawberry”. This quirky limitation serves as a reminder that even as we speculate about groundbreaking AI advancements, current models still struggle with some basic tasks.
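For contrast, the same task is a one-liner in conventional code. The snippet below is our own illustration in Python, with variable names chosen for this example. The difficulty for language models is commonly attributed to the way they process text in multi-character tokens rather than letter by letter, though we offer that as a likely explanation rather than a settled one.

```python
# Count occurrences of the letter "r" using Python's built-in str.count.
word = "strawberry"
r_count = word.count("r")

print(f'"{word}" contains {r_count} letter r\'s')  # prints: "strawberry" contains 3 letter r's
```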

Takeaways: Despite the social media frenzy, it’s worth noting that as of yet, nothing concrete has materialised from this flurry of activity. While OpenAI’s teasers have certainly captured attention, the company might need to hurry up and ship actual products. Competitors like Meta, Google, and Anthropic are making significant strides in AI development, with some arguably ahead of OpenAI on several fronts. The AI race is heating up, and cryptic hints can only sustain interest for so long without tangible results.

Weekly news roundup

This week’s news highlights the expanding influence of AI across various sectors, ongoing regulatory scrutiny, advancements in AI research, and the competitive landscape in AI hardware development.

AI business news

- Palantir partners with Microsoft to sell AI to the government (This collaboration underscores the growing interest in AI solutions for government applications, raising questions about surveillance and data privacy.)

- AI use now widespread in US legal sector despite ethical concerns, ABA task force finds (This report highlights the tension between AI adoption and ethical considerations in the legal field, a theme we’ve explored in previous discussions on AI ethics.)

- AI is coming for India’s famous tech hub (This story illustrates the global impact of AI on traditional tech hubs, potentially reshaping the international IT landscape.)

- Forget Midjourney — Flux is the new king of AI image generation and here’s how to get access (The emergence of new AI image generation tools like Flux demonstrates the rapid evolution and competition in the generative AI space.)

- OpenAI reportedly leads $60M round for webcam startup Opal (This investment suggests OpenAI’s interest in expanding its influence beyond language models into visual AI applications.)

AI governance news

- UK starts probe into Amazon’s AI partnership with Anthropic (This investigation reflects growing regulatory scrutiny of big tech collaborations in AI, a recurring theme in our coverage of AI governance.)

- OpenAI says its latest GPT-4o model is ‘medium’ risk (This self-assessment by OpenAI highlights the ongoing debate about AI safety and the need for transparent risk evaluation.)

- As AI transforms cybersecurity, Cisco’s Martin Lee has only one piece of advice for IT managers: prepare for the unexpected (This advice underscores the unpredictable nature of AI’s impact on cybersecurity, a critical concern for businesses and organisations.)

- UK: Creators’ Rights Alliance campaigns against unlicensed AI use (This campaign highlights the ongoing tension between AI development and intellectual property rights, a topic we’ve covered extensively.)

- No one is ready for digital immortality (This article explores the ethical and psychological implications of AI-powered digital avatars, touching on themes of identity and mortality in the AI age.)

AI research news

- CodexGraph: bridging large language models and code repositories via code graph databases (This research could significantly enhance AI’s ability to understand and generate code, potentially revolutionising software development.)

- Fluent Student-Teacher Redteaming (This study explores novel approaches to AI safety, a crucial area of research as AI systems become more powerful.)

- GMAI-MMBench: a comprehensive multimodal evaluation benchmark towards general medical AI (This benchmark could accelerate the development of AI in healthcare, a field with enormous potential for AI applications.)

- Optimus-1: hybrid multimodal memory empowered agents excel in long-horizon tasks (This advancement in AI agents’ memory capabilities could lead to more versatile and capable AI systems.)

- Self-Taught Evaluators (This research into AI self-evaluation could improve the reliability and transparency of AI systems.)

- Predicting the results of social science experiments using LLMs (This study explores the potential of AI to contribute to social science research, potentially opening new avenues for interdisciplinary collaboration.)

AI hardware news

- How chip giant Intel spurned OpenAI and fell behind the times (This story illustrates the high stakes in the AI chip market and the consequences of missing key opportunities.)

- AI chip startup Groq rakes in $640M to grow LPU cloud (This significant funding round highlights the intense competition and investment in specialised AI hardware.)

- Exclusive: Chinese firms stockpile high-end Samsung chips as they await new US curbs, say sources (This report underscores the geopolitical tensions surrounding AI chip supply chains, a recurring theme in our coverage.)

- TSMC shatters records with July surge fueled by Apple, Intel, and Nvidia orders (This surge in chip orders reflects the growing demand for AI-capable hardware across various tech sectors.)

- AMD hopes latest software unleashes MI300’s full potential (This development highlights the importance of software optimisation in maximising AI hardware performance, a crucial factor in the competitive AI chip market.)