Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we deep-dive into the Nvidia GTC conference announcements, and specifically their new Blackwell chip. GPU compute is the fundamental driving force behind AI progress, and also its main indicator:

- Blackwell is a big chip and a big deal; exa-scale ‘AI factories’ packed with these GPUs will pave the way for an age of abundant compute, and for Nvidia to become the most valuable company on earth

- Inflection and Mustafa Suleyman get subsumed by Microsoft, indicating the power of big tech and the challenge of consumer-centric foundation model building

- GPT-5 is a few months away but OpenAI CEO Sam Altman and the rumour mill are setting high expectations

The key themes this week…

Blackwell is a big deal

At their AI ‘festival’ (GTC) this week, Nvidia announced their next-generation super chip: the B200, codenamed Blackwell (after the statistician and game theorist David Blackwell). The basic numbers are predictably huge… 208 billion transistors, 4-30x the performance of the current-generation Hopper chip, plus huge boosts to memory, connectivity and density across the supporting Blackwell datacentre architecture. What’s impressive is that in 2020 we saw the first exaFLOP supercomputer (an entire building-sized machine pumping out more than 1 quintillion floating point operations per second), and with Blackwell, a single rack the size of the fridge in your kitchen is exa-scale. The ultimate expression of this new chip generation is what Nvidia calls the “AI Factory”: 32,000 GB200 super GPUs packed into a datacentre with more internal high-speed bandwidth than the ENTIRE Internet, and 600x the computing power of that ultimate supercomputer from way back in… 2020.
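As a rough sanity check on the “600x” claim, here is a minimal Python sketch. It rests on our own assumptions, not figures from the paragraph above: that one fridge-sized rack holds 72 Blackwell GPUs and delivers about 1.4 exaFLOPS of low-precision AI compute. Those AI FLOPs are not directly comparable to every supercomputer benchmark, so treat the result as indicative only.

```python
# Back-of-envelope check of the "600x" AI factory comparison.
# ASSUMPTIONS (not from the post): 72 GPUs per rack and ~1.4 exaFLOPS of
# low-precision AI compute per rack.
EXA = 10**18                # 1 exaFLOP/s = one quintillion operations per second
GPUS_PER_RACK = 72          # assumed rack configuration
RACK_FLOPS = 1.4 * EXA      # assumed per-rack AI throughput


def factory_flops(total_gpus: int) -> float:
    """Aggregate AI FLOP/s of a factory built from identical racks."""
    racks = total_gpus / GPUS_PER_RACK
    return racks * RACK_FLOPS


# Compare a 32,000-GPU factory with the 2020 machine (~1 exaFLOP/s).
ratio = factory_flops(32_000) / (1 * EXA)
print(f"AI factory vs. 2020 exaFLOP machine: ~{ratio:.0f}x")
```

Under these assumptions the ratio comes out at a little over 600x, in line with the figure quoted.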

Takeaways: With maybe 100-200 of these AI factories to be deployed over the next few years, what does this mean for us humans? We are about to realise that we have been living in a world with a minuscule amount of computational power. Like our medieval ancestors, who could not have imagined the future abundance of food, healthcare, travel and entertainment that would be born from the first industrial revolution, we now face the challenge of adjusting to a future of truly ‘abundant compute’. The difference here is that this will happen in the next few years, not over several centuries.

We’re also going to see a lot more AIs… GPT-4 took 100 days to train back in 2022; it will take <3 days in an AI Factory. We’ll also see bigger, smarter models: Nvidia predict that Blackwell kit will run models of 20+ trillion parameters (nearer to 1/4 the size of a human brain, rather than the 1/100th we see today).
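The two ratios in this paragraph can be reproduced with simple arithmetic. A minimal sketch, assuming a human brain has roughly 100 trillion synapses and treating one model parameter as loosely comparable to one synapse; both are our assumptions (a common but crude analogy), as is the ~1 trillion parameters we use for today’s largest models.

```python
# Back-of-envelope checks on the training-time and brain-scale figures.
# ASSUMPTION: ~100 trillion synapses in a human brain, treating one
# parameter as roughly comparable to one synapse (a crude analogy).
BRAIN_SYNAPSES = 100e12


def speedup(old_days: float, new_days: float) -> float:
    """How many times faster a training run becomes."""
    return old_days / new_days


def brain_fraction(parameters: float) -> float:
    """Model size as a fraction of the assumed brain synapse count."""
    return parameters / BRAIN_SYNAPSES


# GPT-4: ~100 days in 2022 vs. <3 days in an AI Factory
print(f"Training speed-up: >{speedup(100, 3):.0f}x")
# ASSUMPTION: today's largest models are ~1 trillion parameters
print(f"Today (~1T params): 1/{1 / brain_fraction(1e12):.0f} of a brain")
print(f"Blackwell era (20T params): 1/{1 / brain_fraction(20e12):.0f} of a brain")
```

On these assumptions the quoted figures hold up: a more than 33x faster training run, and 20 trillion parameters sits at 1/5 of the assumed synapse count, far closer to the ‘1/4’ than to today’s 1/100.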

With these AIs and ‘accelerated’ computing capabilities, Nvidia’s conference also gave a glimpse of the applications: from new intelligent enterprise software and autonomous industry, to world simulators where time is sped up 1000x to train robots or design new chemistry and biology, to the brains of humanoid machines themselves.

Whilst Blackwell suggests we’re not running out of ‘room at the bottom’ (to reference Richard Feynman’s 1959 assertion that the nano-scale would provide huge scope for machine miniaturisation), we may be nearing the buffers ‘at the top’. Power grids are straining as we build new datacentres, and the ability to plug in these AI factories, not to fabricate the chips, will become the constraining factor. This explains Amazon’s recent purchase of a nuclear-powered datacentre campus (at 960MW this could in theory power around 15 Blackwell AI factories). This post provides an excellent analysis of the power challenges, and a concerning note for us Europeans: we are far behind in building AI-capable datacentres. Today a simple chatbot doesn’t require nearby compute, but in the next few years more advanced, high-bandwidth AI applications may depend on low latency, and therefore on compute located close to their users. The future will be one of intense competition over sovereign AI factory capacity, as much as over the ingenuity to make use of it.
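Working backwards from the ‘around 15 AI factories’ figure gives about 64MW per factory. The sketch below shows this is consistent with a 32,000-GPU factory if each GPU draws roughly 2kW once CPUs, networking and cooling are included. That 2kW all-in figure is a hypothetical assumption for illustration, not an Nvidia specification.

```python
# Rough power budget for Blackwell "AI factories" on a 960MW site.
# ASSUMPTION: ~2 kW of facility power per GPU, all-in (GPU, CPU share,
# networking, cooling); a hypothetical figure, not a vendor spec.
GPUS_PER_FACTORY = 32_000
WATTS_PER_GPU = 2_000
SITE_CAPACITY_MW = 960


def factory_power_mw(gpus: int, watts_per_gpu: float) -> float:
    """Total draw of one factory in megawatts."""
    return gpus * watts_per_gpu / 1_000_000  # watts -> megawatts


def factories_supported(site_mw: float, gpus: int, watts_per_gpu: float) -> int:
    """Whole factories a site can power, ignoring headroom and redundancy."""
    return int(site_mw // factory_power_mw(gpus, watts_per_gpu))


per_factory = factory_power_mw(GPUS_PER_FACTORY, WATTS_PER_GPU)
count = factories_supported(SITE_CAPACITY_MW, GPUS_PER_FACTORY, WATTS_PER_GPU)
print(f"One AI factory: ~{per_factory:.0f} MW; a {SITE_CAPACITY_MW} MW site powers ~{count}")
```

Changing `WATTS_PER_GPU` shows how sensitive the ‘15 factories’ estimate is to the per-GPU power assumption.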

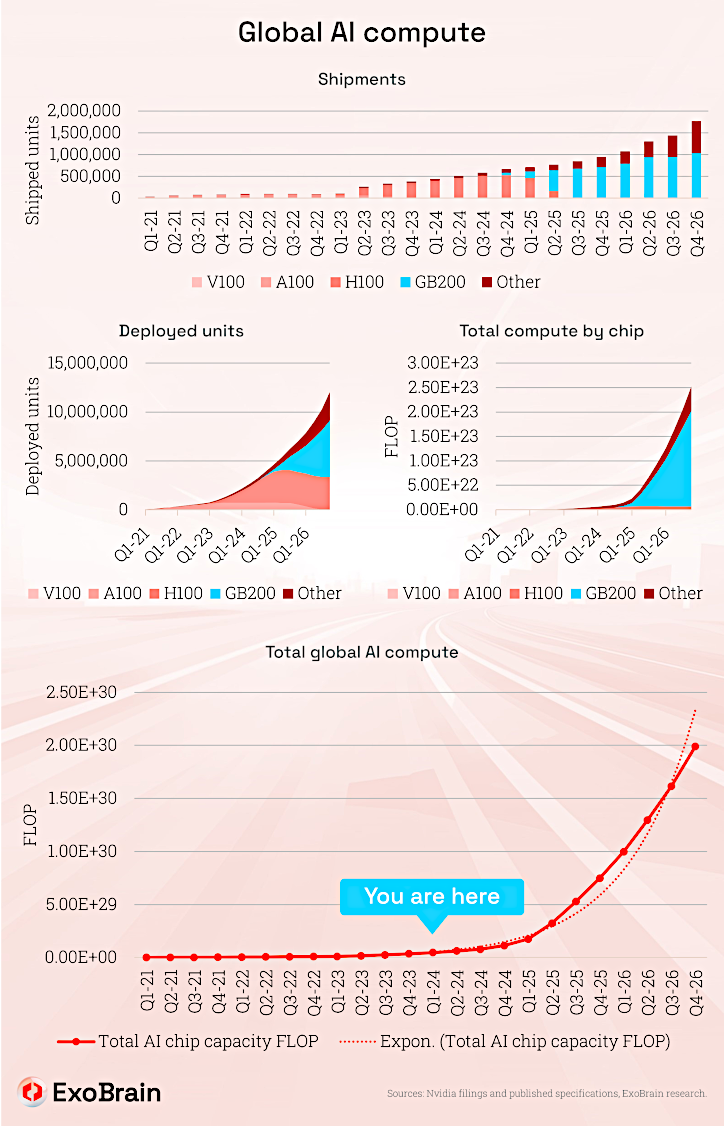

Our newly updated ExoBrain AI compute model predicts 10x growth in AI compute by this time next year, and 50x by the end of 2026. This is hardware only, and does not include the huge gains coming from more efficient models and software techniques. Where today a power user might run 4-5 model instances on and off during the day, in a few short years we’ll each be able to run 2,000-3,000, the vast majority of them autonomous, working tirelessly to advance every facet of our physical and virtual lives.
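To see what the forecast implies year by year, the sketch below converts the headline multiples into constant annual growth rates. It assumes the forecast dates from March 2024, so ‘this time next year’ is 1 year away and ‘the end of 2026’ about 2.75 years away; those dates are our reading, not part of the model itself.

```python
# Convert headline compute-growth multiples into annualised rates.
# ASSUMPTION: forecast made in March 2024, so the horizons are ~1.0 year
# (March 2025) and ~2.75 years (end of 2026).


def annualised_multiple(total_growth: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_growth over `years`."""
    return total_growth ** (1 / years)


first_year = annualised_multiple(10, 1.0)          # 10x in the first year
later_years = annualised_multiple(50 / 10, 1.75)   # remaining 5x over ~1.75 years
overall = annualised_multiple(50, 2.75)            # 50x over the whole period

print(f"First year: {first_year:.1f}x")
print(f"Following ~1.75 years: ~{later_years:.1f}x per year")
print(f"Whole period: ~{overall:.1f}x per year")
```

Read this way, the forecast is front-loaded: roughly 10x in the first year, then around 2.5x a year thereafter.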

Some interesting points:

- We assume that other firms will start to compete more effectively with Nvidia, but outside of some radical breakthrough, that can’t happen fast enough to erode its compute dominance and head start in production deployment. Nvidia is likely to become the most valuable company in the world.

- This is of course assuming there are no disruptions to chip output from the single TSMC facility in Taiwan where ALL of these chips are made today (and that the datacentres can be built or upgraded, though we are not assuming an impossible rate in this regard).

- No matter how fast competitors ramp up or how many H100s are shipped, from 2025 onwards the vast majority of AI computation in this cycle (and potentially the emergence of AGI) will happen on Blackwell, by sheer dint of its relative power.

- It’s a cliché in the AI world to see a “you are here” on an exponential curve. But as of this week’s news, this is a real curve. You are here.

Consumer foundation AI is hard

In a surprise move this week, Microsoft announced that Mustafa Suleyman would be joining them from Inflection AI, and to all intents and purposes the lab would be coming under the wing of the tech giant, via a licensing and staff transfer deal. Suleyman will lead the consumer-focused AI arm at Microsoft across Copilot, Edge and Bing. Inflection posted that they would be shifting focus to enterprise use cases. Pi, their ultra-friendly assistant, had recently received a model upgrade that approached GPT-4 levels while using only 40% of the training compute. But with no brand awareness, distribution, API, or paid consumer subscription option, and tough competition from ChatGPT and Claude, it seems Inflection were not up for the fight. Perhaps Microsoft sees Suleyman as the creative force who can stitch together its consumer offerings more coherently. In other lab news, Stability AI saw the departure of further key technical staff. Despite having substantial GPU compute and having made some progress with its Stable Diffusion image models and StableLM text models, again, not being closely aligned to a big tech firm is proving problematic.

Takeaways: In the current landscape, the big tech firms are the ultra-dominant forces (perhaps now joined by Saudi Arabia, with its planned $40bn AI fund). AI labs need deep pockets, market access and AI GPU ‘factories’, given the huge costs of researching, training and continuously deploying competitive models. Plus, consumer AI is still a challenge, with limited user maturity and willingness to pay for Internet tools. But as the compute abundance equation changes, and open-weight models grow in power and diversity, this concentration of power will lessen. Meta is expected to release its Llama 3 open-weight model to the world in July, and this will be exactly the disruptive event Meta wants to achieve (from its war chest of some 600,000 GPUs).

GPT-5 rumours

As the Claude 3 family of models continues to impress, with new capabilities emerging as more users switch, pressure is mounting on OpenAI to release GPT-5. This week Sam Altman has been busy hyping up the model’s potential and some upcoming features (likely in the planning, autonomous agent and robotics spaces), while also dampening timeline expectations.

Takeaways: The latest consensus is mid-year for GPT-5, with a major step up in capability, although what that step really looks like will be a huge indicator of future AI trends. You can hear Altman’s interview on the Lex Fridman podcast here. Several quotes have emerged from Altman’s publicity activity this week that are worth considering:

- Firstly, that ChatGPT to date has been a revolution in AI “expectation” but not in function… This seems fair, in that much of what AI can achieve is still theoretical and there is a lot of integration to be done. But it also suggests that future capability steps will be more significant.

- He also suggested that companies should not delay innovation, and should learn and integrate now, so that they are ready for these significant future steps in capability as we near AGI. (Naturally we at ExoBrain would agree, but it also just seems the most common-sense approach in an unpredictable world that is about to be flooded with AI compute.)

EXO

This week’s news highlights the massive scale of AI investment and development, with deals between tech giants, billions in funding for nations and companies, and new efforts around AI governance on a global stage.

AI business news

- Apple in talks to let Google’s Gemini power iPhone AI features, Bloomberg News says (This potential deal could significantly impact how iPhone users interact with AI, echoing previous discussions about Apple’s evolving AI strategy.)

- Saudi Arabia plans $40bn push into artificial intelligence, NYT reports (This massive investment shows AI’s growing importance on a global scale and how nations are positioning themselves in the AI race.)

- Neuralink video shows patient playing chess using brain implant (This highlights advances in AI-powered medical devices and raises ethical questions about the intersection of human and machine.)

- Google’s DeepMind is coming for European football tactics (DeepMind’s foray into sports analytics shows AI’s potential to disrupt established strategies, potentially inspiring new approaches across industries.)

- BBC develops AI plans and talks to Big Tech over archives access (This shows how traditional media are exploring AI to manage vast content libraries, potentially unlocking new insights and revenue streams.)

AI governance news

- The first ‘Fairly Trained’ AI large language model is here (Certified as trained without unlicensed copyrighted data, this model could serve as a template for addressing training-data consent and copyright, a major concern we’ve discussed frequently.)

- Google hit with $270m fine in France as authority finds news publishers’ data was used for Gemini (This fine highlights the ongoing struggle between tech giants and regulators over AI data usage, similar to the OpenAI copyright lawsuit.)

- India drops plan to require approval for AI model launches (This shows a more relaxed approach to AI governance, potentially fostering rapid development but also raising concerns about responsible deployment.)

- US Leads Charge For Global AI Regulations At UN (This demonstrates how AI governance is becoming a global discussion, highlighting the need for international collaboration on ethical and safe AI use.)

- GitHub’s latest AI tool can automatically fix code vulnerabilities (This tool could improve software security and reduce the risks of AI-related exploits, addressing a key concern amid the rapid adoption of AI tools.)

AI research news

- Quiet-STaR: Language Models Can Teach Themselves to Think Before Speaking (This research focuses on improving AI’s reasoning abilities, potentially making it more reliable and aligned with human expectations.)

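- Introducing RAG 2.0 – Contextual AI (This approach to Retrieval Augmented Generation, which trains the retriever and language model together end-to-end rather than bolting separate components together, could improve AI’s ability to access and use relevant information, enhancing output quality.)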

- Apple MM1: Methods, Analysis & Insights from Multimodal LLM Pre-training (This paper offers insights into how multimodal models like Gemini are trained, which could be useful for understanding their strengths and limitations.)

- LlamaFactory: Unified Efficient Fine-Tuning of 100+ Language Models (This tool streamlines AI model development, potentially making it easier for businesses to create custom AI solutions.)

- Evolutionary Optimization of Model Merging Recipes (This research explores new ways to combine AI models, which could lead to more powerful and versatile AI systems in the future.)

AI hardware news

- Intel awarded $8.5 billion in funding for building chipmaking fabs – CHIPS Act funding to boost US semiconductor manufacturing (This government support underscores the importance of specialized AI hardware for national competitiveness in the AI landscape.)

- Nvidia And Dell Build An AI Factory Together (This partnership signals growing demand for end-to-end AI solutions and the need for collaboration in the hardware space.)

- Memory chipmaker Micron’s shares surge as AI boom drives Q3 forecast (This demonstrates the financial impact of AI on the hardware industry and the increased demand for memory in AI systems.)

- NVIDIA’s Project GR00T, Running on the Jetson Thor, Aims to Deliver Embodied AI for Humanoid Robots (This project pushes the boundaries of AI in robotics, potentially leading to new applications and interactions between humans and machines.)

- Foundry Emerges From Stealth With $80m For Purpose-Built AI Cloud (This investment highlights the growing market for specialized AI cloud services, offering more options for businesses looking to leverage AI.)