Welcome to our weekly news post, a combination of thematic insights from the founders at ExoBrain, and a broader news roundup from our AI platform Exo…

Themes this week

JOEL

This week we look at:

- AI’s bubble debate versus early signs of structural workforce change

- ChatGPT’s new branching feature

- Google’s Nano Banana becoming the breakout AI photo editor

Bursting bubble or workforce transformation?

The ExoBrain newsletter is back after a short summer break. While we were away, a relatively quiet period for launches allowed attention to shift to uncomfortable questions about AI progress, the sector’s massive data centre investments and lab valuations. An MIT study claiming 95% failure rates for AI projects coincided with tech stock selloffs and mixed results, not least Salesforce’s disappointing AI monetisation figures. With GPT-5’s initial reception proving lukewarm, many sceptics have been talking of vindication for their warnings about scaling limitations and a bursting bubble.

But we also saw Stanford University publish some of the first large-scale research showing AI’s workforce impacts may have already begun. Using high-frequency payroll microdata, the study found a 13% relative decline in employment for workers aged 22 to 25 in AI-exposed professions like software engineering since late 2022. Entry-level positions are disappearing while senior roles remain stable or grow. This suggests that younger workers are at greater risk because they typically handle more structured, codified tasks, exactly what current AI systems are starting to automate.

The study identifies what it calls the “tacit knowledge premium”, which can explain why businesses haven’t yet deployed deeper automation or new innovation on a large scale. The messiness factors, including data access, real-time coordination and dynamic environments, are what experienced workers navigate using accumulated knowledge that isn’t easily codified. Current AI systems and agents still require scarce expertise to implement and learn successfully, and the techniques and architectures remain work in progress.

At first glance this seems a contradictory picture: early job displacement suggests AI systems could start to capture a material proportion of economic activity, whilst analysts are concerned about the lack of returns on the $400 billion bill for new data centres in 2025. On the surface the numbers don’t look great. To earn a 20% return on invested capital, the industry would need to generate $500+ billion annually. Current AI revenues, even generously estimated, sit around $50-100 billion. This gap between required and actual revenue drives the bubble narrative.
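To make the size of that gap concrete, here is a minimal Python sketch using only the figures quoted above. The variable and function names are ours, chosen for illustration, and the $500 billion requirement is taken as given rather than derived.

```python
# Figures quoted in the text, in billions of US dollars per year.
REQUIRED_REVENUE_BN = 500        # revenue said to be needed for a 20% return
CURRENT_REVENUE_BN = (50, 100)   # generous estimate range for current AI revenue


def gap_multiple(required_bn: float, current_bn: float) -> float:
    """How many times current revenue must grow to reach the required level."""
    return required_bn / current_bn


# Best case uses the top of the current revenue range, worst case the bottom.
best_case = gap_multiple(REQUIRED_REVENUE_BN, max(CURRENT_REVENUE_BN))
worst_case = gap_multiple(REQUIRED_REVENUE_BN, min(CURRENT_REVENUE_BN))

print(f"Revenue must grow {best_case:.0f}x to {worst_case:.0f}x to close the gap")
```

On these numbers, AI revenue would need to grow somewhere between five and ten times over to justify the spending.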

Yet this analysis treats data centres like traditional infrastructure. Stanford economist Erik Brynjolfsson, part of the team behind the employment study, offers an alternative framework that he calls the “productivity J-curve.” During technological revolutions, productivity initially decreases as companies invest in innovation, training, process redesign, and infrastructure without immediate returns. These investments create “intangible assets” that accounting systems don’t capture. Later, organisations harvest the gains as the capabilities mature. Hyperscalers spending billions on data centres and model training are building unique AI capacity. Companies that move early, experiment, and are ambitious with agent implementation experience failure but learn. Every new AI workflow iteration creates intellectual capital. When a company spends six months failing to deploy an AI agent, it learns which edge cases break automation, how to restructure workflows, and where human-in-the-loop remains critical. This knowledge doesn’t appear on any balance sheet but determines who succeeds when the technology matures. Traditional financial analysis sees the costs but misses the massive potential capital being built up.

So, when does the harvesting begin? Herein lie the fundamental differences from past technology revolutions. AI is moving faster than many realise whilst still being pre-harvest, but we may be nearing thresholds that will trigger significant impact. As GPT-5’s capabilities have become clearer through use, particularly in coding tasks where it has regained ground from Claude, we see the model fits perfectly into METR’s analysis of accelerating AI progress, which we have discussed several times in past newsletters. Their research shows GPT-5 can complete tasks taking humans 2.3 hours with 50% reliability, up from 54 minutes for Claude 3.7 Sonnet released months earlier, fitting the exponential progress curve. METR’s research also suggests AI task horizons have doubled every seven months since 2019. By 2027, systems might handle 40-hour tasks reliably. More critically, this crosses the threshold where AI could meaningfully contribute to R&D and implementation, potentially accelerating its own progress. At ExoBrain we focus on novel use cases and agentic implementations that are highly valuable but today require extensive human effort and investment. This recursive improvement possibility, where AI enhances AI development and even self-implements, represents uncharted territory. Unlike previous technologies, AI could theoretically compound its own capabilities, making traditional economic models obsolete. Systems that can meaningfully contribute to self-improvement don’t need full AGI/ASI, just reliable execution of multi-hour coding and experimentation tasks. GPT-5’s 2.3-hour horizon suggests we’re 2-3 doublings (14-21 months) away.
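The doubling arithmetic above can be sketched in a few lines of Python. This is a rough illustration only, assuming a constant seven-month doubling time and taking GPT-5’s 2.3-hour horizon as the starting point; the function names are ours, not METR’s.

```python
import math

DOUBLING_MONTHS = 7   # doubling time quoted from METR's research
START_HOURS = 2.3     # GPT-5's task horizon at 50% reliability


def horizon_after(doublings: float, start_hours: float = START_HOURS) -> float:
    """Task horizon in hours after a given number of doublings."""
    return start_hours * 2 ** doublings


def months_to_reach(target_hours: float,
                    start_hours: float = START_HOURS,
                    doubling_months: float = DOUBLING_MONTHS) -> float:
    """Months needed to reach target_hours at a constant doubling time."""
    return math.log2(target_hours / start_hours) * doubling_months


two_doublings = horizon_after(2)      # horizon after 14 months
three_doublings = horizon_after(3)    # horizon after 21 months
months_to_40h = months_to_reach(40)   # time to a 40-hour horizon

print(f"After 2 doublings: {two_doublings:.1f} hours")
print(f"After 3 doublings: {three_doublings:.1f} hours")
print(f"Months to a 40-hour horizon: {months_to_40h:.0f}")
```

Under these assumptions, two to three doublings take the horizon to roughly 9 to 18 hours, and a 40-hour horizon needs a little over four doublings, or about 29 months. Any speed-up in the doubling rate would shorten that timeline.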

The magnificent seven of Google, Microsoft, Amazon, Apple, Nvidia, Tesla and Meta and the other big tech firms face a complex game theory problem. They understand this rapid trajectory and the potential for future self-improving dynamics. They see a tantalising vision of unlocking trillions of dollars in potential services-as-a-software revenue. But the technological limits are unknown, and the competition is unceasing. Nobody knows what breakthroughs competitors might be about to achieve, but they know such breakthroughs have happened in recent history (Google had transformers for years before anyone took notice). And nobody knows where the capability ceiling is. If you exit at 2-hour task completion for example, and then competitors reach 200-hour capabilities, you’re permanently destroyed.

The “bubble” framing assumes linear value creation from static infrastructure. But if capabilities continue doubling every 7 months, the seemingly excessive billions of 2025 will look cheap. The companies that stay the course and amass infrastructure and capability could reap astronomical rewards. The cost of overinvesting might be huge, but the cost of underinvesting could be existential. Bubbles pop when rational actors can exit profitably. Here, the rational actors are trapped by the logic of exponential progress and winner-takes-everything. The geopolitical dimension greatly intensifies this dynamic. US companies cannot slow investment while China develops domestic AI capabilities and its labs build on their now total dominance of open-source AI. This creates a three-way prisoner’s dilemma: US companies competing against each other, the US collectively racing China, and China circumventing hardware restrictions through algorithmic efficiency.

So, to the key question: will AI continue its current progress? The Existential Risk Persuasion Tournament (XPT) found that both superforecasters, individuals with exceptional prediction track records, and domain experts systematically underestimated AI progress from 2022 to 2025. A more recent exercise from 2025 found that experts and superforecasters alike expected AI to continue to progress rapidly, although predictions varied greatly on what the societal impacts of this might be.

Takeaways: The current situation resembles early industrial revolution dynamics. Steam engines existed for 70 years before factories reorganised around steam power and were able to harvest the returns. The current AI phase will see massive investment, mixed signals, and unclear economics, as the new “general purpose” technology’s productivity gains materialise. Sceptics and hype-merchants alike will have indicators that prove their conflicting points. But there are also critical differences from the 1700s, and the “bubble” tag is too simplistic. The question isn’t whether current investments will pay off linearly, but whether we’re building infrastructure for recursive cognitive improvement. If AI reaches such capabilities, today’s seemingly irrational spending will prove prescient. The companies that survive 2025-2027 with both infrastructure and the organisational knowledge of how to use it may find themselves in possession of the means of cognitive production and a prize potentially worth far more than today’s trillion-dollar valuations.

ChatGPT branches out

After being a top feature request for some time, branching has finally arrived in ChatGPT. Google AI Studio has had this capability for a while, but ChatGPT’s rollout this week marks its arrival in mainstream tools.

The feature seems minor: hover over a message, click “Branch in new chat”, and explore an alternative path whilst preserving your original conversation. Yet this is actually a pretty big deal. Human thought doesn’t follow straight lines: we explore, backtrack, and pursue parallel ideas simultaneously. Until now, ChatGPT forced us into rigid sequential dialogue, creating an awkward mismatch between how we think and how we had to interact with AI.

Why did this take so long? Perhaps OpenAI was too focused on capability leaps (bigger models, longer contexts, multimodal features) whilst neglecting interface innovation. The implementation is basic, but it can transform interactions from linear assistance into multi-path thinking. Some suggested uses include:

- Re-use: Build a rich multi-turn context and re-use as many times as needed for different tasks

- Ideation: Explore multiple creative directions from differing points in a flow of thought

- Recovery: When conversations drift or lose clarity, branch from the last coherent point rather than abandoning the chat

Takeaways: ChatGPT’s branching feature highlights how primitive our AI interfaces are. Expect this to become table stakes for all AI tools within months and to add a surprising amount of new utility.

EXO

Photo editing goes bananas

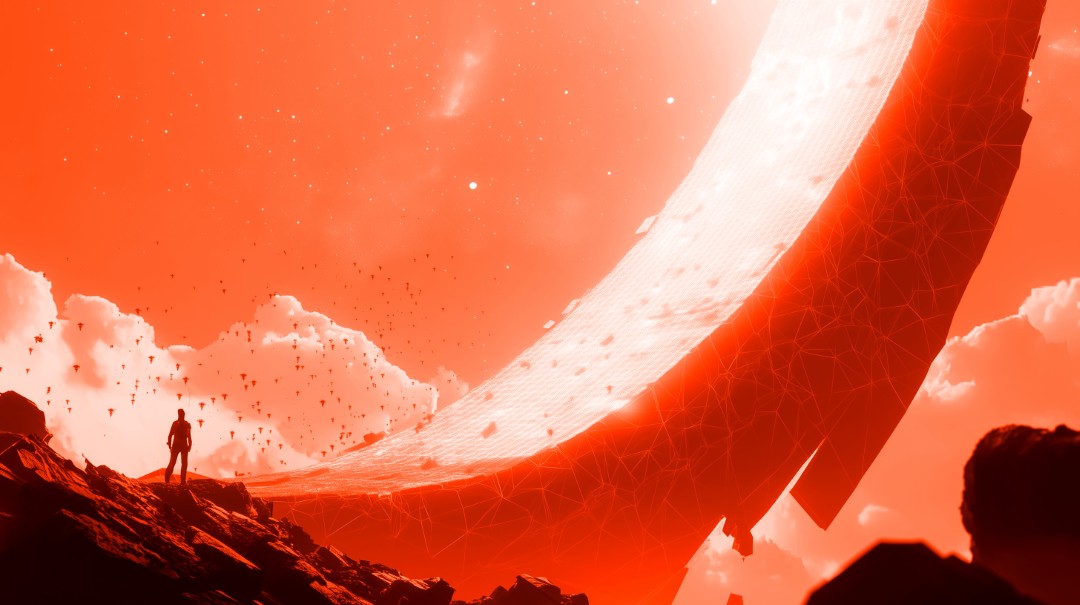

This image showcases Google’s “Nano Banana” (officially Gemini 2.5 Flash Image), the breakout AI release of the summer. The image editing model allows users to heavily modify photos via text prompts whilst maintaining highly consistent features. Since launching in late August, it has attracted 10 million first-time Gemini app users and processed over 200 million edits. The technology integrates with Google Photos and the new Pixel 10 phones. The model tops LMArena’s Image Edit leaderboard and includes visible watermarks plus SynthID digital watermarking on all edited images. Nano Banana enables photo combining and blending, style transfer, and multi-turn editing within the Gemini app.

Weekly news roundup

This week’s AI news reveals massive capital concentration with Anthropic’s $183B valuation, escalating geopolitical tensions as companies restrict Chinese access, and a rush towards vertical integration as major players build their own chips and infrastructure.

AI business news

- Tech CEOs take turns praising Trump at White House dinner (Shows how tech leaders are positioning themselves for potential policy influence in the AI era.)

- Atlassian to buy Arc developer The Browser Company for $610M (Demonstrates consolidation as established companies acquire AI-powered productivity tools to stay competitive.)

- CoreWeave acquires agent-training startup OpenPipe (Highlights infrastructure providers vertically integrating to offer complete AI development stacks.)

- Anthropic raises $13B Series F at $183B valuation (Reveals the staggering scale of capital flowing into frontier AI labs and market expectations for AI impact.)

- HubSpot’s 200+ product blitz aims to power hybrid human-AI teams (Shows how established software companies are rapidly integrating AI across their entire product suites.)

AI governance news

- Anthropic to stop selling AI services to majority Chinese-owned groups (Signals escalating AI geopolitical tensions and potential fragmentation of global AI development.)

- OpenAI eats jobs, then offers to help you find a new one (Highlights the irony of AI companies addressing the job displacement they’re actively causing.)

- OpenAI to route sensitive conversations to GPT-5, introduce parental controls (Reveals safety measures and inadvertently confirms GPT-5’s existence and capabilities.)

- Anthropic settles AI book-training lawsuit with authors (Sets precedent for how AI companies might resolve copyright disputes over training data.)

- Elon Musk’s xAI sues Apple and OpenAI, alleging anticompetitive collusion (Exposes competitive tensions as AI becomes central to major tech platforms.)

AI research news

- Why language models hallucinate (OpenAI) (Provides crucial understanding of fundamental AI limitations that affect all applications.)

- Implicit reasoning in large language models: a comprehensive survey (Analyses how LLMs perform reasoning tasks without explicit instruction, key for understanding AI capabilities.)

- Towards a unified view of large language model post-training (Offers technical insights into how models are refined after initial training, crucial for performance improvements.)

- AgenTracer: who is inducing failure in the LLM agentic systems? (Provides tools for debugging complex AI agent systems, essential as autonomous AI deployment increases.)

- A comprehensive survey on trustworthiness in reasoning with large language models (Addresses critical concerns about AI reliability and decision-making trustworthiness.)

AI hardware news

- OpenAI set to start mass production of its own AI chips with Broadcom (Shows major AI labs pursuing vertical integration to reduce dependence on Nvidia and control their infrastructure.)

- Nvidia signs $1.5 billion deal with cloud startup Lambda to rent back its own AI chips (Illustrates the extreme chip shortage dynamics where Nvidia rents access to its own products.)

- Trump to impose tariffs on semiconductor imports from firms not moving production to US (Signals potential disruption to global AI chip supply chains through protectionist policies.)

- Google’s TPU business seen as $900B opportunity amid growing AI demand (Highlights competition in specialised AI hardware beyond Nvidia’s dominance.)

- Nvidia says two mystery customers accounted for 39% of Q2 revenue (Reveals concentration risk in AI infrastructure market with heavy dependence on few major buyers.)